23a204d75f91c85bb542269447dbfa2164c695ce,ch13/wob_click_train.py,,,#,27

Before Change

env = wob_vnc.MiniWoBCropper(env)

env.configure(remotes=REMOTE_ADDR)

obs = env.reset()

net = model_vnc.Model(input_shape=(3, wob_vnc.HEIGHT, wob_vnc.WIDTH),

n_actions=env.action_space.n)

print(net)

obs, reward, done, info = step_env(env, env.action_space.sample())

obs_v = Variable(torch.from_numpy(np.array(obs)))

r = net(obs_v)

print(r[0].size(), r[1].size())

pass

After Change

agent = ptan.agent.PolicyAgent(lambda x: net(x)[0], cuda=args.cuda,

apply_softmax=True)

exp_source = ptan.experience.ExperienceSourceFirstLast(

[env], agent, gamma=GAMMA, steps_count=REWARD_STEPS, vectorized=True)

// obs, reward, done, info = step_env(env, env.action_space.sample())

// obs_v = Variable(torch.from_numpy(np.array(obs)))

// r = net(obs_v)

// print(r[0].size(), r[1].size())

for idx, exp in enumerate(exp_source):

print(exp)

if idx > 100:

break

time.sleep(0.5)

pass

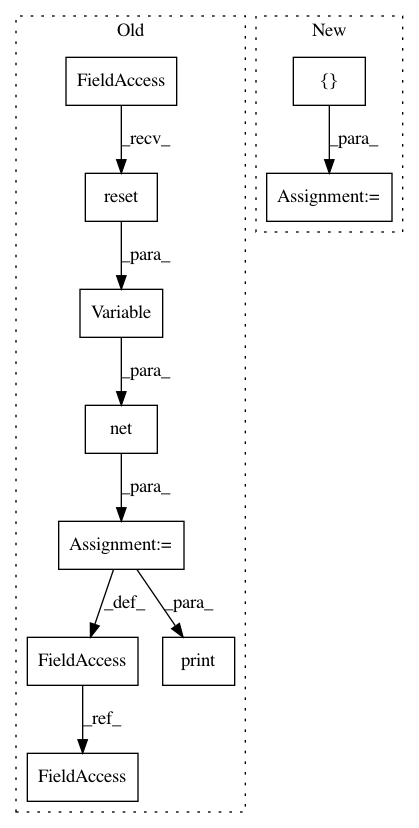

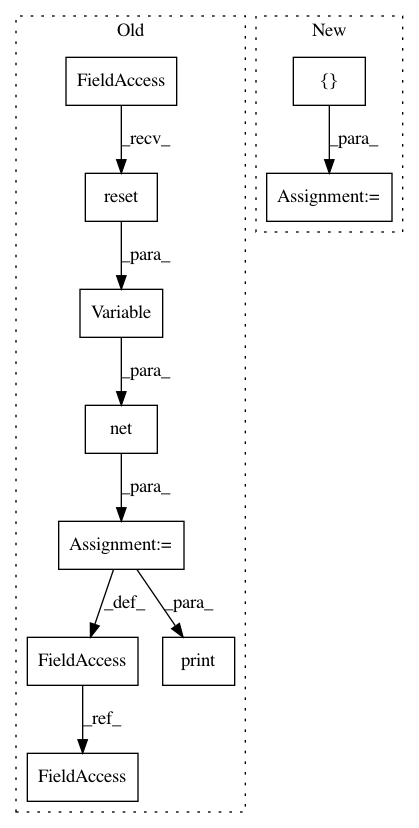

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 10

Instances

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: 23a204d75f91c85bb542269447dbfa2164c695ce

Time: 2018-01-19

Author: max.lapan@gmail.com

File Name: ch13/wob_click_train.py

Class Name:

Method Name:

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: a0113631d7e9d6ec65531457ce03e06c902a4d0b

Time: 2017-10-17

Author: max.lapan@gmail.com

File Name: ch06/01_dqn_pong.py

Class Name:

Method Name:

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: 3bb0fd78af4f9dbddf7c01dde71844a283099650

Time: 2017-10-16

Author: max.lapan@gmail.com

File Name: ch06/01_dqn_pong.py

Class Name:

Method Name: