00726c8b57ad409363ede0e754c0137d3fcc71cc,chapter04/gamblers_problem.py,,figure_4_3,#,23

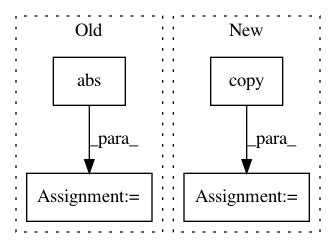

Before Change

                action_returns.append(
                    HEAD_PROB * state_value[state + action] + (1 - HEAD_PROB) * state_value[state - action])
            new_value = np.max(action_returns)
            delta += np.abs(state_value[state] - new_value)
            # update state value
            state_value[state] = new_value
        if delta < 1e-9:
            break

After Change

    # value iteration
    while True:
        old_state_value = state_value.copy()
        sweeps_history.append(old_state_value)
        for state in STATES[1:GOAL]:
            # get possible actions for current state

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: ShangtongZhang/reinforcement-learning-an-introduction

Commit Name: 00726c8b57ad409363ede0e754c0137d3fcc71cc

Time: 2019-06-12

Author: wlbksy@126.com

File Name: chapter04/gamblers_problem.py

Class Name:

Method Name: figure_4_3

Project Name: rlworkgroup/garage

Commit Name: f33a03ea4d71525eabadbe22f31ad0a91607b134

Time: 2019-02-05

Author: rjulian@usc.edu

File Name: garage/envs/point_env.py

Class Name: PointEnv

Method Name: step

Project Name: HyperGAN/HyperGAN

Commit Name: 594da79c98f360331613f786918f08957c39d13c

Time: 2019-12-05

Author: mikkel@255bits.com

File Name: hypergan/trainers/simultaneous_trainer.py

Class Name: SimultaneousTrainer

Method Name: _create
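
Illustration for the first instance (chapter04/gamblers_problem.py, figure_4_3): both fragments above are cut off, so the following is only a sketch of what a complete loop in the After Change style might look like, not the commit's actual code. It keeps the names `state_value`, `old_state_value`, `sweeps_history`, `GOAL` and `HEAD_PROB` from the fragments. Everything else is an assumption: the values `GOAL = 100` and `HEAD_PROB = 0.4`, the initialization, the final convergence check (largest single-state change compared against `1e-9`, rather than the summed `delta` of the Before Change fragment), and the use of plain Python lists in place of NumPy arrays to keep the sketch dependency-free.

```python
GOAL = 100        # assumed value; the fragments only show the name
HEAD_PROB = 0.4   # assumed value; the fragments only show the name
THETA = 1e-9      # threshold taken from the Before Change fragment


def value_iteration(goal=GOAL, head_prob=HEAD_PROB, theta=THETA):
    """Return (final state values, list of per-sweep snapshots)."""
    # state_value[s] estimates the chance of reaching `goal` from capital s.
    # Assumed initialization: zero everywhere except the goal state.
    state_value = [0.0] * (goal + 1)
    state_value[goal] = 1.0

    sweeps_history = []
    while True:
        # Snapshot the values before the sweep, as in the After Change fragment.
        old_state_value = list(state_value)
        sweeps_history.append(old_state_value)

        for state in range(1, goal):
            # Possible stakes: 0 up to the largest bet that stays within [0, goal].
            actions = range(min(state, goal - state) + 1)
            action_returns = [
                head_prob * state_value[state + action]
                + (1 - head_prob) * state_value[state - action]
                for action in actions
            ]
            # In-place update: later states in this sweep see the new value.
            state_value[state] = max(action_returns)

        # Assumption: stop when no state changed by more than theta in this sweep.
        delta = max(abs(new - old)
                    for new, old in zip(state_value, old_state_value))
        if delta < theta:
            sweeps_history.append(list(state_value))
            break

    return state_value, sweeps_history
```

Calling `values, history = value_iteration()` returns the converged estimates together with one snapshot per sweep. Keeping the snapshots is what allows the intermediate value functions to be plotted afterwards, which the summed-`delta` version in the Before Change fragment does not retain.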