db7b74579181f9cbae3583f447d83148714a1c3d,stanza/models/classifiers/cnn_classifier.py,CNNClassifier,forward,#CNNClassifier#Any#Any#,83

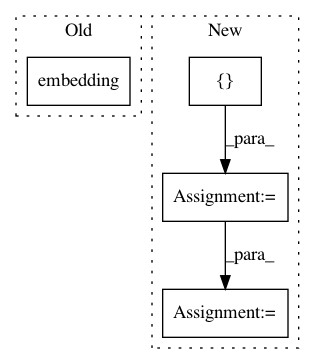

Before Change

        new_word = word
    if new_word in self.vocab_map:
        idx = torch.tensor(self.vocab_map[new_word], requires_grad=False, device=device)
        input_vectors.append(self.embedding(idx))
        continue
    if new_word[-1] == "'":

After Change

        begin_pad_width = random.randint(0, max_phrase_len - len(phrase))
        end_pad_width = max_phrase_len - begin_pad_width - len(phrase)
        indices = []
        unknowns = []
        for i in range(begin_pad_width):
            indices.append(PAD_ID)
        for word in phrase:
            # our current word vectors are all entirely lowercased
            word = word.lower()
            if word in self.vocab_map:
                indices.append(self.vocab_map[word])
                continue
            new_word = word.replace("-", "")
            # google vectors have words which are all dashes
            if len(new_word) == 0:
                new_word = word
            if new_word in self.vocab_map:
                indices.append(self.vocab_map[new_word])
                continue
            if new_word[-1] == "'":
                new_word = new_word[:-1]
                if new_word in self.vocab_map:
                    indices.append(self.vocab_map[new_word])
                    continue
            # TODO: split UNK based on part of speech? might be an interesting experiment
            unknowns.append(len(indices))
            indices.append(PAD_ID)
        for i in range(end_pad_width):
            indices.append(PAD_ID)
        indices = torch.tensor(indices, requires_grad=False, device=device)
        input_vectors = self.embedding(indices)
        for unknown in unknowns:
            input_vectors[unknown, :] = self.unk
        # we will now have an N x emb_size tensor
        # this is the input to the CNN
        # there are two ways in which this padding is suboptimal
        # the first is that for short sentences, smaller windows will
        # be padded to the point that some windows are entirely pad
        # the second is that a sentence S will have more or less padding
        # depending on what other sentences are in its batch
        # we assume these effects are pretty minimal
        # reshape x to 1xNxE
        x = input_vectors.unsqueeze(0)
        input_tensor.append(x)
    x = torch.stack(input_tensor)
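The index-building step of the changed code can be tried in isolation. The following is a minimal sketch, not the project's implementation: `build_indices` is a hypothetical helper name, `PAD_ID = 0` is an assumed value, and the trailing-character check assumes an apostrophe. It leaves out the tensor work and shows only how the padded index list and the list of unknown positions are collected, so that a single embedding lookup can replace the per-word lookups of the earlier version.

```python
import random

PAD_ID = 0  # assumed value; the real constant is defined elsewhere in the project


def build_indices(phrase, vocab_map, max_phrase_len, rng=random):
    """Return (indices, unknowns) for one phrase, padded to max_phrase_len.

    Hypothetical helper mirroring the lookup order in the listing above.
    """
    # Split the required padding randomly between the start and the end.
    begin_pad_width = rng.randint(0, max_phrase_len - len(phrase))
    end_pad_width = max_phrase_len - begin_pad_width - len(phrase)

    indices = [PAD_ID] * begin_pad_width
    unknowns = []
    for word in phrase:
        word = word.lower()
        # Spellings tried in order: as-is, dashes removed, trailing apostrophe removed.
        new_word = word.replace("-", "") or word  # keep words that are all dashes
        candidates = [word, new_word]
        if new_word.endswith("'"):
            candidates.append(new_word[:-1])
        for candidate in candidates:
            if candidate in vocab_map:
                indices.append(vocab_map[candidate])
                break
        else:
            # Unknown word: record its position and store a placeholder index.
            unknowns.append(len(indices))
            indices.append(PAD_ID)
    indices.extend([PAD_ID] * end_pad_width)
    return indices, unknowns
```

Each position listed in `unknowns` holds a placeholder `PAD_ID`, which the changed code then overwrites with `self.unk` after the embedding lookup.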

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: stanfordnlp/stanza

Commit Name: db7b74579181f9cbae3583f447d83148714a1c3d

Time: 2020-06-15

Author: horatio@gmail.com

File Name: stanza/models/classifiers/cnn_classifier.py

Class Name: CNNClassifier

Method Name: forward

Project Name: analysiscenter/batchflow

Commit Name: 4c50261df4847bdfd7c8067307e8532f96d04104

Time: 2019-08-02

Author: Tsimfer.SA@gazprom-neft.ru

File Name: batchflow/models/torch/encoder_decoder.py

Class Name: EncoderDecoder

Method Name: body

Project Name: dpressel/mead-baseline

Commit Name: 71bd73748b835de5ae20bdc90ce4321e47f4c2b2

Time: 2019-09-25

Author: dpressel@gmail.com

File Name: python/eight_mile/tf/layers.py

Class Name: EmbeddingsStack

Method Name: call