ba164c0dbb3d8171004380956a88431f4e8248ba,onmt/Models.py,Embeddings,make_positional_encodings,#Embeddings#Any#Any#,51

Before Change

    for i in range(dim):
        for j in range(max_len):
            k = float(j) / (10000.0 ** (2.0*i / float(dim)))
            pe[j, 0, i] = math.cos(k) if i % 2 == 1 else math.sin(k)
    return pe

def load_pretrained_vectors(self, emb_file):
    if emb_file is not None:

After Change

def make_positional_encodings(self, dim, max_len):
    pe = torch.arange(0, max_len).unsqueeze(1).expand(max_len, dim)
    div_term = 1 / torch.pow(10000, torch.arange(0, dim * 2, 2) / dim)
    pe = pe * div_term.expand_as(pe)
    pe[:, 0::2] = torch.sin(pe[:, 0::2])
    pe[:, 1::2] = torch.cos(pe[:, 1::2])
    return pe.unsqueeze(1)

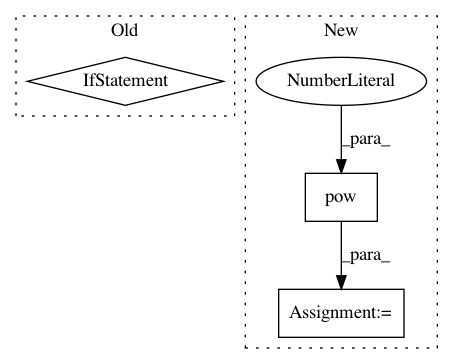

def load_pretrained_vectors(self, emb_file):

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: OpenNMT/OpenNMT-py

Commit Name: ba164c0dbb3d8171004380956a88431f4e8248ba

Time: 2017-08-01

Author: bpeters@coli.uni-saarland.de

File Name: onmt/Models.py

Class Name: Embeddings

Method Name: make_positional_encodings

Project Name: arraiy/torchgeometry

Commit Name: 7f0eb809f1509c452d85000fd002b12c22e358ca

Time: 2019-08-22

Author: ducha.aiki@gmail.com

File Name: kornia/filters/kernels.py

Class Name:

Method Name: gaussian

Project Name: mathics/Mathics

Commit Name: 7ed2f75e6799b3421a52da2c08842e41177a50ab

Time: 2016-08-23

Author: Bernhard.Liebl@gmx.org

File Name: mathics/builtin/graphics.py

Class Name: LABColor

Method Name: to_xyza
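
For the OpenNMT-py instance (`make_positional_encodings`), the change replaces a nested Python loop with broadcast tensor operations. The sketch below reproduces both forms and checks that they agree. It uses NumPy rather than torch so that it runs without a deep-learning install. The function names `positional_encodings_loop` and `positional_encodings_vectorized` are hypothetical and do not appear in the commit. The explicit `float64` dtype is also an assumption added here: the recorded snippet relies on `torch.arange` returning a floating-point tensor, which recent PyTorch versions no longer do for integer arguments.

```python
import math

import numpy as np


def positional_encodings_loop(dim, max_len):
    """Nested-loop form, mirroring the 'Before Change' snippet."""
    pe = np.zeros((max_len, 1, dim))
    for i in range(dim):
        for j in range(max_len):
            # Position j scaled by a frequency that depends on channel i.
            k = float(j) / (10000.0 ** (2.0 * i / float(dim)))
            # Odd channels take the cosine, even channels the sine.
            pe[j, 0, i] = math.cos(k) if i % 2 == 1 else math.sin(k)
    return pe


def positional_encodings_vectorized(dim, max_len):
    """Broadcast form, mirroring the 'After Change' snippet."""
    # Column of positions, shape (max_len, 1).
    positions = np.arange(max_len, dtype=np.float64)[:, None]
    # One inverse frequency per channel, shape (dim,): 1 / 10000^(2i/dim).
    exponents = np.arange(0, dim * 2, 2, dtype=np.float64) / dim
    div_term = 1.0 / np.power(10000.0, exponents)
    # Broadcasting yields shape (max_len, dim).
    pe = positions * div_term
    pe[:, 0::2] = np.sin(pe[:, 0::2])  # even channels
    pe[:, 1::2] = np.cos(pe[:, 1::2])  # odd channels
    # Insert the singleton batch axis: (max_len, 1, dim).
    return pe[:, None, :]


if __name__ == "__main__":
    loop_pe = positional_encodings_loop(dim=6, max_len=10)
    vec_pe = positional_encodings_vectorized(dim=6, max_len=10)
    print(np.allclose(loop_pe, vec_pe))
```

The two forms should differ only by floating-point rounding, because the loop divides by the frequency term while the broadcast form multiplies by its reciprocal. A tolerance-based comparison such as `np.allclose` is therefore more appropriate than exact equality.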