cd5dfd62a1c0baeb68fdbc4eb56f66298b5d10e9,train_vidreid_xent.py,,main,#,36

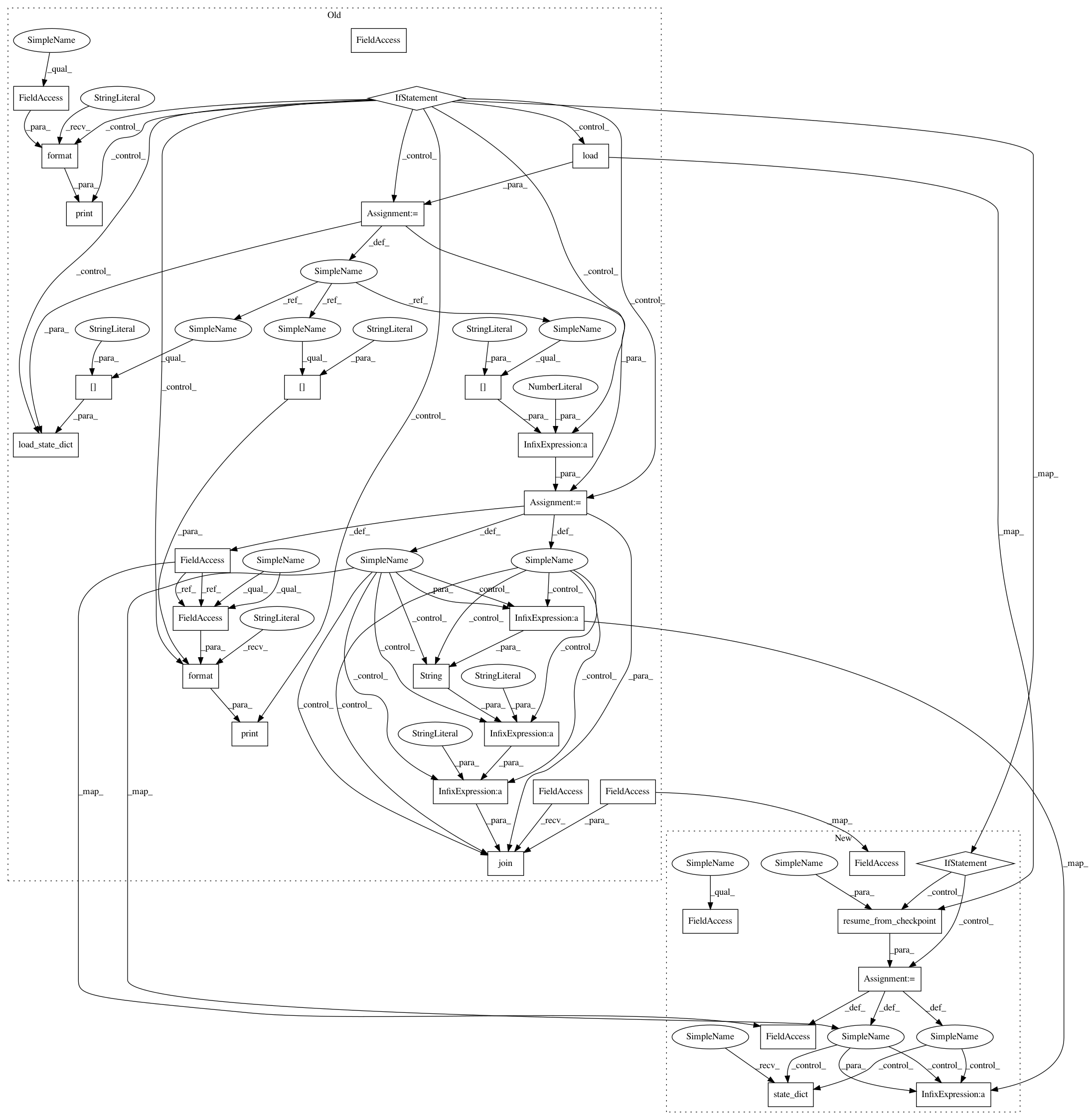

Before Change

    if args.load_weights and check_isfile(args.load_weights):
        load_pretrained_weights(model, args.load_weights)

    if args.resume and check_isfile(args.resume):
        checkpoint = torch.load(args.resume)
        model.load_state_dict(checkpoint["state_dict"])
        args.start_epoch = checkpoint["epoch"] + 1
        print('Loaded checkpoint from "{}"'.format(args.resume))
        print("- start_epoch: {}\n- rank1: {}".format(args.start_epoch, checkpoint["rank1"]))

    model = nn.DataParallel(model).cuda() if use_gpu else model

    criterion = CrossEntropyLoss(num_classes=dm.num_train_pids, use_gpu=use_gpu, label_smooth=args.label_smooth)
    optimizer = init_optimizer(model, **optimizer_kwargs(args))
    scheduler = init_lr_scheduler(optimizer, **lr_scheduler_kwargs(args))

    if args.evaluate:
        print("Evaluate only")

        for name in args.target_names:
            print("Evaluating {} ...".format(name))
            queryloader = testloader_dict[name]["query"]
            galleryloader = testloader_dict[name]["gallery"]
            distmat = test(model, queryloader, galleryloader, args.pool_tracklet_features, use_gpu, return_distmat=True)

            if args.visualize_ranks:
                visualize_ranked_results(
                    distmat, dm.return_testdataset_by_name(name),
                    save_dir=osp.join(args.save_dir, "ranked_results", name),
                    topk=20
                )
        return

    start_time = time.time()
    ranklogger = RankLogger(args.source_names, args.target_names)
    train_time = 0
    print("=> Start training")

    if args.fixbase_epoch > 0:
        print("Train {} for {} epochs while keeping other layers frozen".format(args.open_layers, args.fixbase_epoch))
        initial_optim_state = optimizer.state_dict()

        for epoch in range(args.fixbase_epoch):
            start_train_time = time.time()
            train(epoch, model, criterion, optimizer, trainloader, use_gpu, fixbase=True)
            train_time += round(time.time() - start_train_time)

        print("Done. All layers are open to train for {} epochs".format(args.max_epoch))
        optimizer.load_state_dict(initial_optim_state)

    for epoch in range(args.start_epoch, args.max_epoch):
        start_train_time = time.time()
        train(epoch, model, criterion, optimizer, trainloader, use_gpu)
        train_time += round(time.time() - start_train_time)

        scheduler.step()

        if (epoch + 1) > args.start_eval and args.eval_freq > 0 and (epoch + 1) % args.eval_freq == 0 or (epoch + 1) == args.max_epoch:
            print("=> Test")

            for name in args.target_names:
                print("Evaluating {} ...".format(name))
                queryloader = testloader_dict[name]["query"]
                galleryloader = testloader_dict[name]["gallery"]
                rank1 = test(model, queryloader, galleryloader, args.pool_tracklet_features, use_gpu)
                ranklogger.write(name, epoch + 1, rank1)

            save_checkpoint({
                "state_dict": model.state_dict(),
                "rank1": rank1,
                "epoch": epoch,
            }, False, osp.join(args.save_dir, "checkpoint_ep" + str(epoch + 1) + ".pth.tar"))

    elapsed = round(time.time() - start_time)
    elapsed = str(datetime.timedelta(seconds=elapsed))

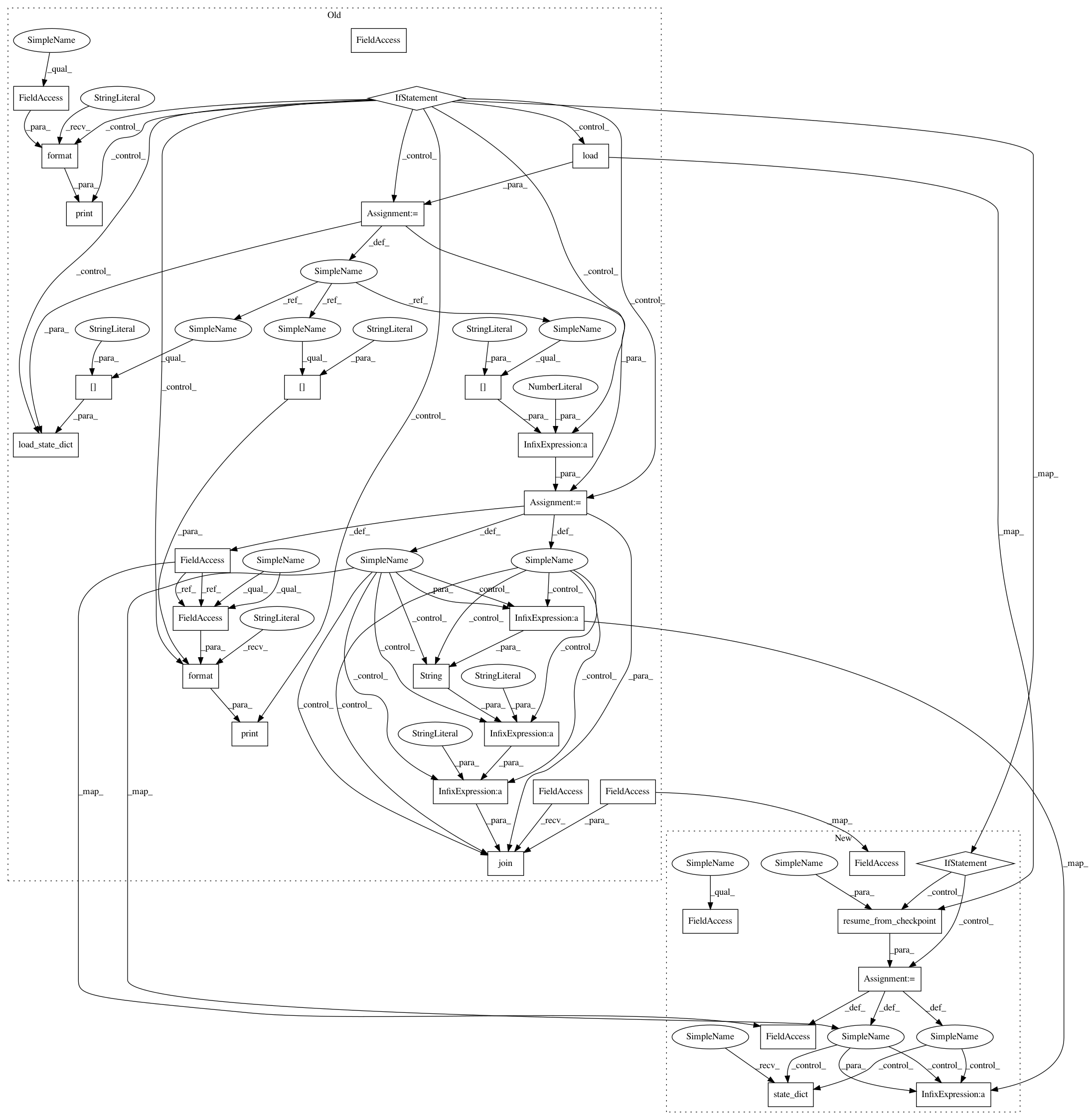

After Change

    optimizer = init_optimizer(model, **optimizer_kwargs(args))
    scheduler = init_lr_scheduler(optimizer, **lr_scheduler_kwargs(args))

    if args.resume and check_isfile(args.resume):
        args.start_epoch = resume_from_checkpoint(args.resume, model, optimizer=optimizer)

    if args.evaluate:
        print("Evaluate only")

        for name in args.target_names:
            print("Evaluating {} ...".format(name))
            queryloader = testloader_dict[name]["query"]
            galleryloader = testloader_dict[name]["gallery"]
            distmat = test(model, queryloader, galleryloader, args.pool_tracklet_features, use_gpu, return_distmat=True)

            if args.visualize_ranks:
                visualize_ranked_results(
                    distmat, dm.return_testdataset_by_name(name),
                    save_dir=osp.join(args.save_dir, "ranked_results", name),
                    topk=20
                )
        return

    start_time = time.time()
    ranklogger = RankLogger(args.source_names, args.target_names)
    train_time = 0
    print("=> Start training")

    if args.fixbase_epoch > 0:
        print("Train {} for {} epochs while keeping other layers frozen".format(args.open_layers, args.fixbase_epoch))
        initial_optim_state = optimizer.state_dict()

        for epoch in range(args.fixbase_epoch):
            start_train_time = time.time()
            train(epoch, model, criterion, optimizer, trainloader, use_gpu, fixbase=True)
            train_time += round(time.time() - start_train_time)

        print("Done. All layers are open to train for {} epochs".format(args.max_epoch))
        optimizer.load_state_dict(initial_optim_state)

    for epoch in range(args.start_epoch, args.max_epoch):
        start_train_time = time.time()
        train(epoch, model, criterion, optimizer, trainloader, use_gpu)
        train_time += round(time.time() - start_train_time)

        scheduler.step()

        if (epoch + 1) > args.start_eval and args.eval_freq > 0 and (epoch + 1) % args.eval_freq == 0 or (epoch + 1) == args.max_epoch:
            print("=> Test")

            for name in args.target_names:
                print("Evaluating {} ...".format(name))
                queryloader = testloader_dict[name]["query"]
                galleryloader = testloader_dict[name]["gallery"]
                rank1 = test(model, queryloader, galleryloader, args.pool_tracklet_features, use_gpu)
                ranklogger.write(name, epoch + 1, rank1)

            save_checkpoint({
                "state_dict": model.state_dict(),
                "rank1": rank1,
                "epoch": epoch + 1,
                "arch": args.arch,
                "optimizer": optimizer.state_dict(),
            }, args.save_dir)

    elapsed = round(time.time() - start_time)
    elapsed = str(datetime.timedelta(seconds=elapsed))

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 32

Instances

Project Name: KaiyangZhou/deep-person-reid

Commit Name: cd5dfd62a1c0baeb68fdbc4eb56f66298b5d10e9

Time: 2019-02-20

Author: k.zhou@qmul.ac.uk

File Name: train_vidreid_xent.py

Class Name:

Method Name: main

Project Name: KaiyangZhou/deep-person-reid

Commit Name: cd5dfd62a1c0baeb68fdbc4eb56f66298b5d10e9

Time: 2019-02-20

Author: k.zhou@qmul.ac.uk

File Name: train_imgreid_xent.py

Class Name:

Method Name: main

Project Name: KaiyangZhou/deep-person-reid

Commit Name: cd5dfd62a1c0baeb68fdbc4eb56f66298b5d10e9

Time: 2019-02-20

Author: k.zhou@qmul.ac.uk

File Name: train_imgreid_xent_htri.py

Class Name:

Method Name: main
