86fe58ebb8a046215c2a558217a7a836b254a1a8,python/dgl/nn/pytorch/softmax.py,EdgeSoftmax,backward,#Any#Any#,60

Before Change

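```python
import dgl.function as fn

# Excerpt from EdgeSoftmax.backward; g, out and grad_out come from the enclosing method (not shown).
# Clear the backward cache explicitly.
ctx.backward_cache = None
g.edata["out"] = out
# Per-edge product of the softmax output and the incoming gradient.
g.edata["grad_s"] = out * grad_out
# Sum grad_s over the incoming edges of each destination node into ndata["accum"].
g.update_all(fn.copy_e("grad_s", "m"), fn.sum("m", "accum"))
# Overwrite edata["out"] with out times the destination node's accum.
g.apply_edges(fn.e_mul_v("out", "accum", "out"))
# grad_score = out * grad_out - out * sum(out * grad_out) per destination node.
grad_score = g.edata["grad_s"] - g.edata["out"]
return None, grad_score, None
```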
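The Before Change steps compute the softmax gradient per edge as `grad_s - out * accum[dst]`, where `grad_s = out * grad_out` and `accum` is the sum of `grad_s` over the incoming edges of each destination node. A framework-free sketch of the same steps follows. It assumes the graph is given only as a list of destination node ids per edge; that representation and the function names are hypothetical, not DGL's API.

```python
import math


def edge_softmax(dst, score, n_nodes):
    """Softmax of edge scores over the incoming edges of each destination node."""
    # Per-node max for numerical stability (analogous to copy_e + max).
    smax = [-math.inf] * n_nodes
    for d, s in zip(dst, score):
        smax[d] = max(smax[d], s)
    exp = [math.exp(s - smax[d]) for d, s in zip(dst, score)]
    # Per-node sum of exponentials (analogous to copy_e + sum).
    total = [0.0] * n_nodes
    for d, e in zip(dst, exp):
        total[d] += e
    return [e / total[d] for d, e in zip(dst, exp)]


def edge_softmax_backward(dst, out, grad_out, n_nodes):
    """Gradient w.r.t. the edge scores, mirroring the Before Change steps."""
    # g.edata["grad_s"] = out * grad_out
    grad_s = [y * g for y, g in zip(out, grad_out)]
    # update_all(copy_e("grad_s", "m"), sum("m", "accum")): sum per destination node.
    accum = [0.0] * n_nodes
    for d, gs in zip(dst, grad_s):
        accum[d] += gs
    # apply_edges(e_mul_v("out", "accum", "out")): scale by the destination's accum.
    out_times_accum = [y * accum[d] for d, y in zip(dst, out)]
    # grad_score = grad_s - out * accum
    return [gs - oa for gs, oa in zip(grad_s, out_times_accum)]
```

With `grad_out` set to all ones the gradient is zero, because the outputs on each node's incoming edges sum to one.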

After Change

```python
grad_score = sds - sds * sds_sum  # multiple expressions

return grad_score.data

n_nodes, n_edges, gidx = ctx.backward_cache

out, = ctx.saved_tensors

#g.edata["grad_s"] = out * grad_out
```
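The After Change fragments read the graph structure from `ctx.backward_cache` and the output from `ctx.saved_tensors`, and compute on tensors instead of writing into `g.edata`. A vectorized NumPy sketch of that stateless style follows. The `(n_nodes, dst)` cache layout and the function names are hypothetical, and `np.add.at` stands in for a sparse per-node reduction, not for any DGL kernel. The subtracted term here is `out` times the gathered per-node sum, matching the Before Change arithmetic.

```python
import numpy as np


def edge_softmax_np(dst, score, n_nodes):
    """Edge softmax grouped by destination node (dst: int array, one entry per edge)."""
    smax = np.full(n_nodes, -np.inf)
    np.maximum.at(smax, dst, score)      # per-node max, unbuffered scatter
    e = np.exp(score - smax[dst])        # gather node max back to edges
    total = np.zeros(n_nodes)
    np.add.at(total, dst, e)             # per-node sum of exponentials
    return e / total[dst]


def edge_softmax_backward_np(backward_cache, saved_tensors, grad_out):
    """Stateless backward: no graph object is mutated."""
    n_nodes, dst = backward_cache        # hypothetical cache layout
    (out,) = saved_tensors
    sds = out * grad_out                 # per-edge out * grad_out
    sds_sum = np.zeros(n_nodes)
    np.add.at(sds_sum, dst, sds)         # sum over each node's incoming edges
    return sds - out * sds_sum[dst]      # gather node sums back to edges
```

`np.add.at` is used instead of `sds_sum[dst] += sds` because in-place addition through a fancy index does not accumulate repeated indices.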

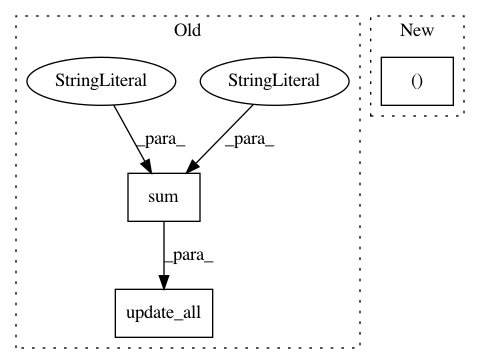

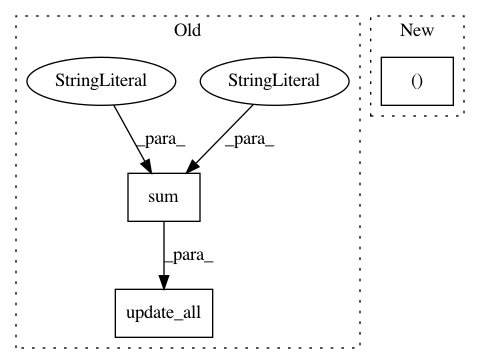

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

| Project Name | Commit Name | Time | Author | File Name | Class Name | Method Name |
|---|---|---|---|---|---|---|
| dmlc/dgl | 86fe58ebb8a046215c2a558217a7a836b254a1a8 | 2020-01-14 | coin2028@hotmail.com | python/dgl/nn/pytorch/softmax.py | EdgeSoftmax | backward |
| dmlc/dgl | 565f0c88fc5cfbcfd5476da8146e92c793af4834 | 2019-02-25 | minjie.wang@nyu.edu | examples/pytorch/gat/gat.py | GraphAttention | edge_softmax |
| dmlc/dgl | 86fe58ebb8a046215c2a558217a7a836b254a1a8 | 2020-01-14 | coin2028@hotmail.com | python/dgl/nn/pytorch/softmax.py | EdgeSoftmax | forward |