9804bad1775b284d22e7423375df4a63364548f4,mlxtend/classifier/adaline.py,Adaline,fit,#Adaline#,62

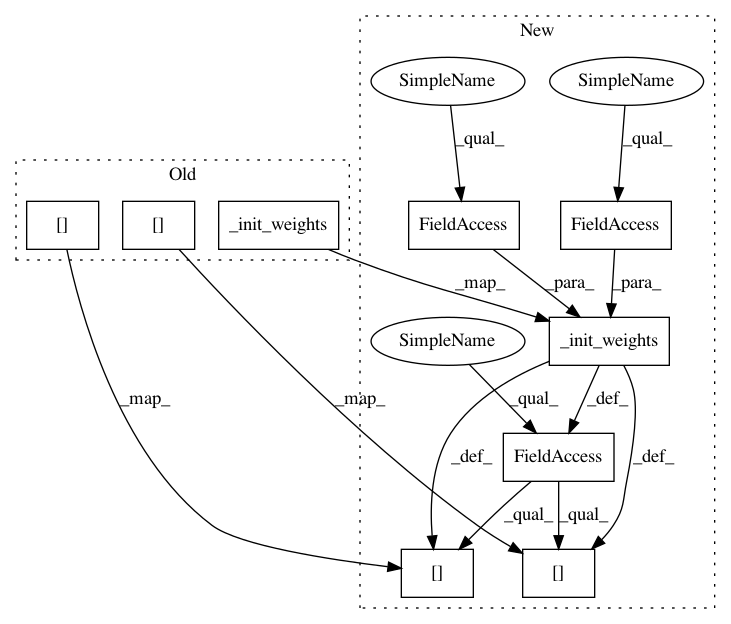

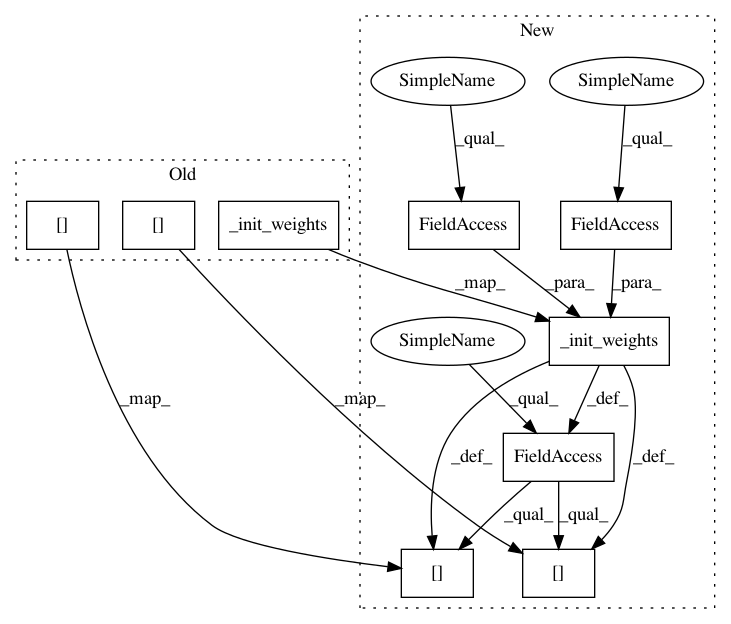

Before Change

    # initialize weights
    if init_weights:
        self._init_weights(shape=1 + X.shape[1])
    self.cost_ = []

    if self.minibatches is None:
        self.w_ = self._normal_equation(X, y)

    # Gradient descent or stochastic gradient descent learning
    else:
        n_idx = list(range(y.shape[0]))
        self.init_time_ = time()
        for i in range(self.epochs):
            if self.minibatches > 1:
                X, y = self._shuffle(X, y)
            minis = np.array_split(n_idx, self.minibatches)
            for idx in minis:
                y_val = self.activation(X[idx])
                errors = (y[idx] - y_val)
                self.w_[1:] += self.eta * X[idx].T.dot(errors)
                self.w_[0] += self.eta * errors.sum()
            cost = self._sum_squared_error_cost(y, self.activation(X))
            self.cost_.append(cost)
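The learning branch above either solves the normal equation (when `minibatches` is `None`) or runs gradient descent, reshuffling the data each epoch when there is more than one minibatch and splitting the sample indices with `np.array_split`. The following is a minimal standalone NumPy sketch of that logic, not mlxtend's code. `fit_adaline` and `sum_squared_error_cost` are hypothetical names, the cost is assumed to be half the sum of squared errors, the gradient-descent branch starts from zero weights instead of calling `_init_weights`, and `np.linalg.lstsq` stands in for `_normal_equation`.

```python
import numpy as np


def sum_squared_error_cost(y, y_val):
    # Assumed cost: half the sum of squared residuals.
    return 0.5 * np.sum((y - y_val) ** 2)


def fit_adaline(X, y, eta=0.01, epochs=50, minibatches=None, seed=None):
    """Hypothetical standalone version of the Adaline learning branch.

    Returns (w, cost) where w[0] is the bias and w[1:] the feature weights.
    """
    n_samples, n_features = X.shape
    cost = []

    if minibatches is None:
        # Closed-form least-squares fit (normal equation) on [1, X].
        Xb = np.hstack((np.ones((n_samples, 1)), X))
        w = np.linalg.lstsq(Xb, y, rcond=None)[0]
        return w, cost

    rng = np.random.default_rng(seed)
    w = np.zeros(1 + n_features)  # assumed zero start; fit() uses _init_weights
    n_idx = np.arange(n_samples)
    for _ in range(epochs):
        if minibatches > 1:
            # Reshuffle once per epoch before splitting into minibatches.
            perm = rng.permutation(n_samples)
            X, y = X[perm], y[perm]
        for idx in np.array_split(n_idx, minibatches):
            # Adaline's activation is the identity on the net input.
            y_val = X[idx].dot(w[1:]) + w[0]
            errors = y[idx] - y_val
            w[1:] += eta * X[idx].T.dot(errors)
            w[0] += eta * errors.sum()
        # Track the cost on the full training set after every epoch.
        cost.append(sum_squared_error_cost(y, X.dot(w[1:]) + w[0]))
    return w, cost


# Toy data on the line y = 2x + 1.
X = np.array([[0.0], [1.0], [2.0], [3.0]])
y = np.array([1.0, 3.0, 5.0, 7.0])

w_ne, _ = fit_adaline(X, y)  # minibatches=None: normal equation
w_gd, cost = fit_adaline(X, y, eta=0.01, epochs=2000, minibatches=1)
print(w_ne, w_gd, cost[-1])
```

With `minibatches=1` every update uses the whole training set (batch gradient descent); setting `minibatches` to the number of samples gives one update per sample, which is stochastic gradient descent.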

After Change

    self.thres_ = 0.5
    if init_weights:
        self.w_ = self._init_weights(shape=1 + X.shape[1],
                                     zero_init_weight=self.zero_init_weight,
                                     seed=self.random_seed)
    self.cost_ = []

    if self.minibatches is None:
        self.w_ = self._normal_equation(X, y)

    # Gradient descent or stochastic gradient descent learning
    else:
        n_idx = list(range(y.shape[0]))
        self.init_time_ = time()
        for i in range(self.epochs):
            if self.minibatches > 1:
                X, y = self._shuffle(X, y)
            minis = np.array_split(n_idx, self.minibatches)
            for idx in minis:
                y_val = self.activation(X[idx])
                errors = (y[idx] - y_val)
                self.w_[1:] += self.eta * X[idx].T.dot(errors)
                self.w_[0] += self.eta * errors.sum()
            cost = self._sum_squared_error_cost(y, self.activation(X))
            self.cost_.append(cost)
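The visible difference between the two versions is that `_init_weights` now returns the weight vector, which `fit` assigns to `self.w_`, and it receives `zero_init_weight` and `seed` arguments; the new code also sets `self.thres_ = 0.5`. The sketch below illustrates that pattern under stated assumptions: `init_weights` and `predict` are hypothetical helpers, the Gaussian scale of 0.01 is a guess, and using `thres_` to map outputs to 0/1 class labels is an assumption about how the threshold is consumed, not something the diff shows.

```python
import numpy as np


def init_weights(shape, zero_init_weight=False, seed=None):
    """Hypothetical stand-in for the revised _init_weights helper.

    It returns the weights rather than storing them, so the caller can
    assign them explicitly, as in ``self.w_ = self._init_weights(...)``.
    """
    if zero_init_weight:
        return np.zeros(shape)
    # Small Gaussian values; a fixed seed makes the start point reproducible.
    rng = np.random.default_rng(seed)
    return rng.normal(loc=0.0, scale=0.01, size=shape)


def predict(X, w, thres=0.5):
    # Assumed role of thres_: turn the continuous output into 0/1 labels.
    net_input = X.dot(w[1:]) + w[0]
    return np.where(net_input >= thres, 1, 0)


w_a = init_weights(3, seed=1)
w_b = init_weights(3, seed=1)
print(np.array_equal(w_a, w_b))  # same seed, same initial weights
print(predict(np.array([[0.2], [0.7]]), np.array([0.0, 1.0])))
```

Returning the array instead of assigning it inside the helper makes the data flow explicit in `fit` and keeps the helper easy to test on its own.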

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 9

Instances

Project Name: rasbt/mlxtend

Commit Name: 9804bad1775b284d22e7423375df4a63364548f4

Time: 2016-03-07

Author: mail@sebastianraschka.com

File Name: mlxtend/classifier/adaline.py

Class Name: Adaline

Method Name: fit

Project Name: rasbt/mlxtend

Commit Name: 9804bad1775b284d22e7423375df4a63364548f4

Time: 2016-03-07

Author: mail@sebastianraschka.com

File Name: mlxtend/classifier/perceptron.py

Class Name: Perceptron

Method Name: fit

Project Name: rasbt/mlxtend

Commit Name: be73e6cacf409e7b7b4a919f630e06c3d5f4af85

Time: 2016-03-16

Author: mail@sebastianraschka.com

File Name: mlxtend/classifier/adaline.py

Class Name: Adaline

Method Name: fit

Project Name: rasbt/mlxtend

Commit Name: be73e6cacf409e7b7b4a919f630e06c3d5f4af85

Time: 2016-03-16

Author: mail@sebastianraschka.com

File Name: mlxtend/classifier/perceptron.py

Class Name: Perceptron

Method Name: fit