1f8dd5463cd199ffa64ad98fe3274726ba7301bf,examples/attention_tagger.py,,main,#,138

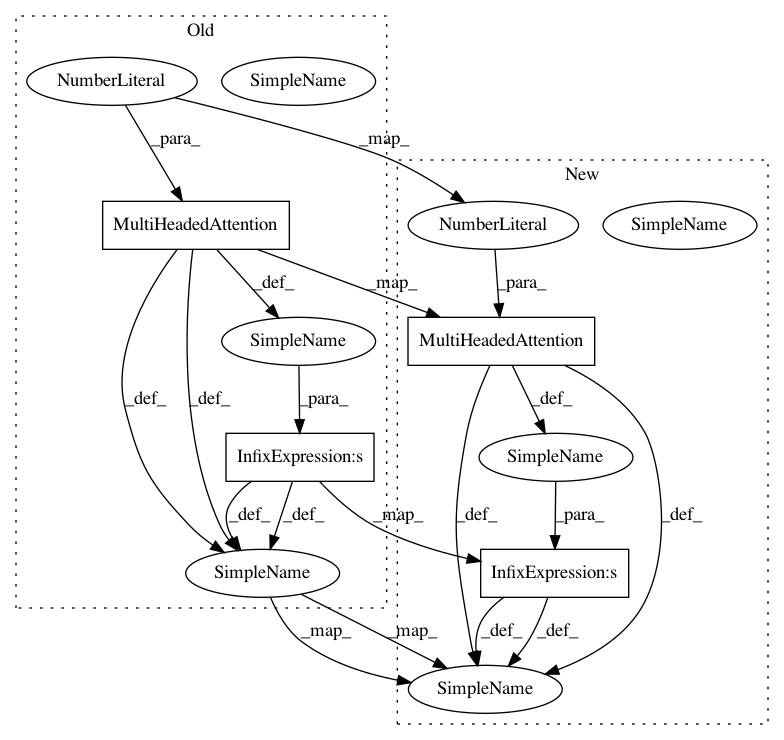

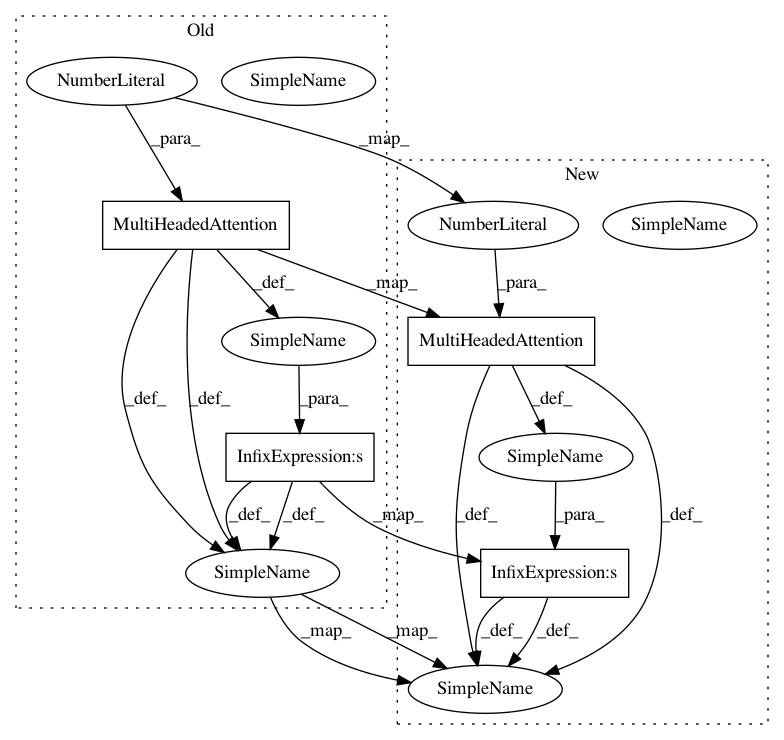

Before Change

```python
prefix = HashEmbed(width // 2, 100, column=2)
suffix = HashEmbed(width // 2, 100, column=3)
model = (
    with_flatten(
        (lower_case | shape | prefix | suffix)
        >> Maxout(width, pieces=3), pad=0)
    >> PositionEncode(1000, width)
    >> flatten_add_lengths
    >> Residual(MultiHeadedAttention(nM=width, nH=1))
    >> unflatten
    >> with_flatten(Softmax(nr_tag))
)
```
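Both model definitions are assembled from layer combinators. Assuming, as in thinc's v7 examples, that `>>` is bound to `chain` (feed one layer's output into the next) and `|` to `concatenate` (run several layers on the same input and join their outputs feature-wise), the operator semantics can be sketched with a hypothetical toy `Layer` class. This is not thinc's `Model` API and it has no backward pass; it only shows what the operators compose. Note that Python gives `>>` higher precedence than `|`, which is why the embedding concatenation is parenthesized before `>> Maxout(...)`.

```python
import numpy as np
from typing import Callable


class Layer:
    """Hypothetical minimal layer wrapping an array -> array function (forward only)."""

    def __init__(self, fn: Callable[[np.ndarray], np.ndarray], name: str = "layer"):
        self.fn = fn
        self.name = name

    def __call__(self, X: np.ndarray) -> np.ndarray:
        return self.fn(X)

    def __rshift__(self, other: "Layer") -> "Layer":
        # chain: feed this layer's output into `other`
        return Layer(lambda X: other(self(X)), f"{self.name}>>{other.name}")

    def __or__(self, other: "Layer") -> "Layer":
        # concatenate: run both layers on the same input, join outputs on the last axis
        return Layer(
            lambda X: np.concatenate([self(X), other(X)], axis=-1),
            f"{self.name}|{other.name}",
        )


double = Layer(lambda X: X * 2, "double")
negate = Layer(lambda X: -X, "negate")
total = Layer(lambda X: X.sum(axis=-1, keepdims=True), "total")

# Parentheses are required: without them, `double | negate >> total`
# would parse as `double | (negate >> total)`.
model = (double | negate) >> total

X = np.array([[1.0, 2.0]])
features = (double | negate)(X)  # concatenated features: [[2, 4, -1, -2]]
summed = model(X)                # summed features: [[3]]
```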

After Change

```python
prefix = HashEmbed(width // 2, 100, column=2)
suffix = HashEmbed(width // 2, 100, column=3)
model = (
    with_flatten(
        (lower_case | shape | prefix | suffix)
        >> Maxout(width, pieces=3))
    >> PositionEncode(1000, width)
    >> flatten_add_lengths
    >> Residual(
        get_qkv_self_attention(Affine(width*3, width), nM=width, nH=4)
        >> MultiHeadedAttention(nM=width, nH=4)
        >> with_getitem(0, Affine(width, width)))
    >> unflatten
    >> with_flatten(Softmax(nr_tag))
)
```
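The change replaces a single-head `MultiHeadedAttention(nM=width, nH=1)` with a block that projects each vector to queries, keys and values (`Affine(width*3, width)`), attends with 4 heads, and projects the attended output back with `Affine(width, width)`, all inside `Residual`. The NumPy sketch below illustrates standard scaled dot-product multi-head self-attention over a flattened `(X, lengths)` representation, which is presumably what `flatten_add_lengths` hands to the attention block, so each sentence attends only to its own tokens. It is not thinc's implementation: the function name `multihead_self_attention`, the Q/K/V split order, the `1/sqrt(d)` scaling and the `(n_out, n_in)` weight layout are assumptions chosen for clarity.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # numerically stable softmax
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def multihead_self_attention(X, lengths, W_qkv, b_qkv, W_out, b_out, nH):
    """Hypothetical sketch. X: (N, nM) rows for all sentences; lengths: rows per sentence."""
    N, nM = X.shape
    assert nM % nH == 0, "width must be divisible by the number of heads"
    d = nM // nH
    # One affine layer yields Q, K and V together (3*nM outputs),
    # playing the role of Affine(width*3, width) in the changed model.
    QKV = X @ W_qkv.T + b_qkv              # (N, 3*nM)
    Q, K, V = np.split(QKV, 3, axis=-1)    # each (N, nM); split order is assumed
    out = np.zeros_like(X)
    start = 0
    for length in lengths:
        end = start + length
        # split this sentence's vectors into nH heads of width d: (nH, length, d)
        q = Q[start:end].reshape(length, nH, d).transpose(1, 0, 2)
        k = K[start:end].reshape(length, nH, d).transpose(1, 0, 2)
        v = V[start:end].reshape(length, nH, d).transpose(1, 0, 2)
        scores = q @ k.transpose(0, 2, 1) / np.sqrt(d)   # (nH, length, length)
        heads = softmax(scores, axis=-1) @ v              # (nH, length, d)
        # merge heads back into (length, nM)
        out[start:end] = heads.transpose(1, 0, 2).reshape(length, nM)
        start = end
    # output projection, playing the role of with_getitem(0, Affine(width, width))
    return out @ W_out.T + b_out


rng = np.random.default_rng(0)
width, nH = 8, 4
lengths = [3, 5]                       # two sentences, flattened into 8 rows
X = rng.normal(size=(sum(lengths), width))
W_qkv = rng.normal(scale=0.1, size=(width * 3, width))
b_qkv = np.zeros(width * 3)
W_out = rng.normal(scale=0.1, size=(width, width))
b_out = np.zeros(width)

# Residual connection: add the attention output back onto the input.
Y = X + multihead_self_attention(X, lengths, W_qkv, b_qkv, W_out, b_out, nH)
```

Because attention is computed per segment, perturbing a token in the second sentence leaves the first sentence's outputs unchanged; passing `nH=1` to the same function computes single-head attention.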

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

| Project Name | Commit Name | Time | Author | File Name | Class Name | Method Name |
| --- | --- | --- | --- | --- | --- | --- |
| explosion/thinc | 1f8dd5463cd199ffa64ad98fe3274726ba7301bf | 2019-06-10 | honnibal+gh@gmail.com | examples/attention_tagger.py | | main |
| explosion/thinc | fdc2749cceb52addb817f22ed5531535867cd29e | 2019-06-10 | honnibal+gh@gmail.com | examples/attention_tagger.py | | main |
| explosion/thinc | e07ec603e2163f7b8b75d9953ee616d1d2f47cdd | 2019-06-09 | honnibal+gh@gmail.com | examples/attention_tagger.py | | main |