78f46822aec6cea5e216e1f7404d351835c54346,yarll/agents/sac.py,SAC,train,#SAC#,92

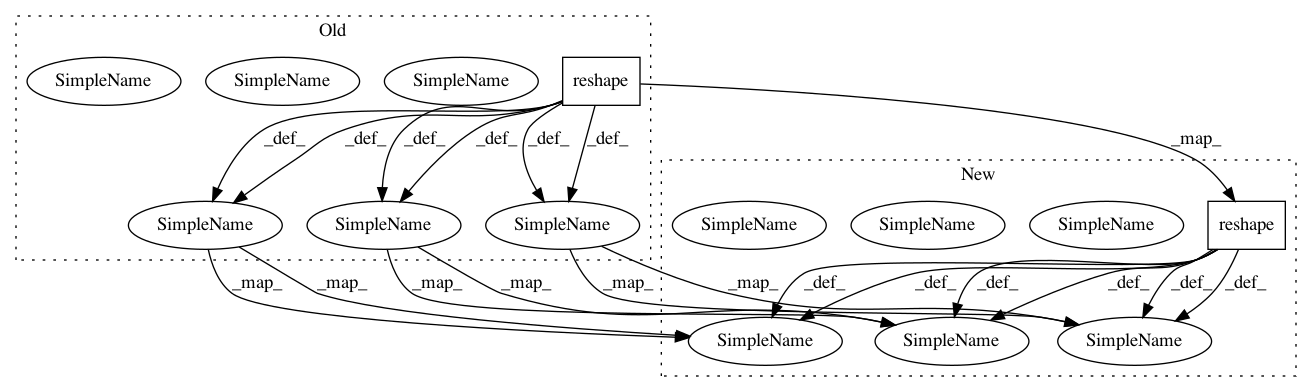

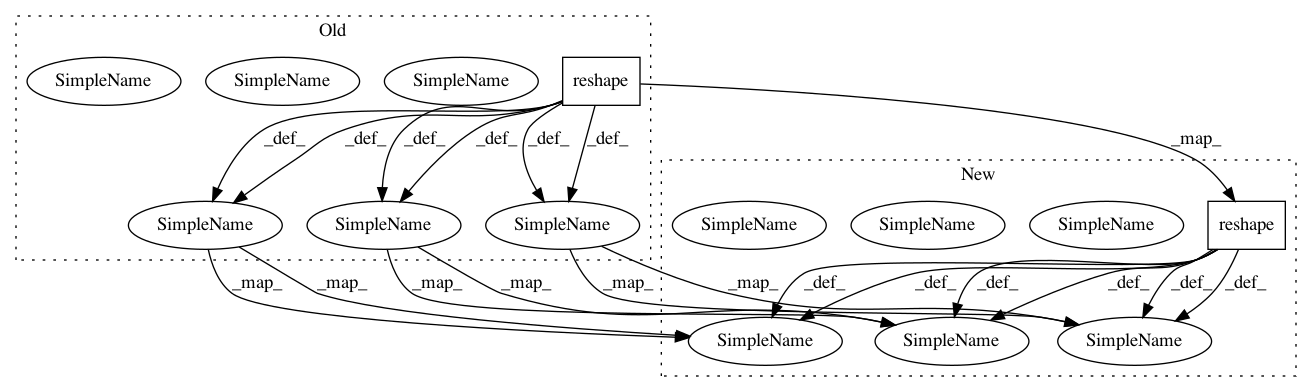

Before Change

    next_value_batch = self.target_value(state1_batch)
    softq_targets = reward_batch + (1 - terminal1_batch) * \
        self.config["gamma"] * tf.reshape(next_value_batch, [-1])
    softq_targets = tf.cast(tf.reshape(softq_targets, [self.config["batch_size"], 1]), tf.float32)

    actions, action_logprob = self.actor_network(state0_batch)
    new_softq = self.softq_network(state0_batch, actions)

    with tf.GradientTape() as softq_tape:
        softq = self.softq_network(state0_batch, action_batch)
        softq_loss = tf.reduce_mean(tf.square(softq - softq_targets))

    value_target = tf.stop_gradient(new_softq - action_logprob)
    with tf.GradientTape() as value_tape:
        values = self.value_network(state0_batch)
        value_loss = tf.reduce_mean(tf.square(values - value_target))

    advantage = tf.stop_gradient(action_logprob - new_softq + values)
    with tf.GradientTape() as actor_tape:
        _, action_logprob = self.actor_network(state0_batch)
        actor_loss = tf.reduce_mean(action_logprob * advantage)

    actor_gradients = actor_tape.gradient(actor_loss, self.actor_network.trainable_weights)
    softq_gradients = softq_tape.gradient(softq_loss, self.softq_network.trainable_weights)
    value_gradients = value_tape.gradient(value_loss, self.value_network.trainable_weights)

    self.actor_optimizer.apply_gradients(zip(actor_gradients, self.actor_network.trainable_weights))

After Change

    next_value_batch = self.target_value(state1_batch)
    softq_targets = reward_batch + (1 - terminal1_batch) * \
        self.config["gamma"] * tf.reshape(next_value_batch, [-1])
    softq_targets = tf.reshape(softq_targets, [self.config["batch_size"], 1])

    with tf.GradientTape() as softq_tape:
        softq = self.softq_network(state0_batch, action_batch)
        softq_loss = tf.reduce_mean(tf.square(softq - softq_targets))

    with tf.GradientTape() as value_tape:
        values = self.value_network(state0_batch)

    with tf.GradientTape() as actor_tape:
        actions, action_logprob = self.actor_network(state0_batch)
        new_softq = self.softq_network(state0_batch, actions)
        advantage = tf.stop_gradient(action_logprob - new_softq + values)
        actor_loss = tf.reduce_mean(action_logprob * advantage)

    value_target = tf.stop_gradient(new_softq - action_logprob)
    with value_tape:
        value_loss = tf.reduce_mean(tf.square(values - value_target))

    actor_gradients = actor_tape.gradient(actor_loss, self.actor_network.trainable_weights)
    softq_gradients = softq_tape.gradient(softq_loss, self.softq_network.trainable_weights)
    value_gradients = value_tape.gradient(value_loss, self.value_network.trainable_weights)

    self.actor_optimizer.apply_gradients(zip(actor_gradients, self.actor_network.trainable_weights))
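
The target arithmetic in the snippet above may be easier to follow with plain numbers. The following is a minimal sketch, not the project's code: it assumes Python lists of floats in place of tensors, and the helper names `soft_q_targets`, `value_targets` and `mean_squared_error` are hypothetical, introduced only for illustration.

```python
from typing import List


def soft_q_targets(rewards: List[float], terminals: List[float],
                   next_values: List[float], gamma: float) -> List[float]:
    # Mirrors: reward_batch + (1 - terminal1_batch) * gamma * next_value_batch
    # A terminal flag of 1.0 removes the bootstrapped next-state value.
    return [r + (1.0 - d) * gamma * v
            for r, d, v in zip(rewards, terminals, next_values)]


def value_targets(new_softq: List[float],
                  action_logprob: List[float]) -> List[float]:
    # Mirrors: new_softq - action_logprob (treated as a constant target,
    # which is what tf.stop_gradient expresses in the snippet).
    return [q - lp for q, lp in zip(new_softq, action_logprob)]


def mean_squared_error(pred: List[float], target: List[float]) -> float:
    # Mirrors: tf.reduce_mean(tf.square(pred - target))
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


if __name__ == "__main__":
    # Illustrative numbers only: two transitions, the second one terminal.
    targets = soft_q_targets([1.0, 2.0], [0.0, 1.0], [10.0, 10.0], gamma=0.5)
    print(targets)
    print(value_targets([3.0], [-1.0]))
    print(mean_squared_error([1.0, 3.0], [0.0, 1.0]))
```

For the terminal transition, only the reward contributes to the target, since the `(1 - terminal)` factor is zero.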

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 2

Instances

Project Name: arnomoonens/yarll

Commit Name: 78f46822aec6cea5e216e1f7404d351835c54346

Time: 2019-06-10

Author: arno.moonens@gmail.com

File Name: yarll/agents/sac.py

Class Name: SAC

Method Name: train

Project Name: pgmpy/pgmpy

Commit Name: 33a33b24185e45478c4758ac14cab8ae5234c44b

Time: 2016-06-17

Author: utkarsh.gupta550@gmail.com

File Name: pgmpy/inference/base_continuous.py

Class Name: AbstractGaussian

Method Name: get_val

Project Name: arnomoonens/yarll

Commit Name: 78f46822aec6cea5e216e1f7404d351835c54346

Time: 2019-06-10

Author: arno.moonens@gmail.com

File Name: yarll/agents/sac.py

Class Name: SAC

Method Name: train

Project Name: AIRLab-POLIMI/mushroom

Commit Name: e0416e93d7ddfec66d7efa740fa691e4bb1f1eab

Time: 2021-02-10

Author: carlo.deramo@gmail.com

File Name: mushroom_rl/algorithms/value/dqn/rainbow.py

Class Name: Rainbow

Method Name: fit

Project Name: modAL-python/modAL

Commit Name: caec2c73aad40c0e632e978964ec5c3cf4773c9e

Time: 2019-06-02

Author: theodore.danka@gmail.com

File Name: examples/pytorch_integration.py

Class Name:

Method Name: