6585887db30feab4c87f81b6ffedc3ba2dcb13bb,thinc/neural/_classes/multiheaded_attention.py,MultiHeadedAttention,_apply_attn,#MultiHeadedAttention#,398

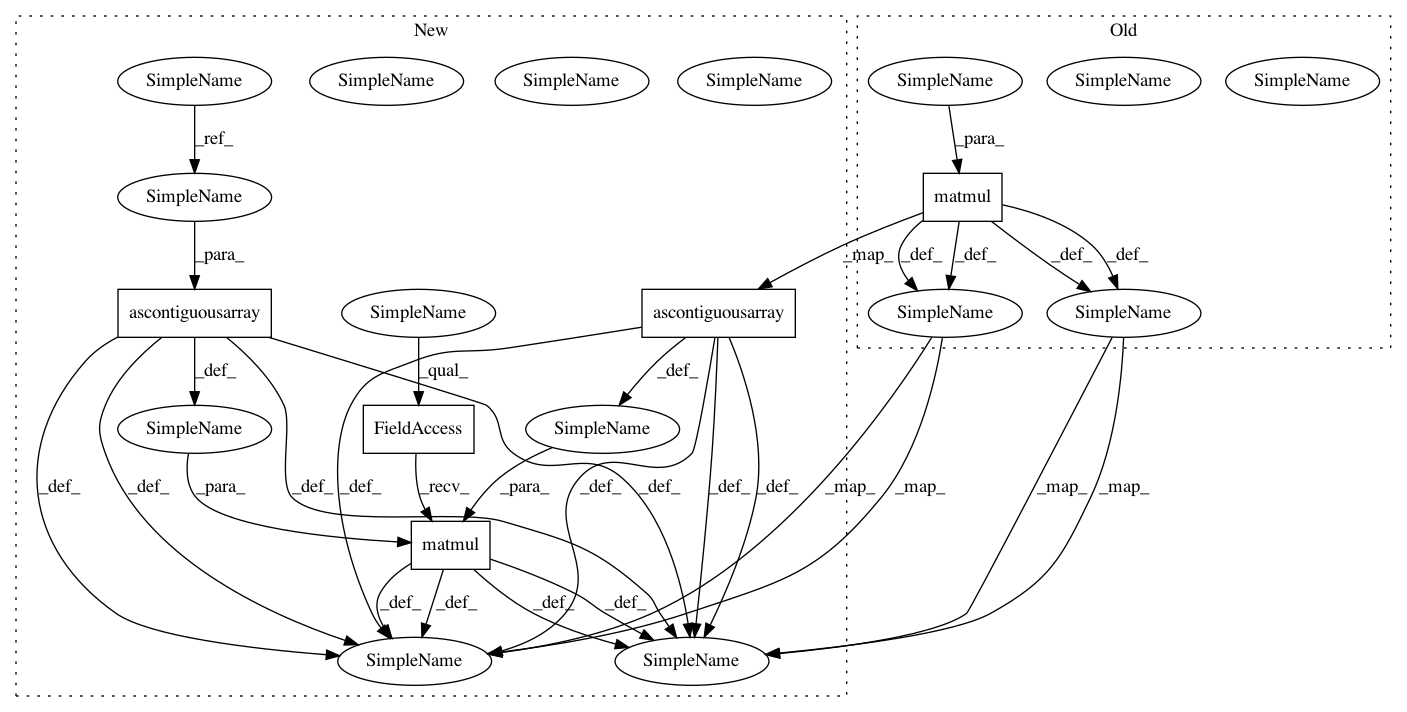

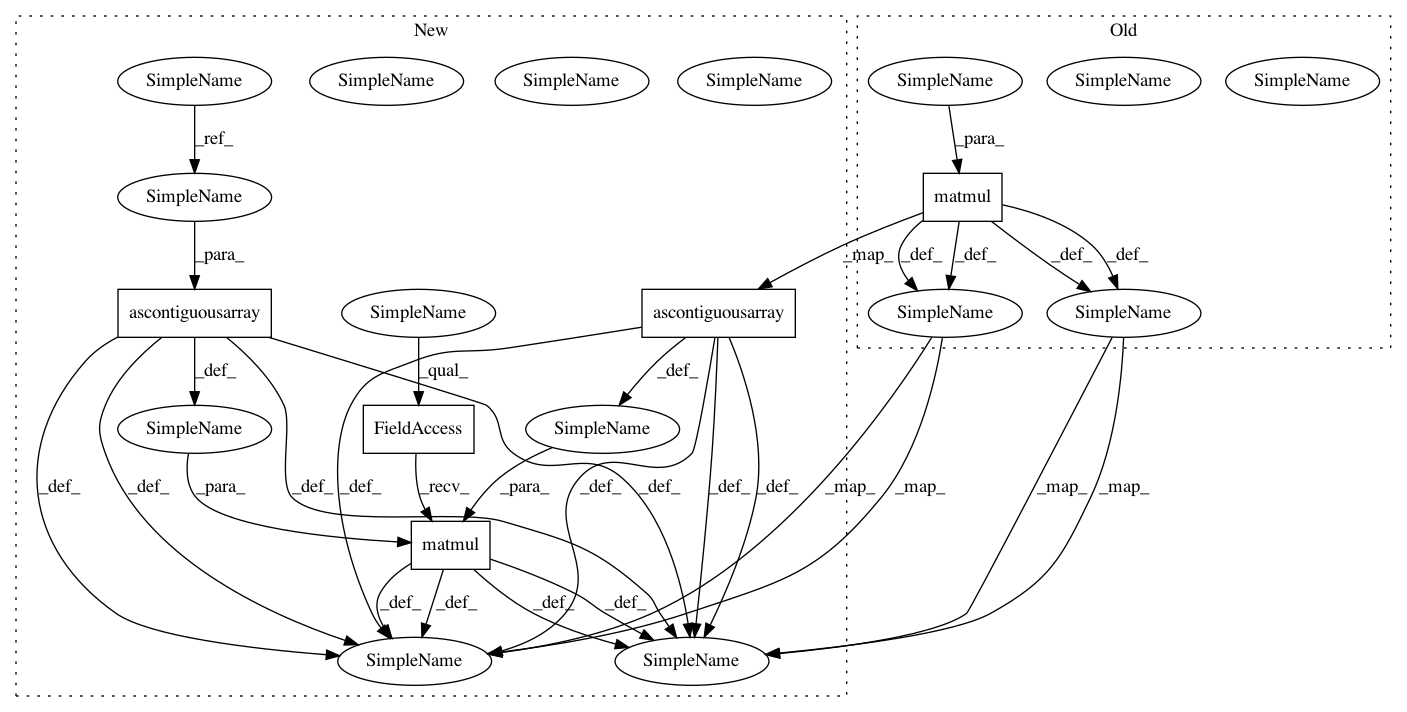

Before Change

    nD = V0.shape[-1]
    V1 = V0.reshape((nB * nH, nL, nD))
    S1 = S0.reshape((nB * nH, nL, nL))
    S2 = self.ops.xp.matmul(S1, V1)
    S3 = S2.reshape((nB, nH, nL, nD))

    def backprop_attn4(dS3):
        dS2 = dS3.reshape((nB * nH, nL, nD))
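The Before Change lines apply the attention weights `S0` to the values `V0` by folding the batch and head axes into one leading axis, so that a single batched matrix product covers every head. Below is a minimal NumPy sketch of that forward step. It assumes the shapes implied by the reshapes (`S0`: `(nB, nH, nL, nL)`, `V0`: `(nB, nH, nL, nD)`). `apply_attn_forward` is a hypothetical name, and `np.matmul` stands in for `self.ops.xp.matmul` (on CPU, `self.ops.xp` is typically `numpy`).

```python
import numpy as np


def apply_attn_forward(S0: np.ndarray, V0: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for the forward half of _apply_attn.

    S0: attention weights, shape (nB, nH, nL, nL)
    V0: values, shape (nB, nH, nL, nD)
    Returns S3 with shape (nB, nH, nL, nD).
    """
    nB, nH, nL, _ = S0.shape
    nD = V0.shape[-1]
    # Fold batch and head axes so one batched matmul handles every head.
    V1 = V0.reshape((nB * nH, nL, nD))
    S1 = S0.reshape((nB * nH, nL, nL))
    # np.matmul treats the leading axis as a batch: (nL, nL) @ (nL, nD) per item.
    S2 = np.matmul(S1, V1)
    # Unfold back to (nB, nH, nL, nD).
    return S2.reshape((nB, nH, nL, nD))


rng = np.random.default_rng(0)
S0 = rng.random((2, 3, 4, 4))
V0 = rng.random((2, 3, 4, 5))
S3 = apply_attn_forward(S0, V0)
# The folded computation should match a direct per-head contraction.
expected = np.einsum("bhij,bhjd->bhid", S0, V0)
print(S3.shape, np.allclose(S3, expected))
```

The einsum comparison checks that folding `(nB, nH)` into one axis does not mix heads. Each of the `nB * nH` slices is multiplied independently.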

After Change

    # S3: (nB, nH, nL, nD)
    nB, nH, nL, nL = S0.shape
    nD = V0.shape[-1]
    V1 = V0.reshape((nB * nH, nL, nD))
    S1 = S0.reshape((nB * nH, nL, nL))
    S2 = self.ops.matmul(
        self.ops.xp.ascontiguousarray(S1),
        self.ops.xp.ascontiguousarray(V1),
    )
    S3 = S2.reshape((nB, nH, nL, nD))

    def backprop_attn4(dS3):
        dS2 = self.ops.xp.ascontiguousarray(dS3.reshape((nB * nH, nL, nD)))
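The After Change routes the product through `self.ops.matmul` and wraps both operands in `ascontiguousarray`. It does the same to the reshaped gradient `dS2`. The record does not say why. One plausible reading, which is an assumption here, is that the ops-level matmul expects C-contiguous inputs, while `reshape` can return a strided view rather than a contiguous copy.

The sketch below uses NumPy throughout. `contiguous_matmul` and `apply_attn` are hypothetical names. `np.matmul` stands in for `self.ops.matmul`; NumPy itself accepts strided inputs, so the copies here only illustrate the pattern. The body of `backprop_attn` uses the standard gradients of a matrix product, not thinc's (truncated) `backprop_attn4`.

```python
import numpy as np


def contiguous_matmul(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    # Hypothetical helper mirroring the After Change: copy each operand into
    # C-contiguous memory (no copy if it already is) before the batched matmul.
    return np.matmul(np.ascontiguousarray(a), np.ascontiguousarray(b))


def apply_attn(S0: np.ndarray, V0: np.ndarray):
    """Forward pass S3 = S0 @ V0 per (batch, head), plus a backward callback."""
    nB, nH, nL, _ = S0.shape
    nD = V0.shape[-1]
    # reshape returns a view when the strides allow it, so V1/S1 may be non-contiguous.
    V1 = V0.reshape((nB * nH, nL, nD))
    S1 = S0.reshape((nB * nH, nL, nL))
    S3 = contiguous_matmul(S1, V1).reshape((nB, nH, nL, nD))

    def backprop_attn(dS3: np.ndarray):
        # Upstream gradients may arrive as strided views, so force a contiguous copy.
        dS2 = np.ascontiguousarray(dS3.reshape((nB * nH, nL, nD)))
        # Gradients of S2 = S1 @ V1 for each batch item:
        # dS1 = dS2 @ V1^T and dV1 = S1^T @ dS2.
        dS1 = contiguous_matmul(dS2, V1.transpose(0, 2, 1))
        dV1 = contiguous_matmul(S1.transpose(0, 2, 1), dS2)
        return dS1.reshape(S0.shape), dV1.reshape(V0.shape)

    return S3, backprop_attn


rng = np.random.default_rng(0)
S0 = rng.random((2, 3, 4, 4))
# Every other column of a wider array: shape (2, 3, 4, 5) but not C-contiguous.
V0 = rng.random((2, 3, 4, 10))[..., ::2]
# A transposed array: shape (2, 3, 4, 5) but not C-contiguous either.
dS3 = rng.random((2, 3, 5, 4)).transpose(0, 1, 3, 2)

S3, backprop = apply_attn(S0, V0)
dS0, dV0 = backprop(dS3)
print(V0.reshape((6, 4, 5)).flags["C_CONTIGUOUS"])   # False: reshape gave a strided view
print(dS3.reshape((6, 4, 5)).flags["C_CONTIGUOUS"])  # False
```

Both `V0` (a strided slice) and `dS3` (a transposed array) reshape into non-contiguous views here. That is exactly the case the `ascontiguousarray` calls guard against.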

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: explosion/thinc

Commit Name: 6585887db30feab4c87f81b6ffedc3ba2dcb13bb

Time: 2019-06-09

Author: honnibal+gh@gmail.com

File Name: thinc/neural/_classes/multiheaded_attention.py

Class Name: MultiHeadedAttention

Method Name: _apply_attn

Project Name: explosion/thinc

Commit Name: 6585887db30feab4c87f81b6ffedc3ba2dcb13bb

Time: 2019-06-09

Author: honnibal+gh@gmail.com

File Name: thinc/neural/_classes/multiheaded_attention.py

Class Name: SparseAttention

Method Name: _scaled_dot_prod

Project Name: explosion/thinc

Commit Name: 6585887db30feab4c87f81b6ffedc3ba2dcb13bb

Time: 2019-06-09

Author: honnibal+gh@gmail.com

File Name: thinc/neural/_classes/multiheaded_attention.py

Class Name: MultiHeadedAttention

Method Name: _scaled_dot_prod
