373ae159f7ae1cabaf87228d1ae0fb6acd1c6363,ch14/lib/model.py,AgentDDPG,__call__,#AgentDDPG#,131

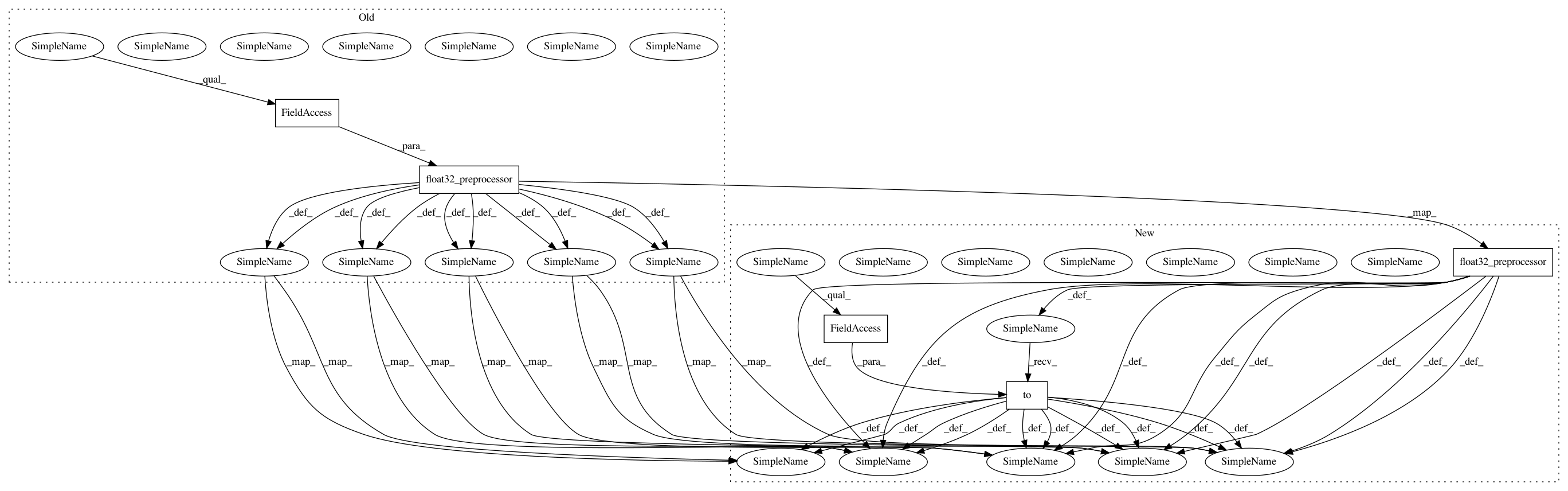

Before Change

        return None

    def __call__(self, states, agent_states):
        states_v = ptan.agent.float32_preprocessor(states, cuda=self.cuda)
        mu_v = self.net(states_v)
        actions = mu_v.data.cpu().numpy()

        if self.ou_enabled and self.ou_epsilon > 0:
            new_a_states = []
            for a_state, action in zip(agent_states, actions):
                if a_state is None:
                    a_state = np.zeros(shape=action.shape, dtype=np.float32)
                a_state += self.ou_teta * (self.ou_mu - a_state)
                a_state += self.ou_sigma * np.random.normal(size=action.shape)

                action += self.ou_epsilon * a_state
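
The snippet is cut off before the loop finishes, so how `new_a_states` is filled and returned is not shown. As a rough, standalone sketch of the same Ornstein-Uhlenbeck exploration step: the function name `ou_explore`, its default parameter values, the copying of inputs, and the final clipping to [-1, 1] are all assumptions made for illustration, not taken from the commit.

```python
import numpy as np


def ou_explore(actions, agent_states, theta=0.15, mu=0.0, sigma=0.2,
               epsilon=1.0, rng=None):
    """Add Ornstein-Uhlenbeck noise to a batch of actions (hypothetical helper).

    Returns (noisy_actions, new_agent_states); the inputs are left unmodified.
    """
    rng = rng if rng is not None else np.random.default_rng()
    noisy = np.array(actions, dtype=np.float32)  # np.array copies by default
    new_states = []
    for i, a_state in enumerate(agent_states):
        if a_state is None:
            # First step for this environment: start the noise process at zero.
            a_state = np.zeros(shape=noisy[i].shape, dtype=np.float32)
        else:
            a_state = a_state.copy()
        # Same two updates as in the snippet: pull toward mu, then add noise.
        a_state += theta * (mu - a_state)
        a_state += sigma * rng.normal(size=noisy[i].shape)
        noisy[i] += epsilon * a_state
        new_states.append(a_state)
    # Assumption: actions are bounded to [-1, 1]; the snippet does not show this.
    return np.clip(noisy, -1.0, 1.0), new_states
```

Passing the returned states back in on the next call is what keeps the noise correlated over time for each environment.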

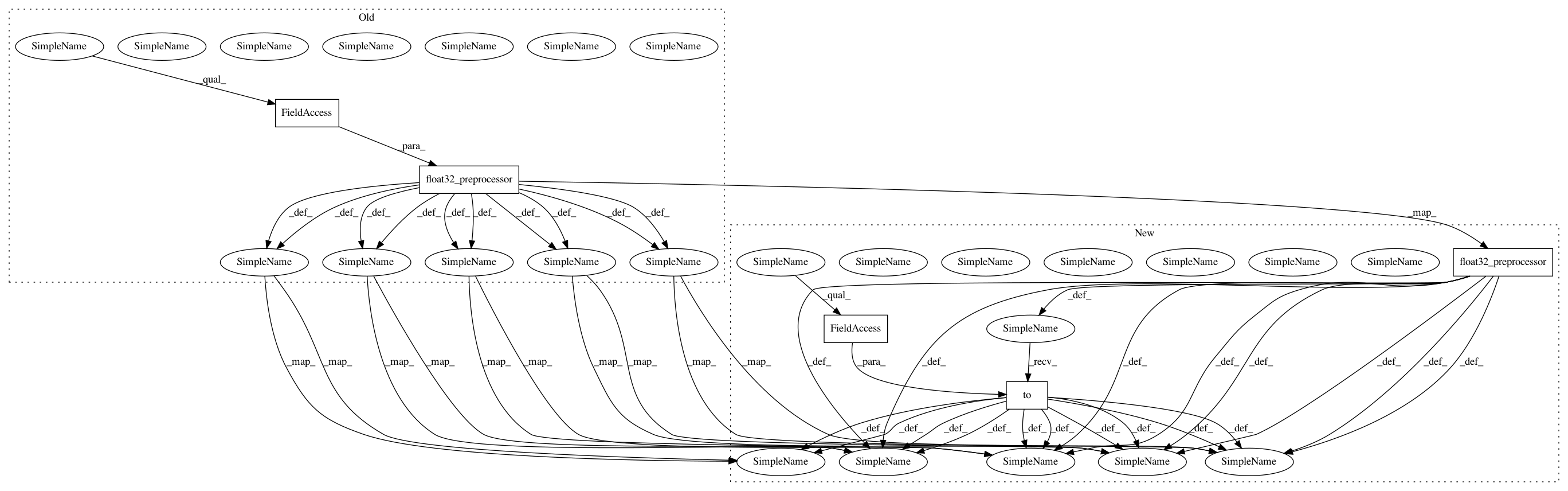

After Change

        return None

    def __call__(self, states, agent_states):
        states_v = ptan.agent.float32_preprocessor(states).to(self.device)
        mu_v = self.net(states_v)
        actions = mu_v.data.cpu().numpy()

        if self.ou_enabled and self.ou_epsilon > 0:
            new_a_states = []
            for a_state, action in zip(agent_states, actions):
                if a_state is None:
                    a_state = np.zeros(shape=action.shape, dtype=np.float32)
                a_state += self.ou_teta * (self.ou_mu - a_state)
                a_state += self.ou_sigma * np.random.normal(size=action.shape)

                action += self.ou_epsilon * a_state
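
The only line that differs between the two versions is the preprocessing call: the boolean `cuda=` argument is dropped and the tensor is moved with `.to(self.device)` instead. A minimal sketch of that device-based style follows. The local `float32_preprocessor` is a hypothetical stand-in written for this example (it is not the ptan function), the `Linear` layer is a placeholder network, and `.detach()` is used in place of `.data`.

```python
import numpy as np
import torch


def float32_preprocessor(states):
    """Hypothetical stand-in: convert a list of observations to a float32 tensor."""
    return torch.tensor(np.array(states, dtype=np.float32))


# Pick the device once; the rest of the code does not branch on CUDA.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
net = torch.nn.Linear(4, 2).to(device)  # placeholder for the actor network

states = [np.zeros(4), np.ones(4)]
states_v = float32_preprocessor(states).to(device)  # replaces the cuda= flag
mu_v = net(states_v)
actions = mu_v.detach().cpu().numpy()  # back to NumPy on the host
```

Holding a single `device` value means the same code path runs on CPU and GPU without a separate boolean being threaded through each helper.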

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: 373ae159f7ae1cabaf87228d1ae0fb6acd1c6363

Time: 2018-04-29

Author: max.lapan@gmail.com

File Name: ch14/lib/model.py

Class Name: AgentDDPG

Method Name: __call__

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: 373ae159f7ae1cabaf87228d1ae0fb6acd1c6363

Time: 2018-04-29

Author: max.lapan@gmail.com

File Name: ch14/lib/model.py

Class Name: AgentD4PG

Method Name: __call__

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: d5b0cd8e7960c247bb7c5b7c832358f8831780fb

Time: 2018-04-29

Author: max.lapan@gmail.com

File Name: ch15/lib/model.py

Class Name: AgentA2C

Method Name: __call__