84feedee2b6d0933cd6c3d7e420c369fe17c6cda,official/vision/beta/ops/spatial_transform_ops.py,,multilevel_crop_and_resize,#,136

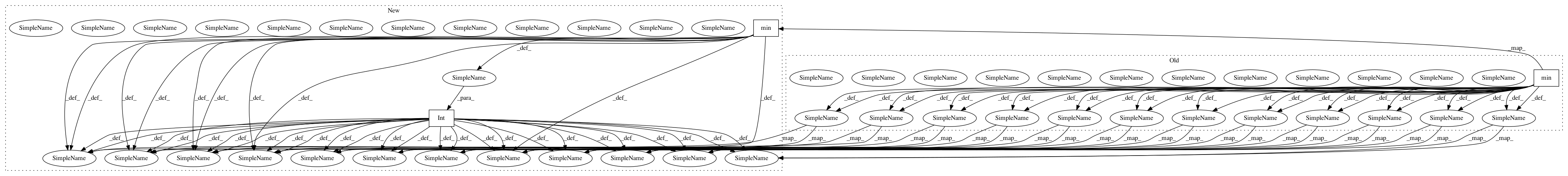

Before Change

with tf.name_scope("multilevel_crop_and_resize"):

levels = list(features.keys())

min_level = min(levels)

max_level = max(levels)

batch_size, max_feature_height, max_feature_width, num_filters = (

features[min_level].get_shape().as_list())

if batch_size is None:

batch_size = tf.shape(features[min_level])[0]

_, num_boxes, _ = boxes.get_shape().as_list()

# Stack feature pyramid into a features_all of shape

# [batch_size, levels, height, width, num_filters].

features_all = []

feature_heights = []

feature_widths = []

for level in range(min_level, max_level + 1):

shape = features[level].get_shape().as_list()

feature_heights.append(shape[1])

feature_widths.append(shape[2])

# Concat tensor of [batch_size, height_l * width_l, num_filters] for each

# level.

features_all.append(

tf.reshape(features[level], [batch_size, -1, num_filters]))

features_r2 = tf.reshape(tf.concat(features_all, 1), [-1, num_filters])

# Calculate height_l * width_l for each level.

level_dim_sizes = [

feature_widths[i] * feature_heights[i]

for i in range(len(feature_widths))

]

# level_dim_offsets is the accumulated sum of level_dim_sizes.

level_dim_offsets = [0]

for i in range(len(feature_widths) - 1):

level_dim_offsets.append(level_dim_offsets[i] + level_dim_sizes[i])

batch_dim_size = level_dim_offsets[-1] + level_dim_sizes[-1]

level_dim_offsets = tf.constant(level_dim_offsets, tf.int32)

height_dim_sizes = tf.constant(feature_widths, tf.int32)

# Assigns boxes to the right level.

box_width = boxes[:, :, 3] - boxes[:, :, 1]

box_height = boxes[:, :, 2] - boxes[:, :, 0]

areas_sqrt = tf.cast(tf.sqrt(box_height * box_width), tf.float32)

levels = tf.cast(

tf.math.floordiv(

tf.math.log(tf.divide(areas_sqrt, 224.0)),

tf.math.log(2.0)) + 4.0,

dtype=tf.int32)

# Maps levels between [min_level, max_level].

levels = tf.minimum(max_level, tf.maximum(levels, min_level))

# Projects box location and sizes to corresponding feature levels.

scale_to_level = tf.cast(

tf.pow(tf.constant(2.0), tf.cast(levels, tf.float32)),

dtype=boxes.dtype)

boxes /= tf.expand_dims(scale_to_level, axis=2)

box_width /= scale_to_level

box_height /= scale_to_level

boxes = tf.concat([boxes[:, :, 0:2],

tf.expand_dims(box_height, -1),

tf.expand_dims(box_width, -1)], axis=-1)

# Maps levels to [0, max_level-min_level].

levels -= min_level

level_strides = tf.pow([[2.0]], tf.cast(levels, tf.float32))

boundary = tf.cast(

tf.concat([

tf.expand_dims(

[[tf.cast(max_feature_height, tf.float32)]] / level_strides - 1,

axis=-1),

tf.expand_dims(

[[tf.cast(max_feature_width, tf.float32)]] / level_strides - 1,

axis=-1),

],

axis=-1), boxes.dtype)

# Compute grid positions.

kernel_y, kernel_x, box_gridy0y1, box_gridx0x1 = _compute_grid_positions(

boxes, boundary, output_size, sample_offset)

x_indices = tf.cast(

tf.reshape(box_gridx0x1, [batch_size, num_boxes, output_size * 2]),

dtype=tf.int32)

y_indices = tf.cast(

tf.reshape(box_gridy0y1, [batch_size, num_boxes, output_size * 2]),

dtype=tf.int32)

batch_size_offset = tf.tile(

tf.reshape(

tf.range(batch_size) * batch_dim_size, [batch_size, 1, 1, 1]),

[1, num_boxes, output_size * 2, output_size * 2])

# Get level offset for each box. Each box belongs to one level.

levels_offset = tf.tile(

tf.reshape(

tf.gather(level_dim_offsets, levels),

[batch_size, num_boxes, 1, 1]),

[1, 1, output_size * 2, output_size * 2])

y_indices_offset = tf.tile(

tf.reshape(

y_indices * tf.expand_dims(tf.gather(height_dim_sizes, levels), -1),

[batch_size, num_boxes, output_size * 2, 1]),

[1, 1, 1, output_size * 2])

x_indices_offset = tf.tile(

tf.reshape(x_indices, [batch_size, num_boxes, 1, output_size * 2]),

[1, 1, output_size * 2, 1])

indices = tf.reshape(

batch_size_offset + levels_offset + y_indices_offset + x_indices_offset,

[-1])

# TODO(wangtao): replace tf.gather with tf.gather_nd and try to get similar

# performance.

features_per_box = tf.reshape(

tf.gather(features_r2, indices),

[batch_size, num_boxes, output_size * 2, output_size * 2, num_filters])

# Bilinear interpolation.

features_per_box = _feature_bilinear_interpolation(

features_per_box, kernel_y, kernel_x)

return features_per_box
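
The level assignment and the flat gather index in the listing above are easier to follow on scalars. The following is a minimal pure-Python sketch, not the TensorFlow implementation: the helper names `assign_level`, `level_offsets`, and `flat_index` and the three-level pyramid are hypothetical, and boxes are assumed to have positive area. It also shows why `height_dim_sizes` is built from `feature_widths`: the multiplier applied to a `y` index is the row stride of that level, which is its width.

```python
import math


def assign_level(box, min_level, max_level):
    """Pick a pyramid level for one box given as (ymin, xmin, ymax, xmax).

    Assumes the box has positive area; math.log raises on zero.
    """
    ymin, xmin, ymax, xmax = box
    area_sqrt = math.sqrt((ymax - ymin) * (xmax - xmin))
    # floor(log2(sqrt(area) / 224)) + 4, then clamp to the available levels.
    level = math.floor(math.log(area_sqrt / 224.0) / math.log(2.0)) + 4
    return min(max_level, max(level, min_level))


def level_offsets(heights, widths):
    """Return per-level start offsets and the number of rows per batch item."""
    sizes = [h * w for h, w in zip(heights, widths)]
    offsets = [0]
    for size in sizes[:-1]:
        offsets.append(offsets[-1] + size)
    batch_dim_size = offsets[-1] + sizes[-1]
    return offsets, batch_dim_size


def flat_index(b, level_idx, y, x, offsets, widths, batch_dim_size):
    """Row in the flattened [batch * sum(h * w), num_filters] table.

    level_idx is zero-based, i.e. level - min_level.
    """
    return b * batch_dim_size + offsets[level_idx] + y * widths[level_idx] + x


# Hypothetical three-level pyramid with 8x8, 4x4 and 2x2 feature maps.
heights, widths = [8, 4, 2], [8, 4, 2]
offsets, batch_dim_size = level_offsets(heights, widths)
example_level = assign_level((0.0, 0.0, 300.0, 300.0), min_level=2, max_level=6)
example_index = flat_index(1, 1, 2, 3, offsets, widths, batch_dim_size)
```

In the listing, the same sum is formed for every sampled grid point at once by tiling `batch_size_offset`, `levels_offset`, `y_indices_offset`, and `x_indices_offset` to a common shape before a single `tf.gather`.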

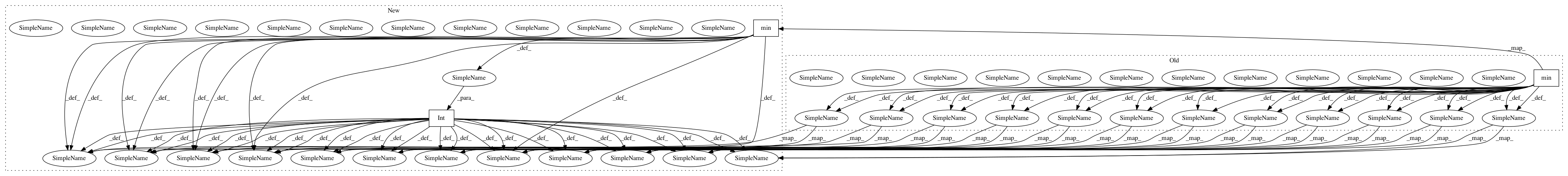

After Change

with tf.name_scope("multilevel_crop_and_resize"):

levels = list(features.keys())

min_level = int(min(levels))

max_level = int(max(levels))

batch_size, max_feature_height, max_feature_width, num_filters = (

features[str(min_level)].get_shape().as_list())

if batch_size is None:

batch_size = tf.shape(features[str(min_level)])[0]

_, num_boxes, _ = boxes.get_shape().as_list()

# Stack feature pyramid into a features_all of shape

# [batch_size, levels, height, width, num_filters].

features_all = []

feature_heights = []

feature_widths = []

for level in range(min_level, max_level + 1):

shape = features[str(level)].get_shape().as_list()

feature_heights.append(shape[1])

feature_widths.append(shape[2])

# Concat tensor of [batch_size, height_l * width_l, num_filters] for each

# level.

features_all.append(

tf.reshape(features[str(level)], [batch_size, -1, num_filters]))

features_r2 = tf.reshape(tf.concat(features_all, 1), [-1, num_filters])

# Calculate height_l * width_l for each level.

level_dim_sizes = [

feature_widths[i] * feature_heights[i]

for i in range(len(feature_widths))

]

# level_dim_offsets is the accumulated sum of level_dim_sizes.

level_dim_offsets = [0]

for i in range(len(feature_widths) - 1):

level_dim_offsets.append(level_dim_offsets[i] + level_dim_sizes[i])

batch_dim_size = level_dim_offsets[-1] + level_dim_sizes[-1]

level_dim_offsets = tf.constant(level_dim_offsets, tf.int32)

height_dim_sizes = tf.constant(feature_widths, tf.int32)

# Assigns boxes to the right level.

box_width = boxes[:, :, 3] - boxes[:, :, 1]

box_height = boxes[:, :, 2] - boxes[:, :, 0]

areas_sqrt = tf.cast(tf.sqrt(box_height * box_width), tf.float32)

levels = tf.cast(

tf.math.floordiv(

tf.math.log(tf.divide(areas_sqrt, 224.0)),

tf.math.log(2.0)) + 4.0,

dtype=tf.int32)

# Maps levels between [min_level, max_level].

levels = tf.minimum(max_level, tf.maximum(levels, min_level))

# Projects box location and sizes to corresponding feature levels.

scale_to_level = tf.cast(

tf.pow(tf.constant(2.0), tf.cast(levels, tf.float32)),

dtype=boxes.dtype)

boxes /= tf.expand_dims(scale_to_level, axis=2)

box_width /= scale_to_level

box_height /= scale_to_level

boxes = tf.concat([boxes[:, :, 0:2],

tf.expand_dims(box_height, -1),

tf.expand_dims(box_width, -1)], axis=-1)

# Maps levels to [0, max_level-min_level].

levels -= min_level

level_strides = tf.pow([[2.0]], tf.cast(levels, tf.float32))

boundary = tf.cast(

tf.concat([

tf.expand_dims(

[[tf.cast(max_feature_height, tf.float32)]] / level_strides - 1,

axis=-1),

tf.expand_dims(

[[tf.cast(max_feature_width, tf.float32)]] / level_strides - 1,

axis=-1),

],

axis=-1), boxes.dtype)

# Compute grid positions.

kernel_y, kernel_x, box_gridy0y1, box_gridx0x1 = _compute_grid_positions(

boxes, boundary, output_size, sample_offset)

x_indices = tf.cast(

tf.reshape(box_gridx0x1, [batch_size, num_boxes, output_size * 2]),

dtype=tf.int32)

y_indices = tf.cast(

tf.reshape(box_gridy0y1, [batch_size, num_boxes, output_size * 2]),

dtype=tf.int32)

batch_size_offset = tf.tile(

tf.reshape(

tf.range(batch_size) * batch_dim_size, [batch_size, 1, 1, 1]),

[1, num_boxes, output_size * 2, output_size * 2])

# Get level offset for each box. Each box belongs to one level.

levels_offset = tf.tile(

tf.reshape(

tf.gather(level_dim_offsets, levels),

[batch_size, num_boxes, 1, 1]),

[1, 1, output_size * 2, output_size * 2])

y_indices_offset = tf.tile(

tf.reshape(

y_indices * tf.expand_dims(tf.gather(height_dim_sizes, levels), -1),

[batch_size, num_boxes, output_size * 2, 1]),

[1, 1, 1, output_size * 2])

x_indices_offset = tf.tile(

tf.reshape(x_indices, [batch_size, num_boxes, 1, output_size * 2]),

[1, 1, output_size * 2, 1])

indices = tf.reshape(

batch_size_offset + levels_offset + y_indices_offset + x_indices_offset,

[-1])

# TODO(wangtao): replace tf.gather with tf.gather_nd and try to get similar

# performance.

features_per_box = tf.reshape(

tf.gather(features_r2, indices),

[batch_size, num_boxes, output_size * 2, output_size * 2, num_filters])

# Bilinear interpolation.

features_per_box = _feature_bilinear_interpolation(

features_per_box, kernel_y, kernel_x)

return features_per_box
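
The only difference between the two listings is how the `features` dict is keyed: the later version looks levels up by string (`features[str(level)]`) and converts the extremes back to integers. A minimal sketch of that access pattern follows, assuming keys such as `"2"` through `"6"`; the helper `level_range` and the placeholder values are hypothetical. The sketch converts each key to `int` before taking the minimum and maximum, whereas the listing applies `min` and `max` to the strings first. For single-digit levels the two give the same result, but string comparison is lexicographic, so they would differ if a level ever reached two digits.

```python
def level_range(features):
    """Return (min_level, max_level) for a dict keyed by level strings."""
    levels = [int(key) for key in features.keys()]
    return min(levels), max(levels)


# Placeholder values stand in for the per-level feature tensors.
features = {str(level): "features at level %d" % level for level in range(2, 7)}

min_level, max_level = level_range(features)
visited = []
for level in range(min_level, max_level + 1):
    # Integer loop variable, string key lookup, as in the listing above.
    visited.append(features[str(level)])

# Lexicographic ordering: "10" sorts before "9" when compared as strings.
string_min = int(min(["9", "10"]))
numeric_min, numeric_max = level_range({"9": None, "10": None})
```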

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: tensorflow/models

Commit Name: 84feedee2b6d0933cd6c3d7e420c369fe17c6cda

Time: 2020-09-11

Author: arashwan@google.com

File Name: official/vision/beta/ops/spatial_transform_ops.py

Class Name:

Method Name: multilevel_crop_and_resize

Project Name: tensorflow/models

Commit Name: 84feedee2b6d0933cd6c3d7e420c369fe17c6cda

Time: 2020-09-11

Author: arashwan@google.com

File Name: official/vision/beta/modeling/layers/detection_generator.py

Class Name: MultilevelDetectionGenerator

Method Name: __call__

Project Name: scikit-video/scikit-video

Commit Name: ad44f30f465a90fe878b507a8a10a0090701db13

Time: 2016-12-11

Author: tgoodall@utexas.edu

File Name: skvideo/measure/viideo.py

Class Name:

Method Name: viideo_score