dfb84aa20650e8c3a7ee46690767ee34bb630a1f,txtgen/data/database.py,PairedTextDataBase,__call__,#PairedTextDataBase#,411

Before Change

return data_provider

def __call__(self):

data = self._data_provider.get(self._data_provider.list_items())

data = dict(zip(self._data_provider.list_items(), data))

num_threads = 1

// Recommended capacity =

// (num_threads + a small safety margin) * batch_size + margin

capacity = (num_threads + 32) * self._hparams.batch_size + 1024

allow_smaller_final_batch = self._hparams.allow_smaller_final_batch

if len(self._hparams.bucket_boundaries) == 0:

data_batch = tf.train.batch(

tensors=data,

batch_size=self._hparams.batch_size,

num_threads=num_threads,

capacity=capacity,

enqueue_many=False,

dynamic_pad=True,

allow_smaller_final_batch=allow_smaller_final_batch,

name="%s/batch" % self.name)

else:

input_length = data[self._src_dataset.decoder.length_tensor_name]

_, data_batch = tf.contrib.training.bucket_by_sequence_length(

input_length=input_length,

tensors=data,

After Change

return data_provider

def __call__(self):

data = self._data_provider.get(list(self._data_provider.list_items()))

data = dict(zip(list(self._data_provider.list_items()), data))

num_threads = 1

// Recommended capacity =

// (num_threads + a small safety margin) * batch_size + margin

capacity = (num_threads + 32) * self._hparams.batch_size + 1024

allow_smaller_final_batch = self._hparams.allow_smaller_final_batch

if len(self._hparams.bucket_boundaries) == 0:

data_batch = tf.train.batch(

tensors=data,

batch_size=self._hparams.batch_size,

num_threads=num_threads,

capacity=capacity,

enqueue_many=False,

dynamic_pad=True,

allow_smaller_final_batch=allow_smaller_final_batch,

name="%s/batch" % self.name)

else:

input_length = data[self._src_dataset.decoder.length_tensor_name]

_, data_batch = tf.contrib.training.bucket_by_sequence_length(

input_length=input_length,

tensors=data,

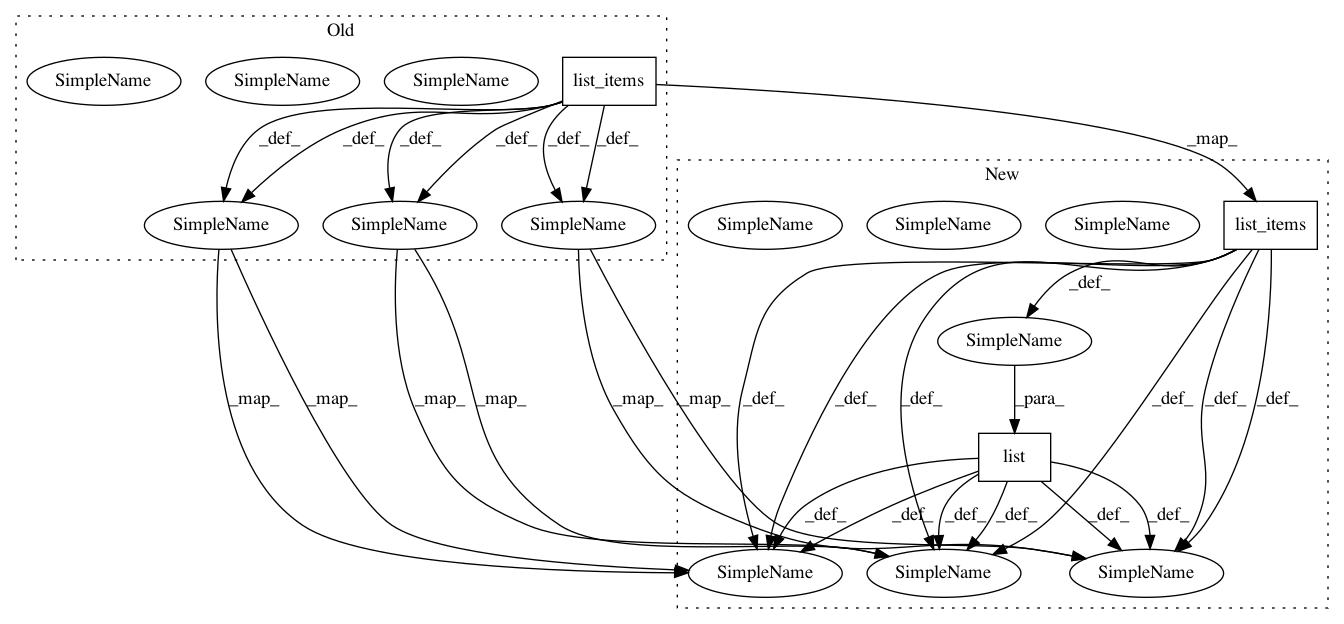

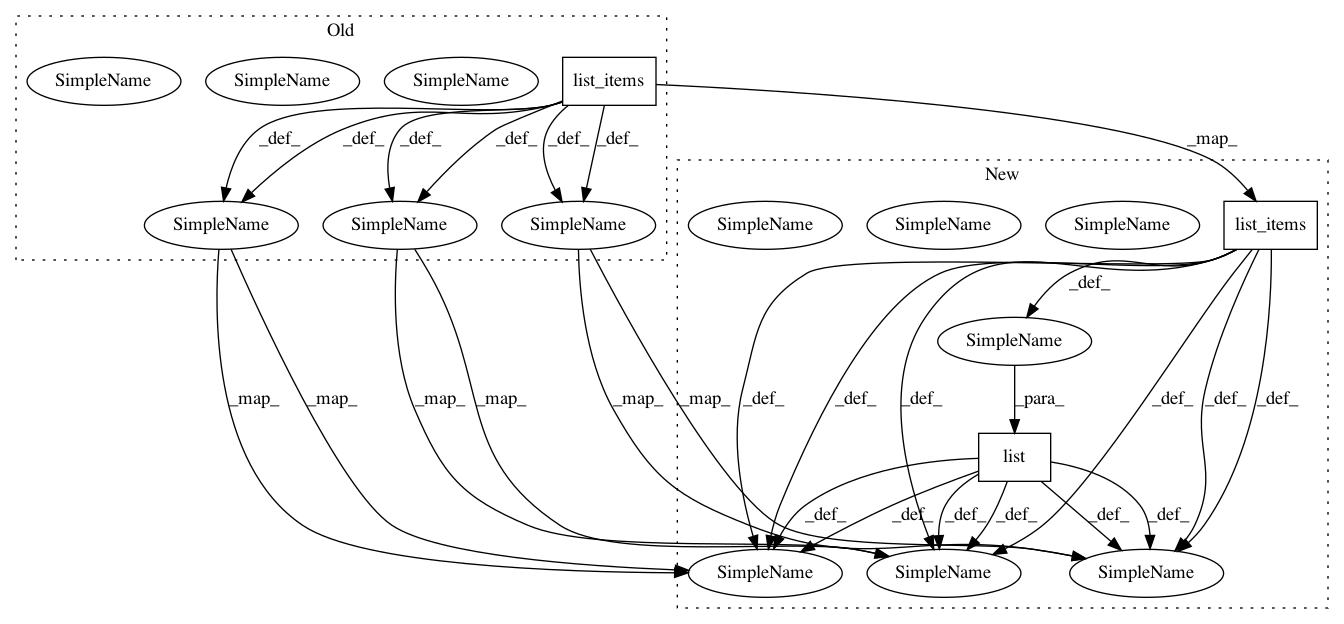

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: asyml/texar

Commit Name: dfb84aa20650e8c3a7ee46690767ee34bb630a1f

Time: 2017-11-08

Author: shore@pku.edu.cn

File Name: txtgen/data/database.py

Class Name: PairedTextDataBase

Method Name: __call__

Project Name: asyml/texar

Commit Name: 4337dec1bcbc32fa9d7a6a255280ee98bee8d158

Time: 2017-10-15

Author: diwang@cs.cmu.edu

File Name: txtgen/data/database.py

Class Name: MonoTextDataBase

Method Name: __call__