ff9c1dac887643e464f5f829c7d8b920b0aa8140,rllib/agents/ddpg/ddpg_torch_policy.py,,ddpg_actor_critic_loss,#,30

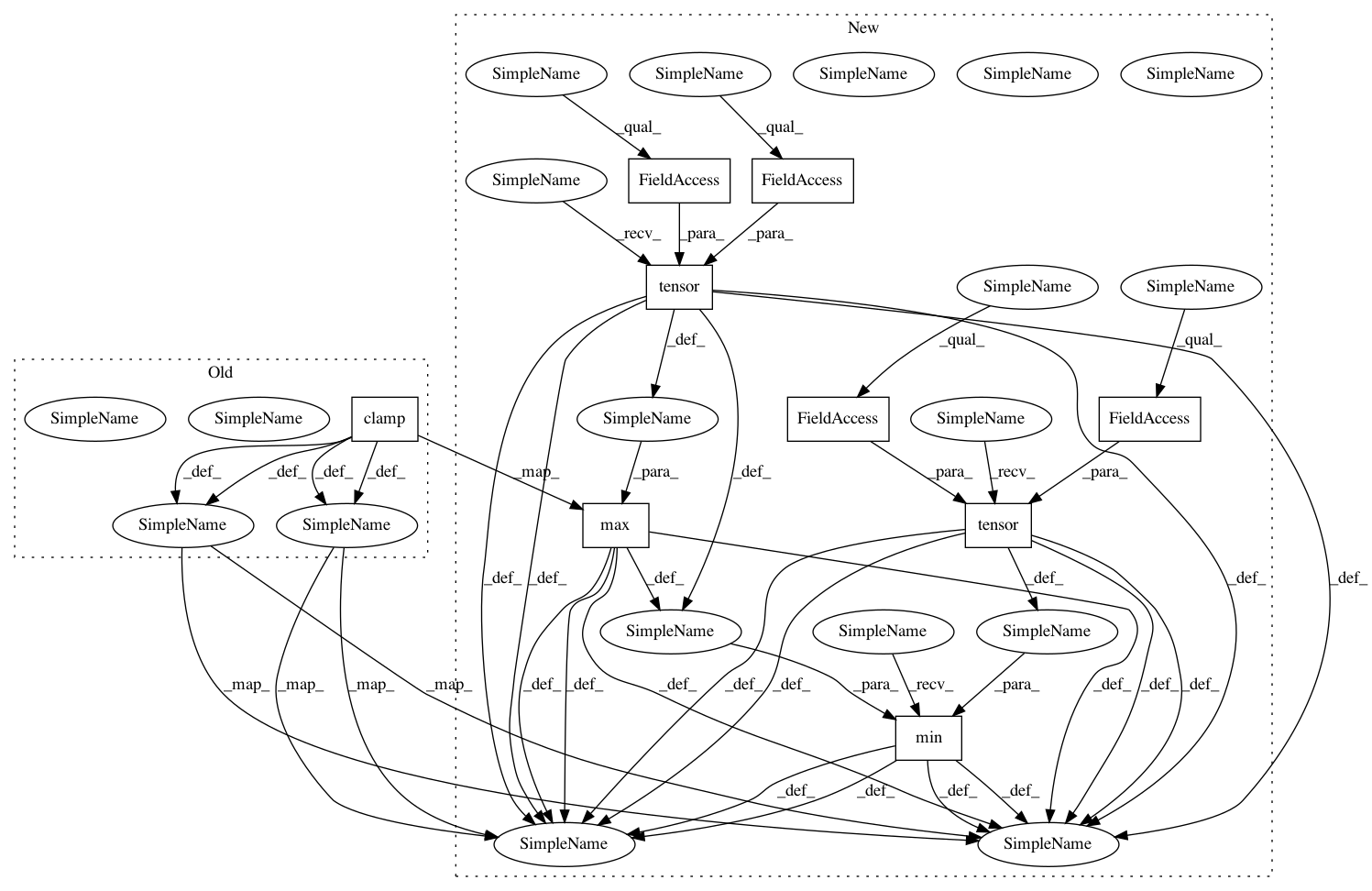

Before Change

mean=torch.zeros(policy_tp1.size()),

std=policy.config["target_noise"]), -target_noise_clip,

target_noise_clip)

policy_tp1_smoothed = torch.clamp(policy_tp1 + clipped_normal_sample,

policy.action_space.low.item(0),

policy.action_space.high.item(0))

else:

// No smoothing, just use deterministic actions.

policy_tp1_smoothed = policy_tp1

// Q-net(s) evaluation.

// prev_update_ops = set(tf1.get_collection(tf.GraphKeys.UPDATE_OPS))

// Q-values for given actions & observations in given current

q_t = model.get_q_values(model_out_t, train_batch[SampleBatch.ACTIONS])

// Q-values for current policy (no noise) in given current state

q_t_det_policy = model.get_q_values(model_out_t, policy_t)

actor_loss = -torch.mean(q_t_det_policy)

if twin_q:

twin_q_t = model.get_twin_q_values(model_out_t,

train_batch[SampleBatch.ACTIONS])

// q_batchnorm_update_ops = list(

// set(tf1.get_collection(tf.GraphKeys.UPDATE_OPS)) - prev_update_ops)

// Target q-net(s) evaluation.

q_tp1 = policy.target_model.get_q_values(target_model_out_tp1,

policy_tp1_smoothed)

if twin_q:

twin_q_tp1 = policy.target_model.get_twin_q_values(

target_model_out_tp1, policy_tp1_smoothed)

q_t_selected = torch.squeeze(q_t, axis=len(q_t.shape) - 1)

if twin_q:

twin_q_t_selected = torch.squeeze(twin_q_t, axis=len(q_t.shape) - 1)

q_tp1 = torch.min(q_tp1, twin_q_tp1)

q_tp1_best = torch.squeeze(input=q_tp1, axis=len(q_tp1.shape) - 1)

q_tp1_best_masked = \

(1.0 - train_batch[SampleBatch.DONES].float()) * \

q_tp1_best

// Compute RHS of bellman equation.

q_t_selected_target = (train_batch[SampleBatch.REWARDS] +

gamma**n_step * q_tp1_best_masked).detach()

// Compute the error (potentially clipped).

if twin_q:

td_error = q_t_selected - q_t_selected_target

twin_td_error = twin_q_t_selected - q_t_selected_target

td_error = td_error + twin_td_error

if use_huber:

errors = huber_loss(td_error, huber_threshold) \

+ huber_loss(twin_td_error, huber_threshold)

else:

errors = 0.5 * \

(torch.pow(td_error, 2.0) + torch.pow(twin_td_error, 2.0))

else:

td_error = q_t_selected - q_t_selected_target

if use_huber:

errors = huber_loss(td_error, huber_threshold)

else:

errors = 0.5 * torch.pow(td_error, 2.0)

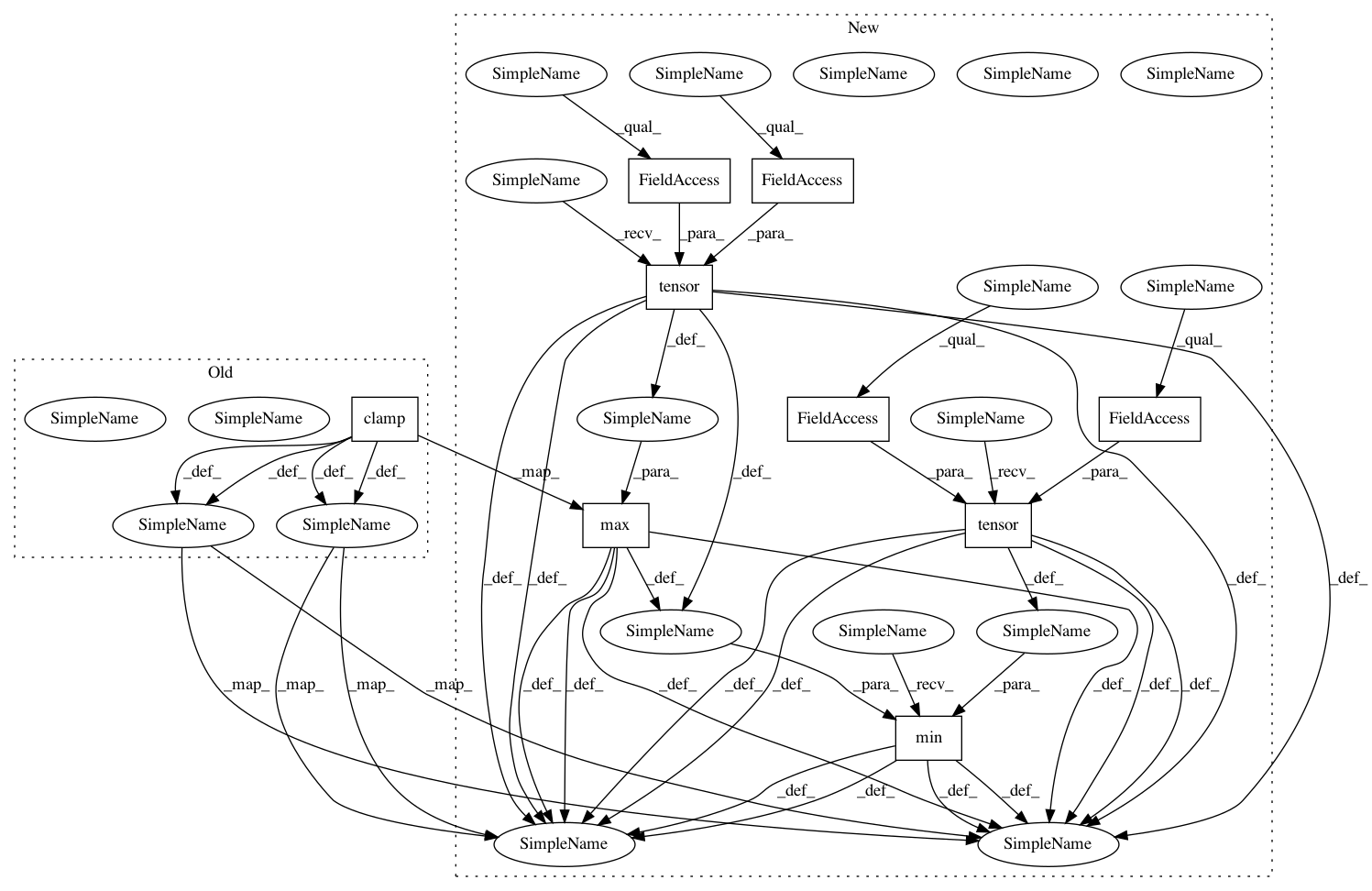

After Change

-target_noise_clip,

target_noise_clip)

policy_tp1_smoothed = torch.min(

torch.max(

policy_tp1 + clipped_normal_sample,

torch.tensor(

policy.action_space.low,

dtype=torch.float32,

device=policy_tp1.device)),

torch.tensor(

policy.action_space.high,

dtype=torch.float32,

device=policy_tp1.device))

else:

// No smoothing, just use deterministic actions.

policy_tp1_smoothed = policy_tp1

// Q-net(s) evaluation.

// prev_update_ops = set(tf1.get_collection(tf.GraphKeys.UPDATE_OPS))

// Q-values for given actions & observations in given current

q_t = model.get_q_values(model_out_t, train_batch[SampleBatch.ACTIONS])

// Q-values for current policy (no noise) in given current state

q_t_det_policy = model.get_q_values(model_out_t, policy_t)

actor_loss = -torch.mean(q_t_det_policy)

if twin_q:

twin_q_t = model.get_twin_q_values(model_out_t,

train_batch[SampleBatch.ACTIONS])

// q_batchnorm_update_ops = list(

// set(tf1.get_collection(tf.GraphKeys.UPDATE_OPS)) - prev_update_ops)

// Target q-net(s) evaluation.

q_tp1 = policy.target_model.get_q_values(target_model_out_tp1,

policy_tp1_smoothed)

if twin_q:

twin_q_tp1 = policy.target_model.get_twin_q_values(

target_model_out_tp1, policy_tp1_smoothed)

q_t_selected = torch.squeeze(q_t, axis=len(q_t.shape) - 1)

if twin_q:

twin_q_t_selected = torch.squeeze(twin_q_t, axis=len(q_t.shape) - 1)

q_tp1 = torch.min(q_tp1, twin_q_tp1)

q_tp1_best = torch.squeeze(input=q_tp1, axis=len(q_tp1.shape) - 1)

q_tp1_best_masked = \

(1.0 - train_batch[SampleBatch.DONES].float()) * \

q_tp1_best

// Compute RHS of bellman equation.

q_t_selected_target = (train_batch[SampleBatch.REWARDS] +

gamma**n_step * q_tp1_best_masked).detach()

// Compute the error (potentially clipped).

if twin_q:

td_error = q_t_selected - q_t_selected_target

twin_td_error = twin_q_t_selected - q_t_selected_target

td_error = td_error + twin_td_error

if use_huber:

errors = huber_loss(td_error, huber_threshold) \

+ huber_loss(twin_td_error, huber_threshold)

else:

errors = 0.5 * \

(torch.pow(td_error, 2.0) + torch.pow(twin_td_error, 2.0))

else:

td_error = q_t_selected - q_t_selected_target

if use_huber:

errors = huber_loss(td_error, huber_threshold)

else:

errors = 0.5 * torch.pow(td_error, 2.0)

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 9

Instances

Project Name: ray-project/ray

Commit Name: ff9c1dac887643e464f5f829c7d8b920b0aa8140

Time: 2020-07-28

Author: sven@anyscale.io

File Name: rllib/agents/ddpg/ddpg_torch_policy.py

Class Name:

Method Name: ddpg_actor_critic_loss

Project Name: ray-project/ray

Commit Name: ff9c1dac887643e464f5f829c7d8b920b0aa8140

Time: 2020-07-28

Author: sven@anyscale.io

File Name: rllib/agents/ddpg/ddpg_torch_policy.py

Class Name:

Method Name: ddpg_actor_critic_loss

Project Name: ray-project/ray

Commit Name: ff9c1dac887643e464f5f829c7d8b920b0aa8140

Time: 2020-07-28

Author: sven@anyscale.io

File Name: rllib/utils/exploration/gaussian_noise.py

Class Name: GaussianNoise

Method Name: _get_torch_exploration_action

Project Name: ray-project/ray

Commit Name: ff9c1dac887643e464f5f829c7d8b920b0aa8140

Time: 2020-07-28

Author: sven@anyscale.io

File Name: rllib/utils/exploration/ornstein_uhlenbeck_noise.py

Class Name: OrnsteinUhlenbeckNoise

Method Name: _get_torch_exploration_action