3c874575bf40e8b1fa2280371131a8f29ebb3e98,src/gluonnlp/models/mobilebert.py,MobileBertModel,hybrid_forward,#MobileBertModel#Any#Any#Any#Any#,484

Before Change

embedding = self.get_initial_embedding(F, inputs, token_types)
contextual_embeddings, additional_outputs = self.encoder(embedding, valid_length)
outputs = []
outputs.append(contextual_embeddings)
if self.use_pooler:
    pooled_out = self.apply_pooling(contextual_embeddings)
    outputs.append(pooled_out)
return tuple(outputs) if len(outputs) > 1 else outputs[0]

def get_initial_embedding(self, F, inputs, token_types=None, trigram_embed=True):
    Get the initial token embeddings that considers the token type and positional embeddings
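The "Before Change" version collects its results in a list and returns a bare tensor when the pooler is disabled, or a tuple when it is enabled. A minimal sketch of that return convention follows; `pack_outputs` is a hypothetical helper, and plain NumPy arrays stand in for the MXNet tensors used in gluon-nlp.

```python
from typing import Optional, Tuple, Union

import numpy as np


def pack_outputs(contextual: np.ndarray,
                 pooled: Optional[np.ndarray]) -> Union[np.ndarray, Tuple[np.ndarray, ...]]:
    # Hypothetical helper mirroring the "Before Change" convention:
    # gather outputs in a list, then unwrap it if it holds a single item.
    outputs = [contextual]
    if pooled is not None:  # plays the role of `self.use_pooler`
        outputs.append(pooled)
    return tuple(outputs) if len(outputs) > 1 else outputs[0]


contextual = np.zeros((2, 4, 3))  # (batch, time, units)
print(type(pack_outputs(contextual, None)).__name__)
print(len(pack_outputs(contextual, np.zeros((2, 3)))))
```

With this convention, the caller has to know whether the pooler is enabled in order to unpack the result correctly.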

After Change

embedding = self.get_initial_embedding(F, inputs, token_types)
if self._compute_layout != self._layout:
    contextual_embeddings, additional_outputs = self.encoder(F.np.swapaxes(embedding, 0, 1),
                                                             valid_length)
    contextual_embeddings = F.np.swapaxes(contextual_embeddings, 0, 1)
else:
    contextual_embeddings, additional_outputs = self.encoder(embedding, valid_length)
if self.use_pooler:
    pooled_out = self.apply_pooling(contextual_embeddings)
    return contextual_embeddings, pooled_out
else:

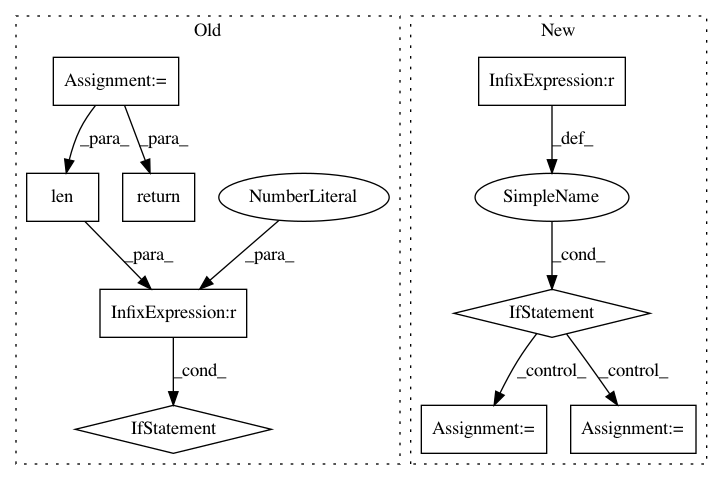

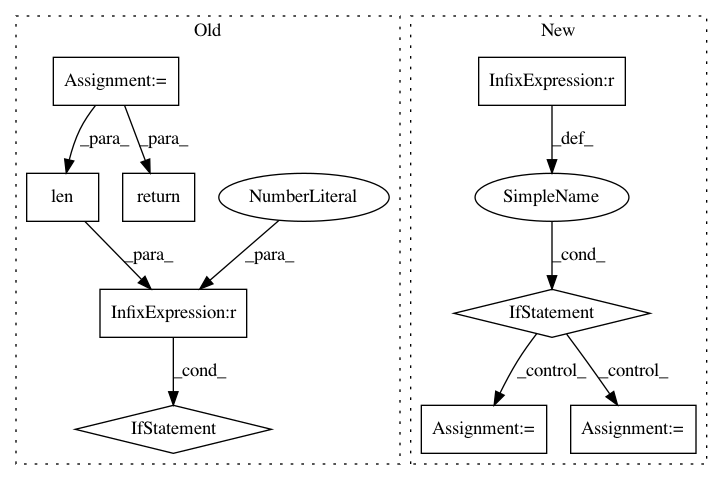
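The "After Change" version lets the encoder run in a compute layout that may differ from the model's input layout. It swaps the first two axes with `swapaxes` before the encoder call and swaps them back afterwards. The following is a minimal NumPy sketch of that idea under several assumptions: `"NT"` is taken to mean (batch, time, units) and `"TN"` to mean (time, batch, units). `masked_encoder` and `encode` are hypothetical stand-ins for `self.encoder` and the surrounding code, not gluon-nlp's API, and `np.swapaxes` replaces MXNet's `F.np.swapaxes`.

```python
import numpy as np


def masked_encoder(x: np.ndarray, valid_length: np.ndarray, layout: str) -> np.ndarray:
    # Hypothetical stand-in for `self.encoder`: zeroes out padded time steps,
    # so the result depends on interpreting the layout correctly.
    time_axis = 1 if layout == "NT" else 0
    num_steps = x.shape[time_axis]
    mask = np.arange(num_steps)[None, :] < valid_length[:, None]  # (N, T)
    if layout == "TN":
        mask = mask.T  # (T, N) to match time-major input
    return x * mask[:, :, None]


def encode(embedding: np.ndarray, valid_length: np.ndarray,
           layout: str = "NT", compute_layout: str = "TN") -> np.ndarray:
    if compute_layout != layout:
        # Convert to the encoder's compute layout, run it, then convert back
        # so the caller always receives data in `layout`.
        out = masked_encoder(np.swapaxes(embedding, 0, 1), valid_length, compute_layout)
        return np.swapaxes(out, 0, 1)
    return masked_encoder(embedding, valid_length, layout)


emb = np.ones((2, 4, 3))      # batch-major: (batch=2, time=4, units=3)
lengths = np.array([2, 3])
via_tn = encode(emb, lengths, layout="NT", compute_layout="TN")
via_nt = encode(emb, lengths, layout="NT", compute_layout="NT")
print(via_tn.shape, np.array_equal(via_tn, via_nt))
```

The transpose is undone after the encoder returns, so callers get the same batch-major result whichever compute layout is selected. The layout choice only changes how the encoder sees the data internally.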

In pattern: SUPERPATTERN
Frequency: 3
Non-data size: 9

Instances

Project Name: dmlc/gluon-nlp
Commit Name: 3c874575bf40e8b1fa2280371131a8f29ebb3e98
Time: 2020-07-28
Author: xshiab@connect.ust.hk
File Name: src/gluonnlp/models/mobilebert.py
Class Name: MobileBertModel
Method Name: hybrid_forward

Project Name: keras-team/keras
Commit Name: 002a9d5d2b2c26fff63293e3007ede9ab7dee616
Time: 2015-11-02
Author: makoto.matsuyama@gmx.com
File Name: keras/layers/core.py
Class Name: Merge
Method Name: output_shape

Project Name: dmlc/gluon-nlp
Commit Name: 3c874575bf40e8b1fa2280371131a8f29ebb3e98
Time: 2020-07-28
Author: xshiab@connect.ust.hk
File Name: src/gluonnlp/models/roberta.py
Class Name: RobertaModel
Method Name: hybrid_forward