35999e65a83c8b9f67b833623a5b9ce47af7cc71,luminoth/utils/training.py,,get_learning_rate,#Any#Any#,16

Before Change

method = train_config.learning_rate_decay_method

if not method or method == "none":

return train_config.initial_learning_rate

if method not in LEARNING_RATE_DECAY_METHODS:

raise ValueError("Invalid learning_rate method "{}"".format(method))

if method == "piecewise_constant":

learning_rate = tf.train.piecewise_constant(

global_step, boundaries=[

tf.cast(train_config.learning_rate_decay, tf.int64), ],

values=[

train_config.initial_learning_rate,

train_config.initial_learning_rate * 0.1

], name="learning_rate_piecewise_constant"

)

elif method == "exponential_decay":

learning_rate = tf.train.exponential_decay(

learning_rate=train_config.initial_learning_rate,

global_step=global_step,

decay_steps=train_config.learning_rate_decay, decay_rate=0.96,

staircase=True, name="learning_rate_with_decay"

)

tf.summary.scalar("losses/learning_rate", learning_rate)

return learning_rate

After Change

lr_config = train_config.learning_rate.copy()

decay_method = lr_config.pop("decay_method", None)

if not decay_method or decay_method == "none":

return lr_config.get("value") or lr_config.get("learning_rate")

if decay_method not in LEARNING_RATE_DECAY_METHODS:

raise ValueError("Invalid learning_rate method "{}"".format(

decay_method

))

if decay_method == "piecewise_constant":

lr_config["x"] = global_step

else:

lr_config["global_step"] = global_step

// boundaries, when used, must be the same type as global_step (int64).

if "boundaries" in lr_config:

lr_config["boundaries"] = [

tf.cast(b, tf.int64) for b in lr_config["boundaries"]

]

decay_function = LEARNING_RATE_DECAY_METHODS[decay_method]

learning_rate = decay_function(

**lr_config

)

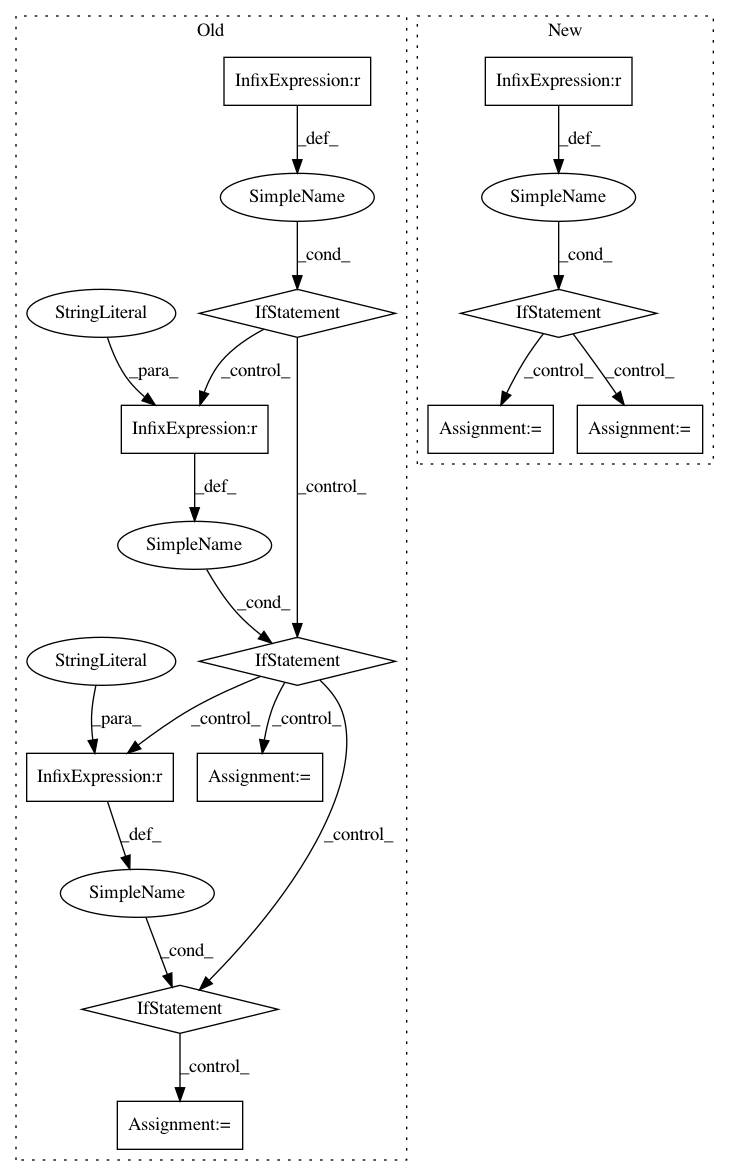

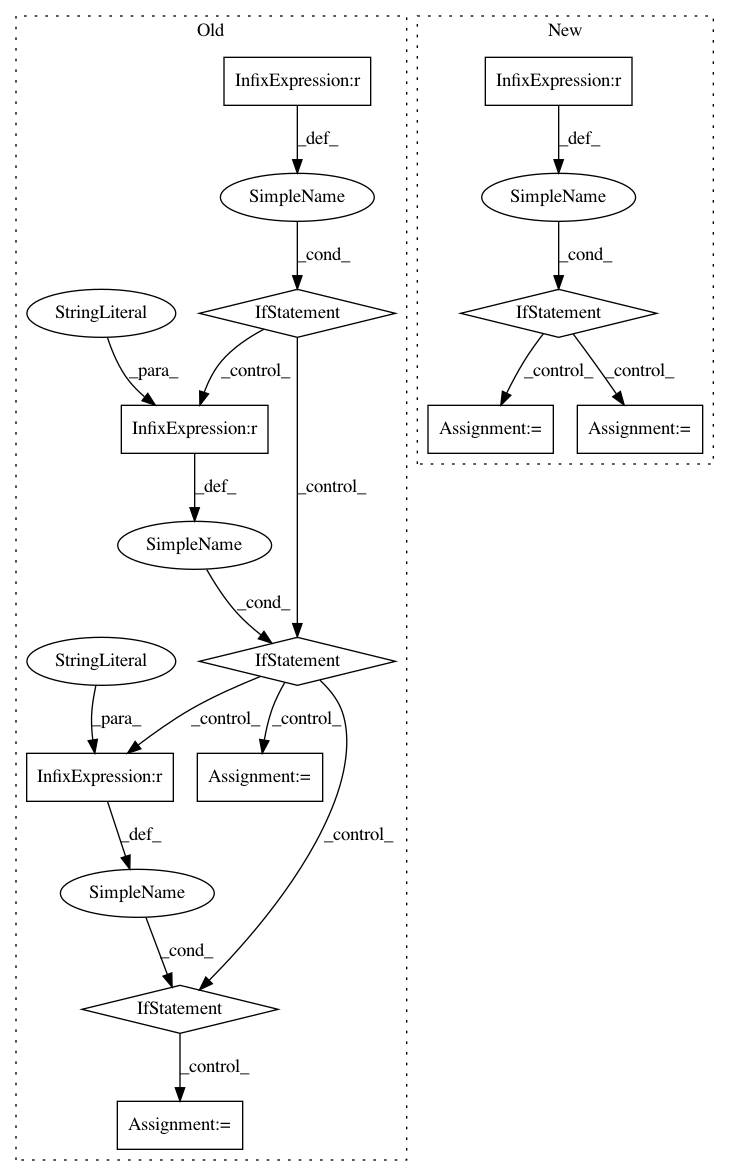

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 12

Instances

Project Name: tryolabs/luminoth

Commit Name: 35999e65a83c8b9f67b833623a5b9ce47af7cc71

Time: 2017-09-19

Author: javirey@gmail.com

File Name: luminoth/utils/training.py

Class Name:

Method Name: get_learning_rate

Project Name: keras-team/autokeras

Commit Name: 13aa31bc1a0b87d6c4b5e787c9b041ec83831c34

Time: 2020-07-31

Author: jin@tamu.edu

File Name: autokeras/blocks/reduction.py

Class Name: SpatialReduction

Method Name: build

Project Name: NifTK/NiftyNet

Commit Name: 3ec1dac0608e511d4cf28e93a3fb908bbabceac5

Time: 2017-04-26

Author: z.eaton-rosen@ucl.ac.uk

File Name: nn/loss.py

Class Name: LossFunction

Method Name: set_loss_type