ba164c0dbb3d8171004380956a88431f4e8248ba,onmt/Models.py,Embeddings,make_positional_encodings,#Embeddings#Any#Any#,51

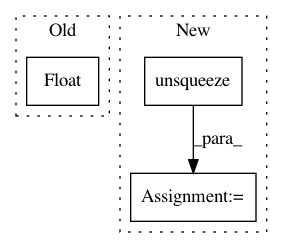

Before Change

        pe = torch.zeros(max_len, 1, dim)
        for i in range(dim):
            for j in range(max_len):
                k = float(j) / (10000.0 ** (2.0*i / float(dim) ))
                pe[j, 0, i] = math.cos(k) if i % 2 == 1 else math.sin(k)
        return pe

After Change

        return self.word_lut.embedding_dim

    def make_positional_encodings(self, dim, max_len):
        pe = torch.arange(0, max_len).unsqueeze(1).expand(max_len, dim)
        div_term = 1 / torch.pow(10000, torch.arange(0, dim * 2, 2) / dim)
        pe = pe * div_term.expand_as(pe)
        pe[:, 0::2] = torch.sin(pe[:, 0::2])
        pe[:, 1::2] = torch.cos(pe[:, 1::2])
        return pe.unsqueeze(1)

    def load_pretrained_vectors(self, emb_file):

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: OpenNMT/OpenNMT-py

Commit Name: ba164c0dbb3d8171004380956a88431f4e8248ba

Time: 2017-08-01

Author: bpeters@coli.uni-saarland.de

File Name: onmt/Models.py

Class Name: Embeddings

Method Name: make_positional_encodings

Project Name: allenai/allennlp

Commit Name: a066c15e83c261e9e677bde3ada85ff35a72a94c

Time: 2020-02-25

Author: wuzhaofeng1997@gmail.com

File Name: allennlp/models/coreference_resolution/coref.py

Class Name: CoreferenceResolver

Method Name: forward

Project Name: maciejkula/spotlight

Commit Name: 70e4d7fe60a9658bb27b9f5fb67592a1222b2ec3

Time: 2017-07-06

Author: maciej.kula@gmail.com

File Name: spotlight/sequence/representations.py

Class Name: PoolNet

Method Name: user_representation
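
The OpenNMT-py instance above replaces a nested Python loop with tensor operations. The following is a minimal sketch, not the project's code, that places both variants side by side and checks that they produce the same table. The function names `positional_encodings_loop` and `positional_encodings_vectorized` are hypothetical, and the explicit `dtype=torch.float32` arguments are an assumption added here so the arithmetic stays in floating point regardless of PyTorch version.

```python
import math

import torch


def positional_encodings_loop(dim, max_len):
    """Element-by-element version, following the 'Before Change' snippet."""
    pe = torch.zeros(max_len, 1, dim)
    for i in range(dim):
        for j in range(max_len):
            k = float(j) / (10000.0 ** (2.0 * i / float(dim)))
            # Odd feature indices get cosine, even indices get sine.
            pe[j, 0, i] = math.cos(k) if i % 2 == 1 else math.sin(k)
    return pe


def positional_encodings_vectorized(dim, max_len):
    """Tensor version, following the 'After Change' snippet."""
    # Column of positions, shape (max_len, 1).
    positions = torch.arange(0, max_len, dtype=torch.float32).unsqueeze(1)
    # Exponent 2*i/dim for every feature index i, shape (dim,).
    exponents = torch.arange(0, dim * 2, 2, dtype=torch.float32) / dim
    div_term = 1.0 / (10000.0 ** exponents)
    # Broadcasting yields the (max_len, dim) table of angles.
    angles = positions * div_term
    pe = torch.zeros(max_len, dim)
    pe[:, 0::2] = torch.sin(angles[:, 0::2])
    pe[:, 1::2] = torch.cos(angles[:, 1::2])
    # Insert the singleton batch axis: (max_len, 1, dim).
    return pe.unsqueeze(1)


loop_pe = positional_encodings_loop(dim=8, max_len=16)
vec_pe = positional_encodings_vectorized(dim=8, max_len=16)
same = torch.allclose(loop_pe, vec_pe, atol=1e-4)
```

Both functions return a tensor of shape `(max_len, 1, dim)`. The comparison uses a small tolerance because the loop evaluates each angle in double precision through `math`, while the tensor version works in single precision.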