70e4d7fe60a9658bb27b9f5fb67592a1222b2ec3,spotlight/sequence/representations.py,PoolNet,user_representation,#PoolNet#Any#,23

Before Change

sequence_embedding_sum = (sequence_embeddings

.sum(1)

.view(item_sequences.size()[0], -1))

non_padding_entries = ((item_sequences != PADDING_IDX)

.float()

.sum(1)

.expand_as(sequence_embedding_sum))

After Change

(0, 0, 1, 0))

# Average representations, ignoring padding.

sequence_embedding_sum = torch.cumsum(sequence_embeddings, 2)

non_padding_entries = (

torch.cumsum((sequence_embeddings != 0.0).float(), 2)

.expand_as(sequence_embedding_sum)

)

user_representations = (

sequence_embedding_sum / (non_padding_entries + 1)

).squeeze(3)

return user_representations[:, :, :-1], user_representations[:, :, -1]

def forward(self, user_representations, targets):
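
The difference between the two snippets can be sketched without PyTorch. The Before Change code reduces the whole sequence at once (`.sum(1)` plus a count of non-padding entries), while the After Change code uses `torch.cumsum` to produce one value per prefix of the sequence. The following is a minimal illustration only, not the project's implementation: it assumes scalar "embeddings" in a plain list, assumes the Before sum is later divided by the count (the excerpt does not show that step), and the names `pooled_mean` and `running_mean` are hypothetical.

```python
from itertools import accumulate


def pooled_mean(values, padding=0.0):
    """One average over the whole sequence, skipping padding entries."""
    kept = [v for v in values if v != padding]
    return sum(kept) / len(kept) if kept else 0.0


def running_mean(values, padding=0.0):
    """Smoothed average of the prefix ending at each position.

    Follows the shape of the After Change code: cumulative sum, cumulative
    count of non-padding entries, then sum / (count + 1).
    """
    sums = accumulate(values)
    counts = accumulate(1.0 if v != padding else 0.0 for v in values)
    return [s / (c + 1) for s, c in zip(sums, counts)]


# Hypothetical left-padded sequence of scalar "embeddings"; 0.0 marks padding.
sequence = [0.0, 0.0, 2.0, 4.0]

whole = pooled_mean(sequence)
per_step = running_mean(sequence)

# Same split as the return statement: all prefixes but the last, and the last.
prefixes, final = per_step[:-1], per_step[-1]
```

Because of the `+ 1` in the denominator, the final running value in this sketch (6 / 3) is smaller than the plain mean over the two non-padding entries (6 / 2); the same term also keeps the division defined for prefixes that contain only padding.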

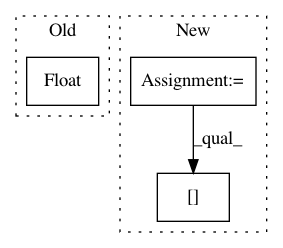

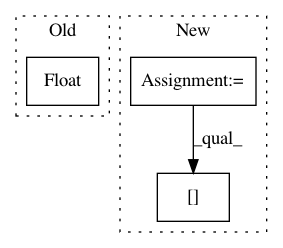

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 3

Instances

Project Name: maciejkula/spotlight

Commit Name: 70e4d7fe60a9658bb27b9f5fb67592a1222b2ec3

Time: 2017-07-06

Author: maciej.kula@gmail.com

File Name: spotlight/sequence/representations.py

Class Name: PoolNet

Method Name: user_representation

Project Name: OpenNMT/OpenNMT-py

Commit Name: ba164c0dbb3d8171004380956a88431f4e8248ba

Time: 2017-08-01

Author: bpeters@coli.uni-saarland.de

File Name: onmt/Models.py

Class Name: Embeddings

Method Name: make_positional_encodings

Project Name: AlexEMG/DeepLabCut

Commit Name: fa668630735a5c6127d711677ce40e313285c102

Time: 2018-08-17

Author: amathis@fas.harvard.edu

File Name: pose-tensorflow/nnet/pose_net.py

Class Name: PoseNet

Method Name: extract_features

Project Name: PetrochukM/PyTorch-NLP

Commit Name: e58e5a994ab596edfa81e167ac34c829b0fddbca

Time: 2019-02-26

Author: zbyte64@gmail.com

File Name: torchnlp/word_to_vector/pretrained_word_vectors.py

Class Name: _PretrainedWordVectors

Method Name: cache