2bf8adc6913f17dfe8324b1b1a63df4d2b92b9a1,scripts/language_model/large_word_language_model.py,,test,#Any#Any#Any#,268

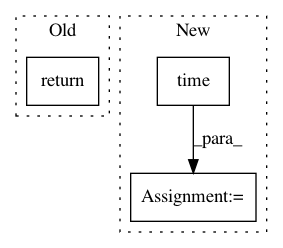

Before Change

        if max_nbatch_eval and nbatch > max_nbatch_eval:
            print("Quit evaluation early at batch %d"%nbatch)
            break
    return avg

def evaluate():
    """ Evaluate loop for the trained model """
    print(eval_model)

After Change

    ntotal = 0
    nbatch = 0
    hidden = eval_model.begin_state(batch_size=batch_size, func=mx.nd.zeros, ctx=ctx)
    start_time = time.time()
    for data, target, mask in data_stream:
        data = data.as_in_context(ctx)
        target = target.as_in_context(ctx)
        mask = mask.as_in_context(ctx)
        output, hidden = eval_model(data, hidden)
        hidden = detach(hidden)
        output = output.reshape((-3, -1))
        L = loss(output, target.reshape(-1,)) * mask.reshape((-1,))
        total_L += L.mean()
        ntotal += mask.mean()
        nbatch += 1
        avg = total_L / ntotal
        if nbatch % args.log_interval == 0:
            avg_scalar = float(avg.asscalar())
            ppl = math.exp(avg_scalar)
            throughput = batch_size*args.log_interval/(time.time()-start_time)
            print("Evaluation batch %d: test loss %.2f, test ppl %.2f, "
                  "throughput = %.2f samples/s"%(nbatch, avg_scalar, ppl, throughput))
            start_time = time.time()
        if max_nbatch_eval and nbatch > max_nbatch_eval:

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: dmlc/gluon-nlp

Commit Name: 2bf8adc6913f17dfe8324b1b1a63df4d2b92b9a1

Time: 2018-08-13

Author: linhaibin.eric@gmail.com

File Name: scripts/language_model/large_word_language_model.py

Class Name:

Method Name: test

Project Name: OpenNMT/OpenNMT-py

Commit Name: 2c3dd6f3bd666bf7aeee2786dd00bc577b08bc0e

Time: 2017-06-26

Author: srush@sum1gpu02.rc.fas.harvard.edu

File Name: onmt/Models.py

Class Name: TransformerEncoder

Method Name: forward

Project Name: keras-team/autokeras

Commit Name: b048efa4f956b80266942caea6ee8b4311e1d17a

Time: 2018-04-08

Author: jin@tamu.edu

File Name: autokeras/classifier.py

Class Name: ClassifierBase

Method Name: fit
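
The After Change loop in the gluon-nlp instance accumulates the per-batch mean of the masked loss and the per-batch mean of the mask, then divides the two to get an average loss and a perplexity. The following is a minimal pure-Python sketch of that arithmetic only, not the commit's code: `masked_perplexity` and the toy batches are hypothetical names and data introduced for illustration, and it assumes every batch has the same number of elements, in which case the ratio equals the mean loss over unmasked tokens.

```python
import math


def masked_perplexity(batches):
    """Return (average masked loss, perplexity) over (losses, mask) pairs.

    Each batch is a pair of flat lists of equal length. This helper is
    hypothetical and only mirrors the accumulation pattern in the snippet.
    """
    total_loss = 0.0
    total_mask = 0.0
    for losses, mask in batches:
        n = len(losses)
        # Per-batch mean of the masked loss, like `total_L += L.mean()`.
        total_loss += sum(l * m for l, m in zip(losses, mask)) / n
        # Per-batch mean of the mask, like `ntotal += mask.mean()`.
        total_mask += sum(mask) / n
    avg = total_loss / total_mask
    return avg, math.exp(avg)


# Toy data (assumed): padded positions carry mask 0.0 and are ignored.
batches = [
    ([2.0, 4.0, 9.0, 9.0], [1.0, 1.0, 0.0, 0.0]),
    ([3.0, 3.0, 3.0, 9.0], [1.0, 1.0, 1.0, 0.0]),
]
avg_loss, ppl = masked_perplexity(batches)
print("avg loss %.2f, ppl %.2f" % (avg_loss, ppl))
```

Here the five unmasked losses sum to 15, so the average is 3.0 and the perplexity is `math.exp(3.0)`. If batches differ in size, the ratio of means no longer matches the per-token mean, and summing the masked losses and the mask directly would be the safer choice.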