49dea39d34a562a46daa562051a66846326f66f4,rl_coach/agents/pal_agent.py,PALAgent,learn_from_batch,#PALAgent#Any#,53

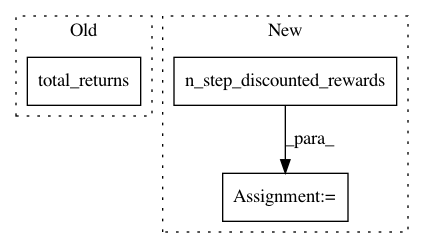

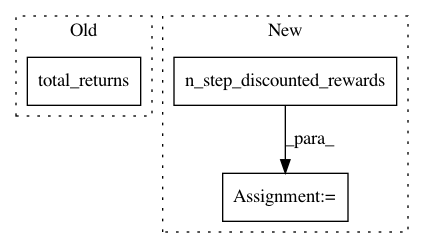

Before Change

TD_targets[i, batch.actions()[i]] -= self.alpha * advantage_learning_update

# mixing monte carlo updates
monte_carlo_target = batch.total_returns()[i]
TD_targets[i, batch.actions()[i]] = (1 - self.monte_carlo_mixing_rate) * TD_targets[i, batch.actions()[i]] \
    + self.monte_carlo_mixing_rate * monte_carlo_target
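The per-sample loop in the "Before Change" snippet applies an advantage-learning correction to the taken action's target and then mixes that target with a Monte Carlo return. The following is a minimal vectorized NumPy sketch of those two steps, not Coach's implementation. Assumptions: `pal_mixed_targets` and `discounted_returns` are hypothetical helpers; `advantage_learning_update` is taken as a precomputed per-sample array, because its definition is not shown in the snippet; and the Monte Carlo target is assumed to be the discounted return of one complete episode, standing in for `batch.total_returns()`.

```python
import numpy as np


def discounted_returns(rewards: np.ndarray, discount: float) -> np.ndarray:
    """Hypothetical helper: Monte Carlo return G_t = r_t + discount * G_{t+1},
    computed backwards over ONE complete episode (return after the last step is 0)."""
    returns = np.zeros(len(rewards), dtype=np.float64)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + discount * running
        returns[t] = running
    return returns


def pal_mixed_targets(td_targets: np.ndarray,
                      actions: np.ndarray,
                      advantage_learning_update: np.ndarray,
                      alpha: float,
                      monte_carlo_targets: np.ndarray,
                      mixing_rate: float) -> np.ndarray:
    """Hypothetical vectorized version of the per-sample loop.

    td_targets: (batch, num_actions); only the taken action's entry changes.
    advantage_learning_update: (batch,), assumed to be precomputed elsewhere.
    """
    targets = np.array(td_targets, dtype=np.float64)  # copy; input is not mutated
    rows = np.arange(targets.shape[0])
    # advantage-learning correction on the taken action only
    targets[rows, actions] -= alpha * advantage_learning_update
    # convex mix of the corrected TD target and the Monte Carlo return
    targets[rows, actions] = ((1 - mixing_rate) * targets[rows, actions]
                              + mixing_rate * monte_carlo_targets)
    return targets
```

Replacing the Python loop with integer-array indexing (`targets[rows, actions]`) touches exactly one entry per row, which matches the `TD_targets[i, batch.actions()[i]]` indexing in the snippet as long as each row index appears once.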

After Change

# calculate TD error
TD_targets = np.copy(q_st_online)
total_returns = batch.n_step_discounted_rewards()
for i in range(self.ap.network_wrappers["main"].batch_size):
    TD_targets[i, batch.actions()[i]] = batch.rewards()[i] + \
        (1.0 - batch.game_overs()[i]) * self.ap.algorithm.discount * \
        q_st_plus_1_target[i][selected_actions[i]]
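The "After Change" loop builds one-step bootstrapped TD targets: the reward plus the discounted target-network value of the next state, with the bootstrap term dropped when the episode ended. Below is a minimal vectorized sketch under stated assumptions: the helper name `one_step_td_targets` is hypothetical; Q-value arrays have shape `(batch, num_actions)` and the others `(batch,)`; and `selected_actions` is assumed to be the Double-DQN choice, the argmax of the online network's Q-values at the next state, since the snippet does not show where it comes from.

```python
import numpy as np


def one_step_td_targets(q_st_online: np.ndarray,
                        actions: np.ndarray,
                        rewards: np.ndarray,
                        game_overs: np.ndarray,
                        discount: float,
                        q_st_plus_1_target: np.ndarray,
                        selected_actions: np.ndarray) -> np.ndarray:
    """Hypothetical vectorized form of the per-sample TD-target loop."""
    # start from the online Q-values so non-taken actions keep their prediction
    targets = np.array(q_st_online, dtype=np.float64)
    rows = np.arange(targets.shape[0])
    # target-network value of the action chosen for the next state
    bootstrap = q_st_plus_1_target[rows, selected_actions]
    # (1 - game_over) removes the bootstrap term at terminal transitions
    targets[rows, actions] = rewards + (1.0 - game_overs) * discount * bootstrap
    return targets


# Assumed Double-DQN action selection at s_{t+1}, e.g.:
# selected_actions = np.argmax(q_st_plus_1_online, axis=1)
```

Copying the online Q-values first means that, under a squared-error loss, the non-taken actions contribute zero error, so only the taken action's entry drives the update.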

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: NervanaSystems/coach

Commit Name: 49dea39d34a562a46daa562051a66846326f66f4

Time: 2018-11-07

Author: gal.leibovich@intel.com

File Name: rl_coach/agents/pal_agent.py

Class Name: PALAgent

Method Name: learn_from_batch

Project Name: NervanaSystems/coach

Commit Name: 49dea39d34a562a46daa562051a66846326f66f4

Time: 2018-11-07

Author: gal.leibovich@intel.com

File Name: rl_coach/agents/clipped_ppo_agent.py

Class Name: ClippedPPOAgent

Method Name: fill_advantages

Project Name: NervanaSystems/coach

Commit Name: 49dea39d34a562a46daa562051a66846326f66f4

Time: 2018-11-07

Author: gal.leibovich@intel.com

File Name: rl_coach/agents/ppo_agent.py

Class Name: PPOAgent

Method Name: fill_advantages