428516056abe41f135133e732a8d44af6ce9a234,rllib/policy/torch_policy.py,TorchPolicy,learn_on_batch,#TorchPolicy#Any#,221

Before Change

p.grad /= self.distributed_world_size

info["allreduce_latency"] = time.time() - start

self._optimizer.step()

info.update(self.extra_grad_info(train_batch))

return {

LEARNER_STATS_KEY: info

After Change

# Erase gradients in all vars of this optimizer.

opt.zero_grad()

# Recompute gradients of loss over all variables.

loss_out[i].backward(retain_graph=(i < len(self._optimizers) - 1))

grad_info.update(self.extra_grad_process(opt, loss_out[i]))
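The per-optimizer loop in the changed code can be illustrated with a small standalone sketch. This is not RLlib's implementation: the `trunk`, `head_a`, and `head_b` modules, the two-loss setup, and the free function `learn_on_batch` are hypothetical, and calling `opt.step()` inside the loop is an assumption, since the snippet above does not show where the step happens. The point is only that every loss except the last must call `backward` with `retain_graph=True` when the losses share part of the autograd graph.

```python
import torch

torch.manual_seed(0)

# Hypothetical model: a shared trunk feeding two heads, one loss per head.
trunk = torch.nn.Linear(4, 8)
head_a = torch.nn.Linear(8, 1)
head_b = torch.nn.Linear(8, 1)

# One optimizer per loss term. The shared trunk is owned by the last
# optimizer, so no parameter used by a later backward pass is modified
# in place by an earlier step.
optimizers = [
    torch.optim.SGD(head_a.parameters(), lr=0.01),
    torch.optim.SGD(list(trunk.parameters()) + list(head_b.parameters()), lr=0.01),
]


def learn_on_batch(x, y):
    """Run one update per optimizer and return the per-loss values."""
    hidden = torch.tanh(trunk(x))  # shared part of the autograd graph
    loss_out = [
        ((head_a(hidden) - y) ** 2).mean(),
        ((head_b(hidden) - y) ** 2).mean(),
    ]
    grad_info = {}
    for i, opt in enumerate(optimizers):
        # Erase gradients in all vars of this optimizer.
        opt.zero_grad()
        # Keep the graph alive for every loss except the last one, because
        # later losses still need to backpropagate through the shared trunk.
        loss_out[i].backward(retain_graph=(i < len(optimizers) - 1))
        opt.step()  # assumed placement; not shown in the snippet above
        grad_info[f"loss_{i}"] = loss_out[i].item()
    return grad_info


batch_x = torch.randn(16, 4)
batch_y = torch.randn(16, 1)
stats = learn_on_batch(batch_x, batch_y)
```

Passing `retain_graph=False` on the final iteration lets PyTorch free the saved intermediate buffers as soon as the last backward pass finishes, which is why the flag is tied to the loop index rather than set to `True` throughout.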

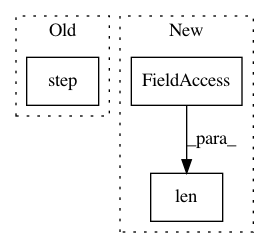

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: ray-project/ray

Commit Name: 428516056abe41f135133e732a8d44af6ce9a234

Time: 2020-04-15

Author: sven@anyscale.io

File Name: rllib/policy/torch_policy.py

Class Name: TorchPolicy

Method Name: learn_on_batch

Project Name: NervanaSystems/coach

Commit Name: 9e9c4fd3322b6e8f47572fefdb8fd65018fb96f7

Time: 2019-05-27

Author: gal.leibovich@intel.com

File Name: rl_coach/exploration_policies/e_greedy.py

Class Name: EGreedy

Method Name: get_action

Project Name: allenai/allennlp

Commit Name: 5ad7a33a04d8829ad3439b5f9390bd136105f986

Time: 2020-05-28

Author: tobiasr@allenai.org

File Name: allennlp/nn/beam_search.py

Class Name: BeamSearch

Method Name: search