4d8cca40654deb641809bf8fb376afd9b1633d52,examples/sparse_gnmt/inference.py,,inference,#Any#Any#Any#Any#Any#Any#Any#,118

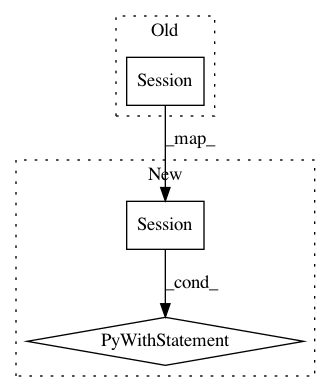

Before Change

```python
if hparams.quantize_ckpt or hparams.from_quantized_ckpt:
    model_helper.add_quatization_variables(infer_model)

# Session created without a context manager; it must be closed explicitly.
sess = tf.Session(
    graph=infer_model.graph, config=utils.get_config_proto())

with infer_model.graph.as_default():
    load_fn = model_helper.load_model if not hparams.from_quantized_ckpt \
        else model_helper.load_quantized_model

    if hparams.quantize_ckpt:
        ...  # excerpt ends here
```

After Change

```python
if hparams.quantize_ckpt or hparams.from_quantized_ckpt:
    model_helper.add_quatization_variables(infer_model)

# The session is now closed automatically when this block exits.
with tf.Session(graph=infer_model.graph, config=utils.get_config_proto()) as sess:
    with infer_model.graph.as_default():
        load_fn = model_helper.load_model if not hparams.from_quantized_ckpt \
            else model_helper.load_quantized_model

        if hparams.quantize_ckpt:
            # Load the full-precision checkpoint, quantize it, and switch to
            # loading the quantized copy saved under out_dir as "quant_<name>".
            load_fn(infer_model.model, ckpt_path, sess, "infer")
            load_fn = model_helper.load_quantized_model
            ckpt_path = os.path.join(hparams.out_dir, "quant_" + os.path.basename(ckpt_path))
            model_helper.quantize_checkpoint(sess, ckpt_path)

        loaded_infer_model = load_fn(infer_model.model, ckpt_path, sess, "infer")

    if num_workers == 1:
        single_worker_inference(
            sess,
            infer_model,
            loaded_infer_model,
            inference_input_file,
            inference_output_file,
            hparams)
    else:
        multi_worker_inference(
            sess,
            infer_model,
            loaded_infer_model,
            inference_input_file,
            inference_output_file,
            hparams,
            num_workers=num_workers,
            jobid=jobid)


def single_worker_inference(sess,
                            infer_model,
                            loaded_infer_model,
                            inference_input_file,
                            # ... (remaining parameters not shown in the excerpt)
```

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances:

Project Name: NervanaSystems/nlp-architect

Commit Name: 4d8cca40654deb641809bf8fb376afd9b1633d52

Time: 2019-03-31

Author: daniel.korat@intel.com

File Name: examples/sparse_gnmt/inference.py

Class Name:

Method Name: inference

Project Name: hanxiao/bert-as-service

Commit Name: 63cee221bf9d956f19dae264a4fdc3206471a6e8

Time: 2018-12-13

Author: hanhxiao@tencent.com

File Name: server/bert_serving/server/helper.py

Class Name:

Method Name: optimize_graph

Project Name: NifTK/NiftyNet

Commit Name: ae585178b8f190bcbf7f1433a731fa27fc32271a

Time: 2017-07-23

Author: wenqi.li@ucl.ac.uk

File Name: niftynet/engine/application_driver.py

Class Name: ApplicationDriver

Method Name: run_application
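In the nlp-architect instance, the change replaces a `tf.Session` assigned to `sess` with a `with tf.Session(...) as sess:` block, so the session is closed when the block exits, including when an exception escapes it. The sketch below illustrates that difference without TensorFlow. `FakeSession`, `run_before`, and `run_after` are hypothetical names; `FakeSession` imitates only the `close()` and context-manager behavior of `tf.Session`.

```python
# Hypothetical stand-in for tf.Session: it records whether close() ran,
# so the sketch runs without TensorFlow installed.
created = []  # every session constructed, for inspection afterwards


class FakeSession:
    def __init__(self, graph=None, config=None):
        self.graph = graph
        self.config = config
        self.closed = False
        created.append(self)

    def close(self):
        self.closed = True

    # Context-manager protocol: __exit__ always closes the session.
    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc_value, traceback):
        self.close()
        return False  # let any exception propagate


def run_before():
    # "Before Change" shape: the session is created but nothing closes it.
    sess = FakeSession(graph="graph", config="config")
    return sess


def run_after(fail=False):
    # "After Change" shape: leaving the with-block closes the session,
    # whether the body finishes normally or raises.
    with FakeSession(graph="graph", config="config") as sess:
        if fail:
            raise RuntimeError("inference failed")
    return sess


if __name__ == "__main__":
    run_before()
    print(created[-1].closed)  # False
    run_after()
    print(created[-1].closed)  # True
    try:
        run_after(fail=True)
    except RuntimeError:
        pass
    print(created[-1].closed)  # True
```

With the `with` form, releasing the session no longer depends on every code path reaching an explicit `sess.close()` call.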