bc51dbc0c56f68ed30857755026633f78eef1ae8,spotlight/layers.py,BloomEmbedding,forward,#BloomEmbedding#Any#,171

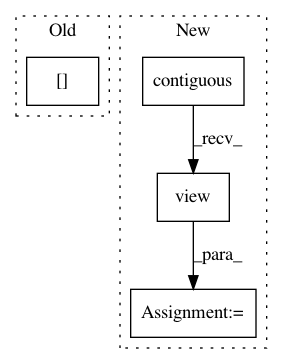

Before Change

```python
# Look up one embedding per hash mask, sum them, then average.
embedding = self.embeddings(masked_indices[:, :, 0])
for idx in range(1, len(self._masks)):
    embedding += self.embeddings(masked_indices[:, :, idx])
embedding /= len(self._masks)
```
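The loop above accumulates one embedding lookup per hash mask and divides by the number of masks. The following is a minimal sketch of that accumulate-then-divide step. It assumes `masked_indices` is an integer tensor of shape `(batch, seq, num_hashes)` whose last dimension equals `len(self._masks)`, and that `embeddings` is a plain `torch.nn.Embedding`. All sizes are hypothetical. The sketch checks that the loop gives the same result as a single lookup followed by `mean` over the hash axis.

```python
import torch

torch.manual_seed(0)

# Hypothetical sizes: a compressed table of 50 rows and 3 hash functions.
num_buckets, dim, num_hashes = 50, 8, 3
embeddings = torch.nn.Embedding(num_buckets, dim)

# Hypothetical pre-hashed indices of shape (batch, seq, num_hashes).
masked_indices = torch.randint(0, num_buckets, (4, 5, num_hashes))


def loop_average(embeddings, masked_indices):
    # Same logic as the "Before Change" excerpt: sum per-hash lookups, then divide.
    n = masked_indices.size(2)
    embedding = embeddings(masked_indices[:, :, 0])
    for idx in range(1, n):
        embedding += embeddings(masked_indices[:, :, idx])
    embedding /= n
    return embedding


def vectorized_average(embeddings, masked_indices):
    # One lookup yields (batch, seq, num_hashes, dim); average over the hash axis.
    return embeddings(masked_indices).mean(dim=2)


with torch.no_grad():
    a = loop_average(embeddings, masked_indices)
    b = vectorized_average(embeddings, masked_indices)

print(a.shape)  # torch.Size([4, 5, 8])
print(torch.allclose(a, b, atol=1e-6))
```

The vectorized form issues a single embedding call instead of one call per hash function. It does materialize the full `(batch, seq, num_hashes, dim)` tensor before reducing it.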

After Change

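```python
batch_size, seq_size = indices.size(0), 1
# Make the underlying buffer contiguous so that .view() can reshape it.
if not indices.is_contiguous():
    indices = indices.contiguous()
# Flatten to a column vector of shape (batch_size * seq_size, 1).
indices = indices.data.view(batch_size * seq_size, 1)
# Broadcast the column against `masks` and multiply element-wise.
torch.mul(
    indices.expand_as(masks),
    masks,
    # ... (the rest of this call is not shown in the excerpt)
```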
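The new code flattens `indices` into a column vector and broadcasts it against `masks` before multiplying. The following is a minimal sketch of those shape steps only. It assumes `indices` is a 1-D integer tensor, so `seq_size` is 1 as in the excerpt. `masks` is a hypothetical `(batch_size, num_hashes)` integer tensor. The source truncates the `torch.mul` call and shows no later hashing steps, so this sketch simply returns the element-wise product.

```python
import torch

# A column slice of a 2-D tensor is a non-contiguous 1-D view (stride 4).
base = torch.arange(12).view(3, 4)
raw = base[:, 1]                      # values 1, 5, 9
print(raw.is_contiguous())            # False

indices = raw
batch_size, seq_size = indices.size(0), 1
if not indices.is_contiguous():
    # Copy into a contiguous buffer before reshaping.
    indices = indices.contiguous()
# Column vector of shape (batch_size * seq_size, 1).
indices = indices.view(batch_size * seq_size, 1)

# Hypothetical per-hash multipliers, one row per batch element.
masks = torch.tensor([[3, 7], [3, 7], [3, 7]])

# expand_as broadcasts the (3, 1) column to (3, 2) without copying data.
hashed = torch.mul(indices.expand_as(masks), masks)
print(hashed.tolist())                # [[3, 7], [15, 35], [27, 63]]
```

`expand_as` returns a view with stride 0 along the broadcast dimension, so no data is copied. The multiplication still produces a fresh output tensor.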

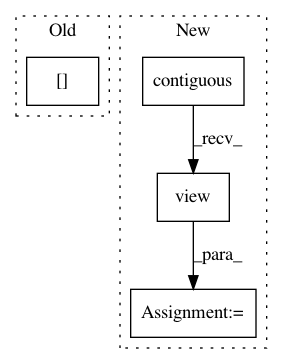

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

| Project Name | Commit Name | Time | Author | File Name | Class Name | Method Name |
|---|---|---|---|---|---|---|
| maciejkula/spotlight | bc51dbc0c56f68ed30857755026633f78eef1ae8 | 2017-08-20 | maciej.kula@gmail.com | spotlight/layers.py | BloomEmbedding | forward |
| asappresearch/sru | bd570b58cefa57062f8c14e06b83800c52f4925a | 2019-09-18 | hp@asapp.com | sru/sru_functional.py | SRU | forward |
| maciejkula/spotlight | fcf346e85356a73472a10c564a0a40011139f6be | 2017-07-02 | maciej.kula@gmail.com | spotlight/sequence/representations.py | CNNNet | user_representation |