68fbfd1876c367323acf830736bae1af499cc0fe,onmt/modules/Transformer.py,TransformerDecoder,forward,#TransformerDecoder#Any#Any#Any#Any#,262

Before Change

if state.previous_input is not None:
    outputs = outputs[state.previous_input.size(0):]
    attn = attn[:, state.previous_input.size(0):].squeeze()
    attn = torch.stack([attn])
attns["std"] = attn
if self._copy:
    attns["copy"] = attn

After Change

saved_inputs = []
for i in range(self.num_layers):
    prev_layer_input = None
    if state.previous_input is not None:
        prev_layer_input = state.previous_layer_inputs[i]
    output, attn, all_input \
        = self.transformer_layers[i](output, src_memory_bank,
                                     src_pad_mask, tgt_pad_mask,
                                     previous_input=prev_layer_input)
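
The loop in the After Change snippet passes each layer its own cached input from earlier decoding steps and collects what each layer returns. The sketch below illustrates that per-layer caching idea in plain Python, without PyTorch. It is not the OpenNMT-py implementation: `ToyLayer`, `decode_step`, and the running-sum "attention" are hypothetical stand-ins chosen only so the behaviour is easy to check by hand.

```python
from typing import List, Optional, Sequence, Tuple


class ToyLayer:
    """Hypothetical stand-in for one decoder layer (not the OpenNMT-py class)."""

    def __init__(self, scale: int) -> None:
        self.scale = scale

    def __call__(
        self, x: List[int], previous_input: Optional[List[int]] = None
    ) -> Tuple[List[int], List[int]]:
        # Prepend the inputs cached from earlier steps so the layer sees the
        # full history, not just the newly decoded positions.
        all_input = (previous_input or []) + x
        offset = len(all_input) - len(x)
        # Toy causal "attention": each new position sums the history up to itself.
        output = [
            self.scale * sum(all_input[: offset + j + 1]) for j in range(len(x))
        ]
        return output, all_input


def decode_step(
    layers: Sequence[ToyLayer],
    x: List[int],
    previous_layer_inputs: Optional[List[List[int]]] = None,
) -> Tuple[List[int], List[List[int]]]:
    """Run all layers once, returning the output and one saved input per layer."""
    saved_inputs = []
    output = x
    for i, layer in enumerate(layers):
        prev_layer_input = None
        if previous_layer_inputs is not None:
            # Each layer has its own cache; layer i must not reuse layer 0's.
            prev_layer_input = previous_layer_inputs[i]
        output, all_input = layer(output, previous_input=prev_layer_input)
        saved_inputs.append(all_input)
    return output, saved_inputs
```

Under these assumptions, decoding one position at a time while feeding `saved_inputs` back in as `previous_layer_inputs` gives the same outputs as decoding the whole sequence in a single call. That equivalence is the property a per-layer cache is meant to preserve.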

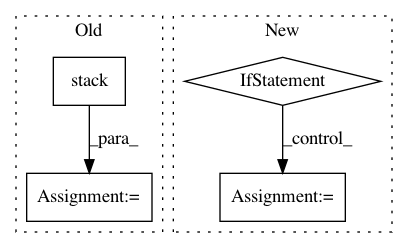

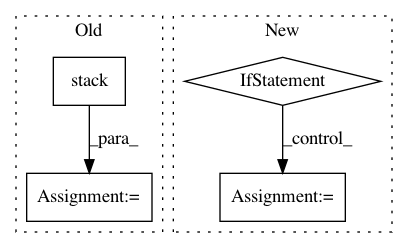

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: OpenNMT/OpenNMT-py

Commit Name: 68fbfd1876c367323acf830736bae1af499cc0fe

Time: 2018-03-07

Author: dengyuntian@gmail.com

File Name: onmt/modules/Transformer.py

Class Name: TransformerDecoder

Method Name: forward

Project Name: catalyst-team/catalyst

Commit Name: 1ef3ad90a3423ed15ca41e0ea4e81012ebe84a9f

Time: 2020-08-11

Author: scitator@gmail.com

File Name: catalyst/data/scripts/project_embeddings.py

Class Name:

Method Name: main

Project Name: OpenNMT/OpenNMT-py

Commit Name: cfb1491c3d172927794d6dffc60048ee56330bd2

Time: 2017-06-15

Author: srush@seas.harvard.edu

File Name: onmt/Models.py

Class Name: Decoder

Method Name: forward