668c3ef362995c55633fde592354160fec1d1efd,onmt/encoders/transformer.py,TransformerEncoder,forward,#TransformerEncoder#Any#Any#,114

Before Change

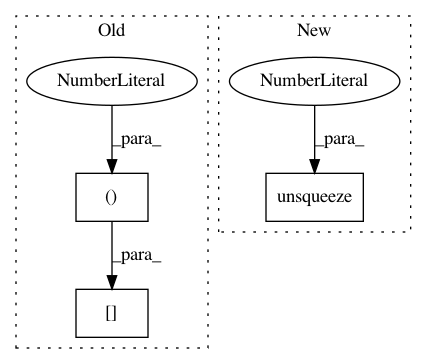

emb = self.embeddings(src)

out = emb.transpose(0, 1).contiguous()

words = src[:, :, 0].transpose(0, 1)

w_batch, w_len = words.size()

padding_idx = self.embeddings.word_padding_idx

mask = words.data.eq(padding_idx).unsqueeze(1)  # [B, 1, T]

After Change

emb = self.embeddings(src)

out = emb.transpose(0, 1).contiguous()

mask = ~sequence_mask(lengths).unsqueeze(1)

# Run the forward pass of every layer of the transformer.

for layer in self.transformer:

    out = layer(out, mask)

out = self.layer_norm(out)

In pattern: SUPERPATTERN
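
The change above replaces a padding mask built by comparing token ids against `padding_idx` with one derived from sequence lengths. The sketch below illustrates why the two can agree. It uses plain Python lists rather than tensors, the helper names are hypothetical stand-ins and not the project's implementation, and it assumes padding appears only at the end of each sequence and that `padding_idx` never occurs as a real token.

```python
def sequence_mask(lengths, max_len=None):
    """Hypothetical list-based stand-in: [B, T] booleans, True at real positions."""
    max_len = max_len or max(lengths)
    return [[t < n for t in range(max_len)] for n in lengths]


def padding_mask_from_lengths(lengths):
    # "After" approach: invert the length mask so True marks padding,
    # then wrap each row to mimic unsqueeze(1), giving shape [B, 1, T].
    return [[[not keep for keep in row]] for row in sequence_mask(lengths)]


def padding_mask_from_tokens(words, padding_idx):
    # "Before" approach: compare every token id with the padding id, shape [B, 1, T].
    return [[[tok == padding_idx for tok in row]] for row in words]


# Illustrative batch (made-up ids): two sequences padded to length 3 with id 1.
words = [[5, 7, 9], [4, 1, 1]]
lengths = [3, 1]

mask_before = padding_mask_from_tokens(words, padding_idx=1)
mask_after = padding_mask_from_lengths(lengths)
```

Under those assumptions both masks mark the same positions, and the length-based version no longer needs to read token ids or the embedding's padding index.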

Frequency: 3

Non-data size: 3

Instances

Project Name: OpenNMT/OpenNMT-py

Commit Name: 668c3ef362995c55633fde592354160fec1d1efd

Time: 2019-06-27

Author: dylan.flaute@gmail.com

File Name: onmt/encoders/transformer.py

Class Name: TransformerEncoder

Method Name: forward

Project Name: OpenNMT/OpenNMT-py

Commit Name: ba164c0dbb3d8171004380956a88431f4e8248ba

Time: 2017-08-01

Author: bpeters@coli.uni-saarland.de

File Name: onmt/Models.py

Class Name: Embeddings

Method Name: make_positional_encodings

Project Name: OpenNMT/OpenNMT-py

Commit Name: 668c3ef362995c55633fde592354160fec1d1efd

Time: 2019-06-27

Author: dylan.flaute@gmail.com

File Name: onmt/decoders/transformer.py

Class Name: TransformerDecoder

Method Name: forward