5e599fb01df65d156a40f7a138ab6627a06a50db,gpflow/optimizers/natgrad.py,NaturalGradient,_natgrad_steps,#NaturalGradient#Any#Any#,113

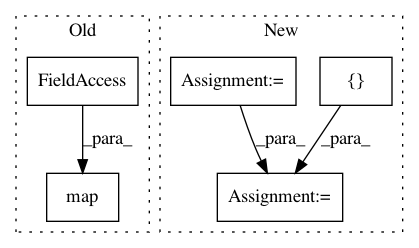

Before Change

self._natgrad_step(loss_fn, q_mu, q_sqrt, xi_transform)

with tf.name_scope(f"{self._name}/natural_gradient_steps"):

list(map(natural_gradient_step, *zip(*parameters)))

def _natgrad_step(

self, loss_fn: Callable, q_mu: Parameter, q_sqrt: Parameter, xi_transform: XiTransform

After Change

tape.watch(unconstrained_variables)

loss = loss_fn()

q_mu_grads, q_sqrt_grads = tape.gradient(loss, [q_mus, q_sqrts])

# NOTE that these are the gradients in *unconstrained* space

with tf.name_scope(f"{self._name}/natural_gradient_steps"):

for q_mu_grad, q_sqrt_grad, q_mu, q_sqrt, xi_transform in zip(

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5
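
The Before Change and After Change snippets above differ in how they iterate over the parameter sets: a `list(map(..., *zip(*parameters)))` call versus an explicit `for ... in zip(` loop. The following is a minimal sketch of that refactoring shape only, in plain Python. The names `step`, `steps_with_map`, `steps_with_loop`, and the float-valued `parameters` are hypothetical stand-ins, not GPflow code, and the toy `step` does not compute a natural gradient.

```python
from typing import List, Sequence, Tuple

# Hypothetical stand-ins: plain floats replace the q_mu / q_sqrt Parameters,
# and `scale` replaces the xi_transform argument.
ParamSet = Tuple[float, float, float]


def step(q_mu: float, q_sqrt: float, scale: float) -> float:
    """Toy per-parameter-set update; NOT the GPflow natural gradient step."""
    return scale * (q_mu + q_sqrt)


def steps_with_map(parameters: Sequence[ParamSet]) -> List[float]:
    # "Before" shape: transpose the parameter sets with zip(*...), then let
    # map() feed one transposed column per positional argument of step().
    return list(map(step, *zip(*parameters)))


def steps_with_loop(parameters: Sequence[ParamSet]) -> List[float]:
    # "After" shape: an explicit loop that unpacks each parameter set by name.
    results = []
    for q_mu, q_sqrt, scale in parameters:
        results.append(step(q_mu, q_sqrt, scale))
    return results


parameters = [(1.0, 2.0, 0.5), (3.0, 4.0, 2.0)]
map_results = steps_with_map(parameters)
loop_results = steps_with_loop(parameters)
```

For non-empty input the two forms produce the same list. One difference in this sketch: with an empty `parameters` sequence the `map` form raises `TypeError`, because `map` then receives no iterables, while the loop form returns an empty list.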

Instances

Project Name: GPflow/GPflow

Commit Name: 5e599fb01df65d156a40f7a138ab6627a06a50db

Time: 2020-05-07

Author: 6815729+condnsdmatters@users.noreply.github.com

File Name: gpflow/optimizers/natgrad.py

Class Name: NaturalGradient

Method Name: _natgrad_steps

Project Name: GPflow/GPflow

Commit Name: b41d4f38436e4a090c940dbd3bc7e2afd39a283e

Time: 2020-04-23

Author: st--@users.noreply.github.com

File Name: gpflow/optimizers/natgrad.py

Class Name: NaturalGradient

Method Name: _natgrad_steps

Project Name: deepmind/sonnet

Commit Name: 8c6540d278883cd3c78b501c69ad67a99d8b34a4

Time: 2017-06-12

Author: noreply@google.com

File Name: sonnet/python/modules/basic.py

Class Name: BatchApply

Method Name: _build