c5c350cf9f9b576cc9de939e4dc308404eb48852,catalyst/dl/experiments/experiment.py,ConfigExperiment,get_optimizer_and_model,#ConfigExperiment#Any#Any#,272

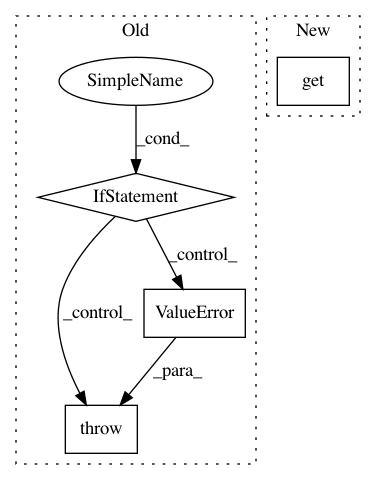

Before Change

```python
if fp16:
    utils.assert_fp16_available()
    from apex import amp
    if fp16_opt_level not in {"O1", "O2", "O3"}:
        raise ValueError("fp16 mode must be one of O1, O2, O3")
    model, optimizer = amp.initialize(
        model, optimizer, opt_level=fp16_opt_level)
elif torch.cuda.device_count() > 1:
    model = torch.nn.DataParallel(model)
```
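The branch order in the "before" excerpt decides which wrapper a model gets. Mixed precision takes precedence, and because the second branch is an `elif`, `DataParallel` is never applied when `fp16` is enabled. The sketch below reproduces only that decision logic. `select_wrapping` is a hypothetical helper that returns a label instead of wrapping a real model, so it runs without `torch` or `apex` installed.

```python
from typing import Optional

# Opt levels accepted by the check in the "before" excerpt.
VALID_OPT_LEVELS = {"O1", "O2", "O3"}


def select_wrapping(fp16: bool, fp16_opt_level: Optional[str], device_count: int) -> str:
    """Hypothetical helper mirroring the branch order of the 'before' code.

    Returns a label describing which wrapper would be applied.
    """
    if fp16:
        # Validate the opt level before any amp initialization would happen.
        if fp16_opt_level not in VALID_OPT_LEVELS:
            raise ValueError("fp16 mode must be one of O1, O2, O3")
        return f"amp:{fp16_opt_level}"
    elif device_count > 1:
        # Multi-GPU replication is only considered when fp16 is off.
        return "DataParallel"
    return "none"


print(select_wrapping(True, "O1", 4))     # fp16 wins even with 4 GPUs
print(select_wrapping(False, None, 2))
print(select_wrapping(False, None, 1))
```

Note that the set excludes `"O0"`, so a pure-FP32 amp run, which apex itself accepts, is rejected by this check.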

After Change

```python
optimizer_params = \
    self.stages_config[stage].get("optimizer_params", {})
distributed_params = \
    self.stages_config[stage].get("distributed_params", {})

optimizer = self._get_optimizer(
    model_params=model_params, **optimizer_params)
```
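In the "after" excerpt, optimizer and distributed settings are read per stage from the config, with `.get(..., {})` supplying an empty dict when a section is missing. The optimizer section is then unpacked as keyword arguments. The sketch below shows that lookup-and-unpack flow in isolation. `get_optimizer`, `build_stage_optimizer`, and the config keys `optimizer` and `lr` are hypothetical stand-ins, not Catalyst's actual `_get_optimizer` signature.

```python
from typing import Any, Dict, Tuple


def get_optimizer(model_params: Any, optimizer: str = "Adam",
                  lr: float = 1e-3, **kwargs: Any) -> Dict[str, Any]:
    """Hypothetical stand-in for self._get_optimizer: records what it received."""
    return {"optimizer": optimizer, "lr": lr, "params": model_params, **kwargs}


def build_stage_optimizer(stages_config: Dict[str, Dict[str, Any]], stage: str,
                          model_params: Any) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    # Indexing the stage directly raises KeyError for an unknown stage,
    # while missing sections inside a known stage fall back to {}.
    stage_config = stages_config[stage]
    optimizer_params = stage_config.get("optimizer_params", {})
    distributed_params = stage_config.get("distributed_params", {})
    # Every key in optimizer_params becomes a keyword argument.
    optimizer = get_optimizer(model_params=model_params, **optimizer_params)
    return optimizer, distributed_params


stages = {
    "stage1": {"optimizer_params": {"optimizer": "SGD", "lr": 0.1, "momentum": 0.9}},
    "stage2": {},  # no sections: defaults apply
}
print(build_stage_optimizer(stages, "stage1", ["w"]))
print(build_stage_optimizer(stages, "stage2", ["w"]))
```

Because the stage section is indexed directly, a misspelled stage name fails loudly, whereas a misspelled section name silently yields defaults.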

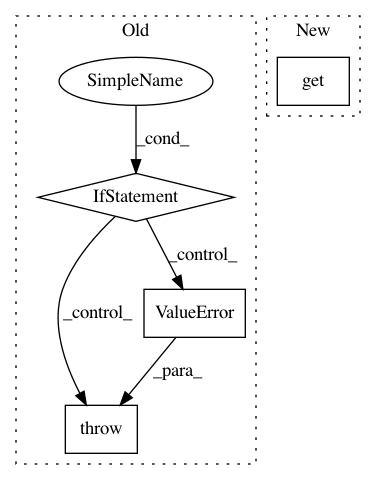

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: catalyst-team/catalyst

Commit Name: c5c350cf9f9b576cc9de939e4dc308404eb48852

Time: 2019-05-28

Author: scitator@gmail.com

File Name: catalyst/dl/experiments/experiment.py

Class Name: ConfigExperiment

Method Name: get_optimizer_and_model

Project Name: asyml/texar

Commit Name: f1b7e12c77298fdce190e7270af61c1a734fc8e1

Time: 2018-03-30

Author: zhitinghu@gmail.com

File Name: texar/core/optimization.py

Class Name:

Method Name: get_train_op

Project Name: tensorlayer/tensorlayer

Commit Name: c7e19808f4956666ae23f69364642108a4fe2513

Time: 2019-04-04

Author: ivbensekin@gmail.com

File Name: tensorlayer/layers/core.py

Class Name: Layer

Method Name: __init__