26421ce20c6b626ceacafbb3282cad1d5dce04ca,onmt/Models.py,Embeddings,forward,#Embeddings#Any#,60

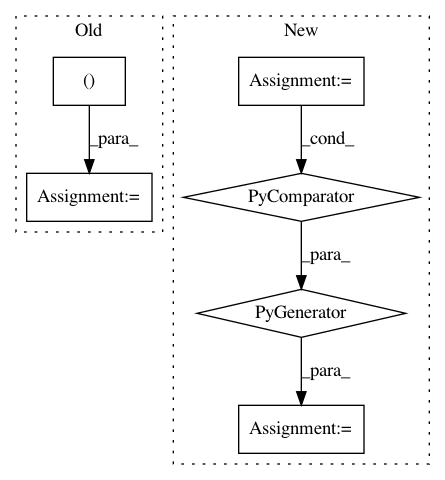

Before Change

    word = self.word_lut(src_input[:, :, 0])
    emb = word
    if self.feature_dicts:
        features = [feature_lut(src_input[:, :, j+1])
                    for j, feature_lut in enumerate(self.feature_luts)]

        # Apply one MLP layer.
        emb = self.activation(
            self.linear(torch.cat([word] + features, -1)))

    if self.positional_encoding:
        emb = emb + Variable(self.pe[:emb.size(0), :1, :emb.size(2)]
                             .expand_as(emb))
        emb = self.dropout(emb)
    return emb
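The positional-encoding step above slices a precomputed table down to the sequence length and embedding width, then broadcasts it over the batch. A minimal sketch of that slice-and-expand, assuming a recent PyTorch (no `Variable` wrapper) and a hypothetical placeholder table `pe` whose values are simply the position index:

```python
import torch

seq_len, batch, dim = 5, 2, 8
emb = torch.zeros(seq_len, batch, dim)  # stand-in for the embedded input

# Hypothetical table of shape max_len x 1 x dim; each row holds its position
# index. The real table's values are not shown in the snippet above.
max_len = 50
pe = torch.arange(max_len, dtype=torch.float).view(max_len, 1, 1)
pe = pe.expand(max_len, 1, dim)

# Keep the first seq_len positions and the first dim channels, then
# broadcast the single batch column to every batch element.
pos = pe[:emb.size(0), :1, :emb.size(2)].expand_as(emb)
out = emb + pos
```

Every batch element receives the same per-position offset, which is why the table only needs a singleton batch dimension.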

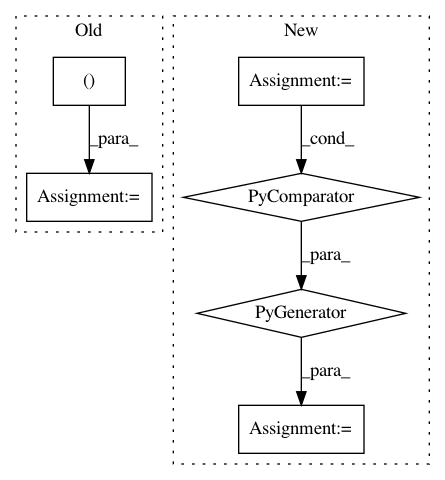

After Change

    # Return:
    #     emb (FloatTensor): len x batch x sum of feature embedding sizes
    feat_inputs = (feat.squeeze(2) for feat in src_input.split(1, dim=2))
    features = [lut(feat) for lut, feat in zip(self.emb_luts, feat_inputs)]
    emb = self.merge(features)
    return emb

class Encoder(nn.Module):
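To illustrate the change, the following sketch compares the index-based lookup from "Before Change" with the split-and-zip lookup from "After Change" on a small random input. The vocabulary sizes, embedding widths, and the use of plain concatenation in place of `self.merge` are assumptions made for illustration; the snippet does not show what `merge` does.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

seq_len, batch = 4, 3
vocab_sizes = [10, 5, 7]  # assumed: word vocabulary, then two feature vocabularies
emb_dims = [6, 2, 3]      # assumed embedding width per column

# One lookup table per input column (word first, then features).
emb_luts = [nn.Embedding(v, d) for v, d in zip(vocab_sizes, emb_dims)]

# src_input: len x batch x n_columns, holding integer indices.
src_input = torch.stack(
    [torch.randint(0, v, (seq_len, batch)) for v in vocab_sizes], dim=2
)

# Before-style lookup: index each column explicitly.
before = [emb_luts[0](src_input[:, :, 0])] + [
    lut(src_input[:, :, j + 1]) for j, lut in enumerate(emb_luts[1:])
]

# After-style lookup: split along the last dimension, drop the singleton
# axis, and pair each column with its table.
feat_inputs = (feat.squeeze(2) for feat in src_input.split(1, dim=2))
after = [lut(feat) for lut, feat in zip(emb_luts, feat_inputs)]

# Assumed merge: concatenate along the embedding dimension.
emb = torch.cat(after, dim=2)
```

Both lookups produce the same per-column embeddings; the split-and-zip form treats the word column and the feature columns uniformly instead of special-casing index 0.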

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 6

Instances

Project Name: OpenNMT/OpenNMT-py

Commit Name: 26421ce20c6b626ceacafbb3282cad1d5dce04ca

Time: 2017-07-30

Author: bpeters@coli.uni-saarland.de

File Name: onmt/Models.py

Class Name: Embeddings

Method Name: forward

Project Name: OpenNMT/OpenNMT-py

Commit Name: 90e5b974a7173ab0bba0990a690e32f25f5b725a

Time: 2019-01-14

Author: benzurdopeters@gmail.com

File Name: tools/embeddings_to_torch.py

Class Name:

Method Name: get_vocabs

Project Name: OpenNMT/OpenNMT-py

Commit Name: 1802cda9ee0d03354daf790f847345f8dd515555

Time: 2017-06-22

Author: digangi@fbk.eu

File Name: onmt/Models.py

Class Name: NMTModel

Method Name: forward