128ea5274db96163c58a99405e7205abcab36192,onmt/Translator.py,Translator,translateBatch,#Translator#Any#Any#,84

Before Change

```python
def unbottle(m):
    return m.view(beamSize, batchSize, -1)

while i < self.opt.max_sent_length \
        or any([len(b.finished) == 0 for b in beam]):

    # Construct batch x beam_size nxt words.
    # Get all the pending current beam words and arrange for forward.
    inp = var(torch.stack([b.getCurrentState() for b in beam])
              .t().contiguous().view(1, -1))

    # Turn any copied words to UNKs
    # 0 is unk
    if self.copy_attn:
        inp = inp.masked_fill(inp.gt(len(self.fields["tgt"].vocab) - 1), 0)

    # Run one step.
    decOut, decStates, attn = \
        self.model.decoder(inp, src, context, decStates)
    decOut = decOut.squeeze(0)
    # decOut: beam x rnn_size

    # (b) Compute a vector of batch*beam word scores.
    if not self.copy_attn:
        out = self.model.generator.forward(decOut).data
        out = unbottle(out)
        # beam x tgt_vocab
    else:
        out = self.model.generator.forward(decOut, attn["copy"].squeeze(0),
                                           srcMap)
        # beam x (tgt_vocab + extra_vocab)
        out = dataset.collapseCopyScores(
            unbottle(out.data),
            batch, self.fields["tgt"].vocab)
        # beam x tgt_vocab
        out = out.log()

    # (c) Advance each beam.
    for j, b in enumerate(beam):
        is_done = b.advance(out[:, j], unbottle(attn["copy"]).data[:, j])
        decStates.beamUpdate_(j, b.getCurrentOrigin(), beamSize)
        if is_done:
            break
    i += 1

if "tgt" in batch.__dict__:
    allGold = self._runTarget(batch, dataset)
else:
    allGold = [0] * batchSize
```

After Change

return m.view(beamSize, batchSize, -1)

for i in range(self.opt.max_sent_length):

if all((b.done() for b in beam) ):

break

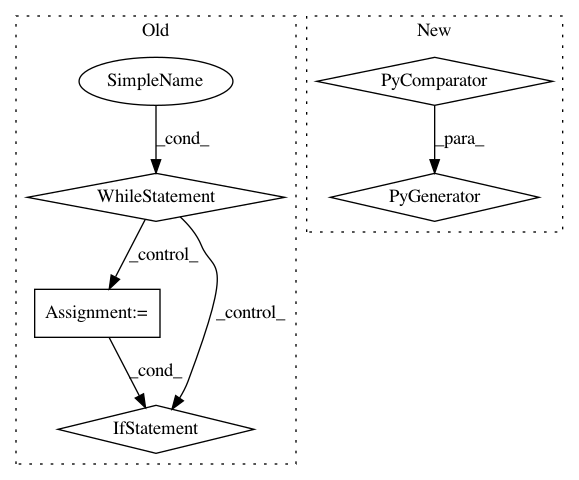

// Construct batch x beam_size nxt words.In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5
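For the OpenNMT-py instance, the change replaces a hand-maintained counter (`i += 1` inside a `while`) with a bounded `for ... in range(...)` loop that breaks early once every beam reports `done()`. The sketch below is a simplified, hypothetical illustration of that restructuring: `Beam`, `decode_while`, and `decode_for` are invented names, and each beam simply finishes after a fixed number of steps rather than scoring tokens. One caveat grounded in the recorded code: the Before loop joins its conditions with `or`, so it can keep running past `max_sent_length` while any beam has no finished hypothesis, whereas the After loop never exceeds `max_sent_length` iterations. The sketch's `decode_while` therefore uses `and not all(...)`, which is the `while` form equivalent to the After loop, not a copy of the original condition.

```python
from dataclasses import dataclass


@dataclass
class Beam:
    """Hypothetical stand-in for a search beam: done after `length` steps."""
    length: int
    steps: int = 0

    def done(self) -> bool:
        return self.steps >= self.length

    def advance(self) -> None:
        self.steps += 1


def decode_while(beams, max_len):
    """Counter-based form: the loop variable is updated by hand."""
    i = 0
    while i < max_len and not all(b.done() for b in beams):
        for b in beams:
            if not b.done():
                b.advance()
        i += 1
    return i  # number of decoding steps taken


def decode_for(beams, max_len):
    """Bounded for-loop form: range() enforces the cap, break exits early."""
    steps = 0
    for _ in range(max_len):
        if all(b.done() for b in beams):
            break
        for b in beams:
            if not b.done():
                b.advance()
        steps += 1
    return steps  # number of decoding steps taken
```

Both functions take the same number of steps: with beams that finish after 2 and 5 steps, each stops after 5 steps when `max_len=10`, and after 3 steps when `max_len=3`. The `for` form removes the risk of forgetting the increment and makes the iteration cap explicit in the loop header.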

Instances:

Project Name: OpenNMT/OpenNMT-py

Commit Name: 128ea5274db96163c58a99405e7205abcab36192

Time: 2017-08-28

Author: srush@seas.harvard.edu

File Name: onmt/Translator.py

Class Name: Translator

Method Name: translateBatch

Project Name: google/deepvariant

Commit Name: 13a85e08e713f374933bc4fc082f67e1fa8dcd02

Time: 2018-02-20

Author: cym@google.com

File Name: deepvariant/util/io_utils.py

Class Name:

Method Name: read_shard_sorted_tfrecords

Project Name: hanxiao/bert-as-service

Commit Name: f0d581c071f14682c46f7917e11592c189382f53

Time: 2018-12-17

Author: hanhxiao@tencent.com

File Name: server/bert_serving/server/__init__.py

Class Name: BertServer

Method Name: _run