f19ace982075ea009af81f5e9f687cc2276f50ea,scripts/bert/fp16_utils.py,,grad_global_norm,#Any#Any#,24

Before Change

x = array.reshape((-1,)).astype("float32", copy=False)

return nd.dot(x, x)

norm_arrays = [_norm(arr) for arr in arrays]

# group norm arrays by ctx

def group_by_ctx(arr_list):

groups = collections.defaultdict(list)

After Change

batch_size : int

Batch size of data processed. Gradient will be normalized by `1/batch_size`.

Set this to 1 if you normalized loss manually with `loss = mean(loss)`.

max_norm : NDArray, optional, default is None

max value for global 2-norm of gradients.

self.fp32_trainer.allreduce_grads()

step_size = batch_size * self._scaler.loss_scale

if max_norm:

_, ratio, is_finite = nlp.utils.grad_global_norm(self.fp32_trainer._params,

max_norm * self._scaler.loss_scale)

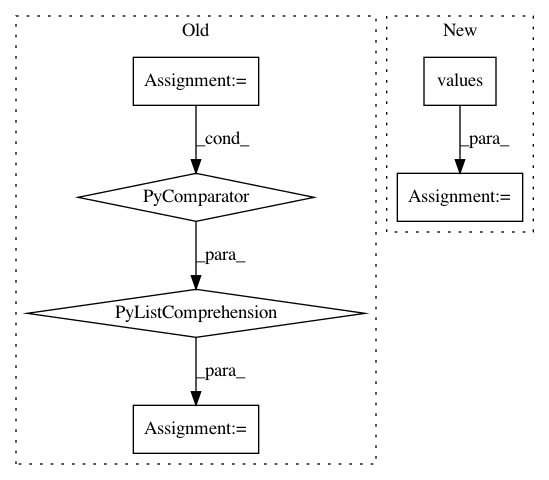

step_size = ratio * step_size

In pattern: SUPERPATTERN
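The After Change snippet folds gradient clipping into the step size: the clipping threshold is multiplied by the loss scale because the gradients are still scaled at that point, and the returned ratio is multiplied into `step_size`. A minimal pure-Python sketch of that arithmetic follows. It is not the gluon-nlp implementation: the helper below is a hypothetical stand-in that only mirrors the three-value return seen in the snippet, `compute_step_size` is an illustrative name, and it assumes that `ratio = max(1, norm / max_norm)` and that the trainer later divides gradients by `step_size`.

```python
import math


def grad_global_norm(grads, max_norm):
    """Hypothetical stand-in for the library call in the snippet.

    `grads` is a list of flat lists of floats.
    Returns (total_norm, ratio, is_finite), mirroring the tuple that the
    snippet unpacks. The ratio formula is an assumption.
    """
    # Global 2-norm: square root of the sum of squares over all arrays.
    total_sq = sum(g * g for arr in grads for g in arr)
    total_norm = math.sqrt(total_sq)
    is_finite = math.isfinite(total_norm)
    # Assumed convention: ratio >= 1, so dividing by it never enlarges gradients.
    ratio = max(1.0, total_norm / max_norm) if is_finite else float("nan")
    return total_norm, ratio, is_finite


def compute_step_size(grads, batch_size, loss_scale, max_norm=None):
    """Illustrative helper: combine batch size, loss scale and clipping ratio."""
    step_size = batch_size * loss_scale
    if max_norm is not None:
        # Gradients are still multiplied by loss_scale, so scale the threshold too.
        _, ratio, is_finite = grad_global_norm(grads, max_norm * loss_scale)
        if is_finite:
            step_size = ratio * step_size
    return step_size


# Scaled gradients with global norm 640 (true norm 5 at loss_scale 128).
scaled_grads = [[384.0, 512.0]]
print(compute_step_size(scaled_grads, batch_size=1, loss_scale=128.0, max_norm=1.0))
```

Under these assumptions, dividing the scaled gradients by the resulting step size both undoes the loss scaling and brings the global norm down to `max_norm`, so no separate clipping pass over the gradients is needed.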

Frequency: 3

Non-data size: 6

Instances

Project Name: dmlc/gluon-nlp

Commit Name: f19ace982075ea009af81f5e9f687cc2276f50ea

Time: 2020-01-20

Author: 50716238+MoisesHer@users.noreply.github.com

File Name: scripts/bert/fp16_utils.py

Class Name:

Method Name: grad_global_norm

Project Name: uber/ludwig

Commit Name: ca98f96d527a03a7d7d76377eff44e1591d93ebe

Time: 2020-05-04

Author: jimthompson5802@gmail.com

File Name: ludwig/models/modules/combiners.py

Class Name: ConcatCombiner

Method Name: call

Project Name: ray-project/ray

Commit Name: 62c7ab518214286a4721dd7410978effd6d05471

Time: 2020-11-12

Author: sven@anyscale.io

File Name: rllib/execution/train_ops.py

Class Name: TrainTFMultiGPU

Method Name: __call__