aab3902d4a7d55f5a86058854adc36b8a12c873f,catalyst/dl/callbacks/base.py,OptimizerCallback,on_batch_end,#OptimizerCallback#Any#,202

Before Change

else:
    model = state.model
    model.zero_grad()
    optimizer = state.get_key(
        key="optimizer", inner_key=self.optimizer_key
    )
    loss = state.get_key(key="loss", inner_key=self.optimizer_key)
    scaled_loss = self.fp16_grad_scale * loss.float()
    scaled_loss.backward()

    master_params = list(optimizer.param_groups[0]["params"])
    model_params = list(
        filter(lambda p: p.requires_grad, model.parameters())
    )
    copy_grads(source=model_params, target=master_params)
    for param in master_params:
        param.grad.data.mul_(1. / self.fp16_grad_scale)
    self.grad_step(
        optimizer=optimizer,
        optimizer_wd=self._optimizer_wd,
        grad_clip_fn=self.grad_clip_fn
    )

After Change

def on_batch_end(self, state):

loss = state.get_key(key="loss", inner_key=self.loss_key)

if isinstance(loss, dict):

loss = list(loss.values())

if isinstance(loss, list):

loss = torch.mean(torch.stack(loss))

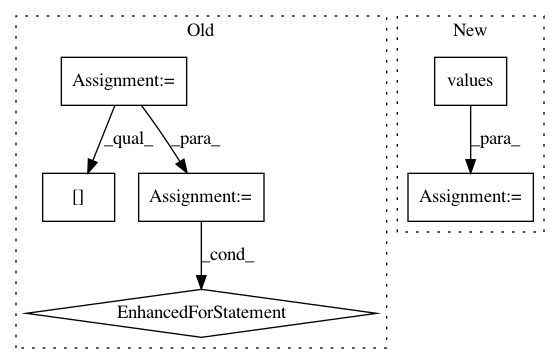

if self.prefix is not None:In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 6
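The before-change branch applies static loss scaling for FP16 training: the loss is multiplied by `self.fp16_grad_scale` before `backward()`, the gradients of the trainable model parameters are copied into the optimizer's parameters (presumably full-precision "master" copies) with `copy_grads`, and each of those gradients is multiplied by `1. / self.fp16_grad_scale` before `grad_step`. The sketch below illustrates why the scaling matters, using the half-precision "e" format of Python's `struct` module as a stand-in for fp16 storage. The helper names `to_fp16` and `scaled_master_grad` and the scale of 1024 are hypothetical illustrations, not catalyst code.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to IEEE 754 half precision and back."""
    return struct.unpack("e", struct.pack("e", x))[0]


def scaled_master_grad(true_grad: float, scale: float) -> float:
    """Hypothetical helper mimicking static loss scaling for one gradient.

    The scaled gradient is stored in fp16 (as the model params would hold it),
    copied into a full-precision master value, then multiplied by 1/scale.
    """
    fp16_grad = to_fp16(true_grad * scale)  # backward() on the scaled loss
    master_grad = float(fp16_grad)          # copy_grads: fp16 -> master copy
    return master_grad * (1.0 / scale)      # param.grad.data.mul_(1. / scale)


tiny = 1e-8
print(to_fp16(tiny))                     # 0.0: the gradient underflows in fp16
print(scaled_master_grad(tiny, 1024.0))  # small but nonzero, close to 1e-8
```

Without scaling, a gradient of 1e-8 is below half of the smallest fp16 subnormal (about 5.96e-8) and rounds to zero; scaled by 1024 it lands inside the representable range, and dividing by the scale afterwards in full precision recovers a value close to the original.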

Instances

Project Name: catalyst-team/catalyst

Commit Name: aab3902d4a7d55f5a86058854adc36b8a12c873f

Time: 2019-05-20

Author: ekhvedchenya@gmail.com

File Name: catalyst/dl/callbacks/base.py

Class Name: OptimizerCallback

Method Name: on_batch_end

Project Name: NifTK/NiftyNet

Commit Name: fd8ca0c975e380dfcd7239408258b5852be33f06

Time: 2017-08-12

Author: wenqi.li@ucl.ac.uk

File Name: niftynet/engine/sampler_grid.py

Class Name: GridSampler

Method Name: layer_op

Project Name: ray-project/ray

Commit Name: 9c5e5a97576f9bb8c7d7256cd566cdd4bcd63a3f

Time: 2020-08-18

Author: mfitton@berkeley.edu

File Name: python/ray/tests/test_metrics.py

Class Name:

Method Name: test_raylet_info_endpoint
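The after-change `on_batch_end` accepts a loss stored under `self.loss_key` as a single value, a list, or a dict, and reduces the dict and list cases to one scalar by averaging. A minimal sketch of the same control flow, assuming plain Python floats stand in for tensors: `statistics.mean` replaces `torch.mean(torch.stack(...))`, and the function name `reduce_loss` is hypothetical.

```python
from statistics import mean
from typing import Dict, List, Union

# A loss as the callback may receive it: scalar, list of scalars, or named dict.
LossLike = Union[float, List[float], Dict[str, float]]


def reduce_loss(loss: LossLike) -> float:
    """Collapse a scalar, list, or dict loss into a single scalar.

    Mirrors the dict -> list -> mean steps of the after-change code,
    with floats in place of tensors.
    """
    if isinstance(loss, dict):
        loss = list(loss.values())  # drop the names, keep the values
    if isinstance(loss, list):
        loss = mean(loss)           # equal-weight average of the components
    return loss


print(reduce_loss({"ce": 1.0, "dice": 3.0}))  # 2.0
print(reduce_loss([0.5, 1.5]))                # 1.0
print(reduce_loss(0.7))                       # 0.7
```

Averaging gives every component the same weight; a weighted sum would need explicit per-key coefficients, which the snippet does not show.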