32579822389423c7f4120e222aa26652f8507735,onmt/utils/optimizers.py,Optimizer,set_parameters,#Optimizer#Any#,172

Before Change

        self.sparse_params = []
        for k, p in params:
            if p.requires_grad:
                if self.method != "sparseadam" or "embed" not in k:
                    self.params.append(p)
                else:
                    self.sparse_params.append(p)
        if self.method == "sgd":
            self.optimizer = optim.SGD(self.params, lr=self.learning_rate)
        elif self.method == "adagrad":
            self.optimizer = optim.Adagrad(self.params, lr=self.learning_rate)

After Change

        elif self.method == "sparseadam":
            dense = []
            sparse = []
            for name, param in model.named_parameters():
                if not param.requires_grad:
                    continue
                # TODO: Find a better way to check for sparse gradients.
                if "embed" in name:
                    sparse.append(param)
                else:
                    dense.append(param)
            self.optimizer = MultipleOptimizer(
                [optim.Adam(dense, lr=self.learning_rate,
                            betas=self.betas, eps=1e-8),
                 optim.SparseAdam(sparse, lr=self.learning_rate,

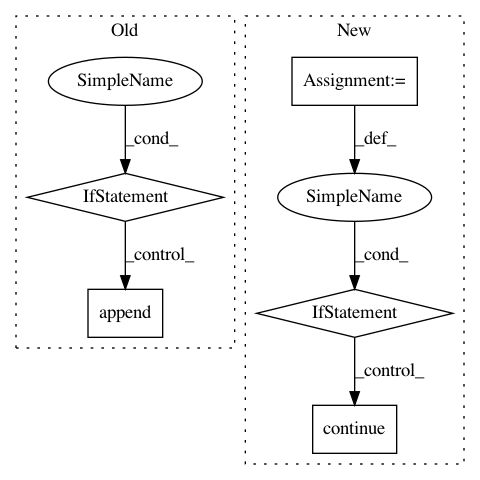

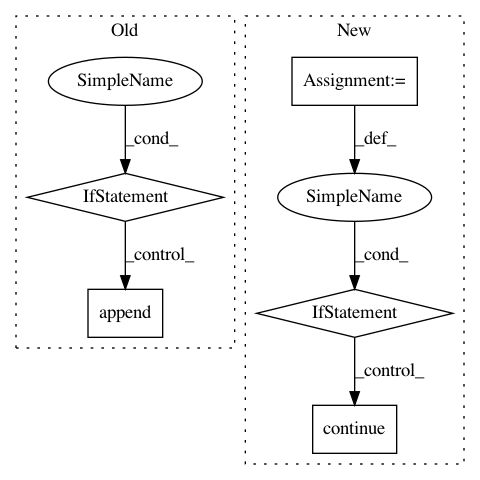
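The After Change snippet is cut off, so the following is only a minimal sketch of the idea it shows: trainable parameters are split by name into a dense and a sparse group, and one optimizer per group is driven through a single wrapper. The names `split_dense_sparse` and `MultiOptimizerSketch` are hypothetical and are not taken from the commit. The wrapper is an assumed stand-in for `MultipleOptimizer`, whose real definition does not appear in this record. Plain stand-in objects replace PyTorch parameters so the sketch runs without any dependency.

```python
from types import SimpleNamespace


def split_dense_sparse(named_params, sparse_key="embed"):
    """Split trainable parameters into (dense, sparse) lists by name.

    Hypothetical helper: it mirrors the loop in the After Change snippet.
    """
    dense, sparse = [], []
    for name, param in named_params:
        if not param.requires_grad:
            continue  # frozen parameters are not handed to any optimizer
        # Name-based heuristic, as in the commit; it does not inspect gradients.
        if sparse_key in name:
            sparse.append(param)
        else:
            dense.append(param)
    return dense, sparse


class MultiOptimizerSketch:
    """Assumed stand-in for MultipleOptimizer: forwards calls to each optimizer."""

    def __init__(self, optimizers):
        self.optimizers = list(optimizers)

    def zero_grad(self):
        for opt in self.optimizers:
            opt.zero_grad()

    def step(self):
        for opt in self.optimizers:
            opt.step()


# Stand-in parameters: only the requires_grad attribute matters here.
named = [
    ("encoder.embeddings.weight", SimpleNamespace(requires_grad=True)),
    ("decoder.rnn.weight", SimpleNamespace(requires_grad=True)),
    ("generator.bias", SimpleNamespace(requires_grad=False)),
]
dense, sparse = split_dense_sparse(named)
```

With real PyTorch objects, the pairs would come from `model.named_parameters()`, and the two lists would be passed to `optim.Adam` and `optim.SparseAdam` respectively, as in the snippet. Compared with the Before Change version, the split becomes local to the `sparseadam` branch instead of being stored on `self.params` and `self.sparse_params` for every method.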

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: OpenNMT/OpenNMT-py

Commit Name: 32579822389423c7f4120e222aa26652f8507735

Time: 2018-12-18

Author: guillaumekln@users.noreply.github.com

File Name: onmt/utils/optimizers.py

Class Name: Optimizer

Method Name: set_parameters

Project Name: NifTK/NiftyNet

Commit Name: 453269872f7e4dc9a6dc81a1c758ffc365cfc312

Time: 2017-05-22

Author: wenqi.li@ucl.ac.uk

File Name: utilities/constraints_classes.py

Class Name: ConstraintSearch

Method Name: create_list_from_constraint

Project Name: streamlit/streamlit

Commit Name: d24989cdda816343f74a97fc32ce3fd1a689a265

Time: 2019-07-16

Author: thiago@streamlit.io

File Name: lib/streamlit/Server.py

Class Name:

Method Name: _is_url_from_allowed_origins