665b0076e089dbe60ecadc34bf2cbff9d7042482,keras/optimizers.py,SGD,__init__,#SGD#Any#Any#Any#,171

Before Change

self.iterations = K.variable(0, dtype="int64", name="iterations")

learning_rate = kwargs.pop("lr", learning_rate)

self.lr = K.variable(learning_rate, name="learning_rate")

self.momentum = K.variable(momentum, name="momentum")

self.decay = K.variable(self.initial_decay, name="decay")

self._momentum = True if momentum > 0. else False

self.nesterov = nesterov

super(SGD, self).__init__(**kwargs)
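
The `kwargs.pop("lr", learning_rate)` line in the snippet above lets callers keep passing the older `lr` keyword. A minimal standalone sketch of that aliasing idiom follows; it does not use Keras, and the helper name `resolve_learning_rate` and its default value are hypothetical, chosen only for illustration.

```python
def resolve_learning_rate(learning_rate=0.01, **kwargs):
    """Return the effective learning rate and the leftover kwargs.

    Hypothetical helper that mirrors `kwargs.pop("lr", learning_rate)`.
    """
    # If the legacy key "lr" is present, it overrides learning_rate and is
    # removed from kwargs; otherwise learning_rate is kept unchanged.
    learning_rate = kwargs.pop("lr", learning_rate)
    return learning_rate, kwargs


if __name__ == "__main__":
    print(resolve_learning_rate(0.1))
    print(resolve_learning_rate(0.1, lr=0.5, clipnorm=1.0))
```

Popping the key, rather than only reading it, keeps `lr` out of the kwargs that are later forwarded to the base-class constructor.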

After Change

self.lr = K.variable(lr, name="lr")

self.decay = K.variable(decay, name="decay")

self.iterations = K.variable(0, dtype="int64", name="iterations")

if epsilon is None:

    epsilon = K.epsilon()

self.epsilon = epsilon

self.initial_decay = decay

@interfaces.legacy_get_updates_support

def get_updates(self, loss, params):
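
The `epsilon is None` check in the After Change snippet falls back to a backend-wide default when the caller does not supply a value. A small sketch of that fallback, independent of Keras: `DEFAULT_EPSILON` is an assumed stand-in for `K.epsilon()`, and `resolve_epsilon` is a hypothetical name.

```python
DEFAULT_EPSILON = 1e-7  # assumption: stand-in for the value K.epsilon() returns


def resolve_epsilon(epsilon=None):
    """Return the caller's epsilon, or the default when none was given."""
    # Compare against None rather than testing truthiness, so that an
    # explicit 0.0 from the caller is preserved instead of being replaced.
    if epsilon is None:
        epsilon = DEFAULT_EPSILON
    return epsilon


if __name__ == "__main__":
    print(resolve_epsilon())
    print(resolve_epsilon(1e-3))
```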

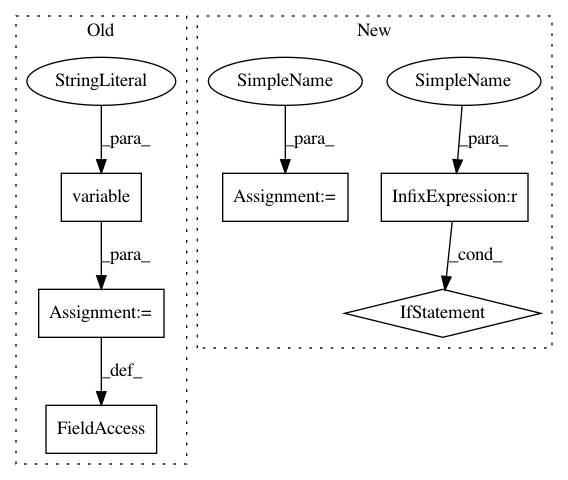

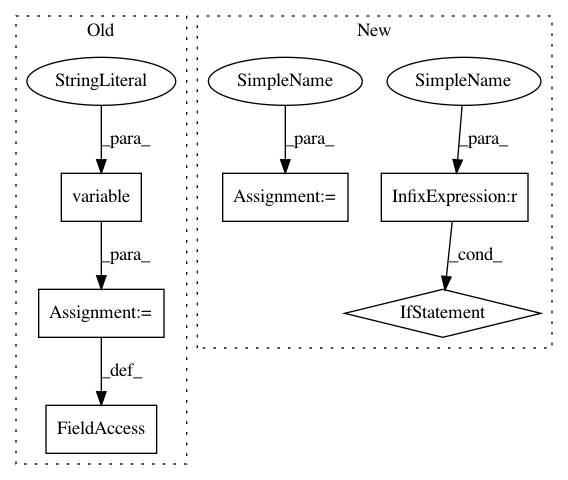

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 6

Instances

Project Name: keras-team/keras

Commit Name: 665b0076e089dbe60ecadc34bf2cbff9d7042482

Time: 2019-05-28

Author: francois.chollet@gmail.com

File Name: keras/optimizers.py

Class Name: SGD

Method Name: __init__

Project Name: keras-team/keras

Commit Name: 665b0076e089dbe60ecadc34bf2cbff9d7042482

Time: 2019-05-28

Author: francois.chollet@gmail.com

File Name: keras/optimizers.py

Class Name: Adam

Method Name: __init__

Project Name: keras-team/keras

Commit Name: 665b0076e089dbe60ecadc34bf2cbff9d7042482

Time: 2019-05-28

Author: francois.chollet@gmail.com

File Name: keras/optimizers.py

Class Name: RMSprop

Method Name: __init__