a90cd0fcbb81c2be472b16c61bebc75125d16133,rllib/examples/unity3d_env_local.py,,,#,29

Before Change

policies, policy_mapping_fn = \
    Unity3DEnv.get_policy_configs_for_game(args.env)
config = {
    "env": "unity3d",
    "env_config": {
        "episode_horizon": args.horizon,
    },
    # IMPORTANT: Just use one Worker (we only have one Unity running)!
    "num_workers": 0,
    # Other settings.
    "sample_batch_size": 64,
    "train_batch_size": 256,
    "rollout_fragment_length": 20,
    # Multi-agent setup for the particular env.
    "multiagent": {
        "policies": policies,
        "policy_mapping_fn": policy_mapping_fn,
    },
    "framework": "tf",
}
stop = {
    "training_iteration": args.stop_iters,
    "timesteps_total": args.stop_timesteps,

After Change

    help="The name of the Env to run in the Unity3D editor: `3DBall(Hard)?|"
    "SoccerStrikersVsGoalie|Tennis|VisualHallway|Walker` (feel free to add "
    "more and PR!)")
parser.add_argument(
    "--file-name",
    type=str,
    default=None,
    help="The Unity3d binary (compiled) game, e.g. "
    "'/home/ubuntu/soccer_strikers_vs_goalie_linux.x86_64'. Use `None` for "
    "a currently running Unity3D editor.")
parser.add_argument(
    "--from-checkpoint",
    type=str,
    default=None,
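
The After Change snippet is cut off mid-call, so the following is only a minimal, self-contained sketch of how the two new options might be parsed with the standard-library `argparse` module. The `build_parser` helper, the sample path `/tmp/game.x86_64`, and the help text for `--from-checkpoint` are assumptions made for illustration; they do not appear in the commit excerpt.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical helper: builds a parser with the two options shown above."""
    parser = argparse.ArgumentParser()
    parser.add_argument(
        "--file-name",
        type=str,
        default=None,
        help="The Unity3d binary (compiled) game, e.g. "
        "'/home/ubuntu/soccer_strikers_vs_goalie_linux.x86_64'. Use `None` for "
        "a currently running Unity3D editor.")
    parser.add_argument(
        "--from-checkpoint",
        type=str,
        default=None,
        # Assumed help text; the original is truncated before this argument.
        help="(assumed) Path to a checkpoint to restore from.")
    return parser


# argparse maps "--file-name" to the attribute `file_name`.
args = build_parser().parse_args(["--file-name", "/tmp/game.x86_64"])
print(args.file_name, args.from_checkpoint)
```

Leaving both defaults at `None` keeps the original behavior available: with no `--file-name`, the script would still target a currently running Unity3D editor, as the help text states.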

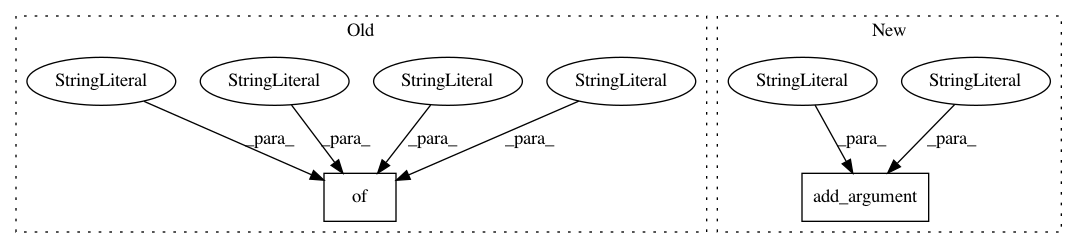

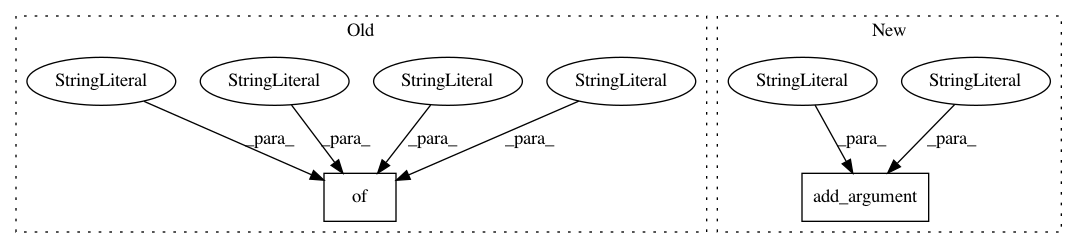

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 2

Instances

Project Name: ray-project/ray

Commit Name: a90cd0fcbb81c2be472b16c61bebc75125d16133

Time: 2020-06-11

Author: sven@anyscale.io

File Name: rllib/examples/unity3d_env_local.py

Class Name:

Method Name:

Project Name: asyml/texar

Commit Name: 04c86488fcfe4958da6b44c4067d9bfa4e05c532

Time: 2018-04-18

Author: shore@pku.edu.cn

File Name: examples/transformer/hyperparams.py

Class Name:

Method Name:

Project Name: asyml/texar

Commit Name: bc7aff6bd3872ca41468bdfeb6cc0512f87b24c0

Time: 2018-04-07

Author: shore@pku.edu.cn

File Name: examples/transformer/hyperparams.py

Class Name:

Method Name: