b1b61d3f90cf795c7b48b6d109db7b7b96fa21ff,src/gluonnlp/model/attention_cell.py,MultiHeadAttentionCell,__init__,#MultiHeadAttentionCell#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#,206

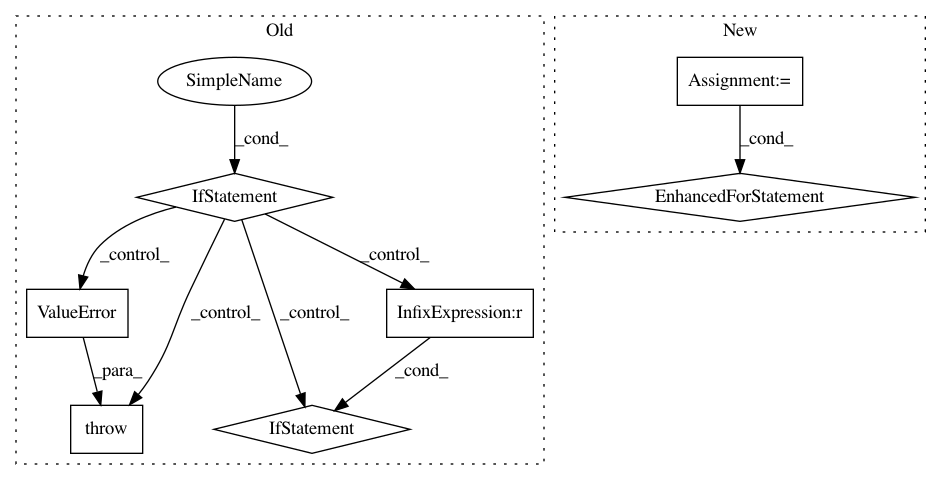

Before Change

        self._value_units = value_units
        self._num_heads = num_heads
        self._use_bias = use_bias
        if self._query_units % self._num_heads != 0:
            raise ValueError("In MultiHeadAttetion, the query_units should be divided exactly"
                             " by the number of heads. Received query_units={}, num_heads={}"
                             .format(key_units, num_heads))
        if self._key_units % self._num_heads != 0:
            raise ValueError("In MultiHeadAttetion, the key_units should be divided exactly"
                             " by the number of heads. Received key_units={}, num_heads={}"
                             .format(key_units, num_heads))
        if self._value_units % self._num_heads != 0:
            raise ValueError("In MultiHeadAttetion, the value_units should be divided exactly"
                             " by the number of heads. Received value_units={}, num_heads={}"
                             .format(value_units, num_heads))

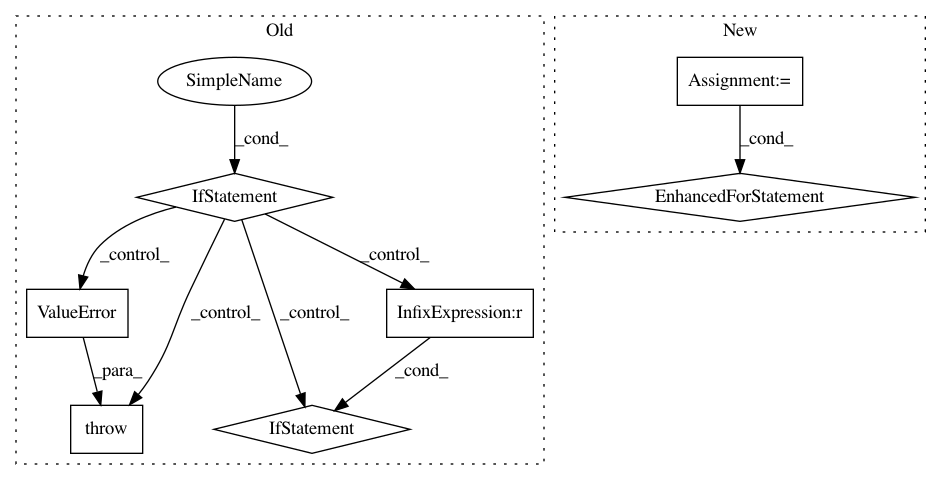

After Change

        self._num_heads = num_heads
        self._use_bias = use_bias
        units = {"query": query_units, "key": key_units, "value": value_units}
        for name, unit in units.items():
            if unit % self._num_heads != 0:
                raise ValueError(
                    "In MultiHeadAttetion, the {name}_units should be divided exactly"
                    " by the number of heads. Received {name}_units={unit}, num_heads={n}".format(
                        name=name, unit=unit, n=num_heads))
            setattr(self, "_{}_units".format(name), unit)
            with self.name_scope():
                setattr(
                    self, "proj_{}".format(name),
                    nn.Dense(units=self._query_units, use_bias=self._use_bias, flatten=False,
                             weight_initializer=weight_initializer,
                             bias_initializer=bias_initializer, prefix="{}_".format(name)))

def __call__(self, query, key, value=None, mask=None):

Compute the attention.

Parameters
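The refactoring recorded in this change (three near-identical divisibility checks collapsed into one loop over a name-to-value dict) can be sketched in isolation, without MXNet. This is a minimal illustration, not the library's code: `validate_units` and `HeadConfig` are hypothetical names introduced here, and the error text is shortened. Because the message is built from the loop variables, it cannot report the wrong argument, as the Before Change query check does by formatting `key_units`.

```python
def validate_units(num_heads, query_units, key_units, value_units):
    """Hypothetical helper: check that each unit size is divisible by num_heads.

    Returns the name -> size mapping so the caller can reuse it.
    """
    units = {"query": query_units, "key": key_units, "value": value_units}
    for name, unit in units.items():
        if unit % num_heads != 0:
            # The message is derived from the loop variables, so the reported
            # name and value always match the argument that failed.
            raise ValueError(
                "the {name}_units should be divisible by the number of heads."
                " Received {name}_units={unit}, num_heads={n}".format(
                    name=name, unit=unit, n=num_heads))
    return units


class HeadConfig:
    """Hypothetical stand-in for the cell; it only stores the validated sizes."""

    def __init__(self, query_units, key_units, value_units, num_heads):
        self._num_heads = num_heads
        validated = validate_units(num_heads, query_units, key_units, value_units)
        for name, unit in validated.items():
            # Same attribute naming as the After Change snippet:
            # _query_units, _key_units, _value_units.
            setattr(self, "_{}_units".format(name), unit)


if __name__ == "__main__":
    cfg = HeadConfig(query_units=8, key_units=16, value_units=32, num_heads=4)
    print(cfg._query_units, cfg._key_units, cfg._value_units)
```

One trade-off of this style is that attributes created through `setattr` are invisible to static analysis and to a text search for `self._query_units =`, so the loop trades some discoverability for less duplication.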

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 7

Instances

Project Name: dmlc/gluon-nlp

Commit Name: b1b61d3f90cf795c7b48b6d109db7b7b96fa21ff

Time: 2019-08-04

Author: lausen@amazon.com

File Name: src/gluonnlp/model/attention_cell.py

Class Name: MultiHeadAttentionCell

Method Name: __init__

Project Name: chainer/chainercv

Commit Name: 1cd87b67822c94c49d30ce1eabce792b3db7c272

Time: 2017-06-14

Author: yuyuniitani@gmail.com

File Name: chainercv/links/model/vgg/vgg.py

Class Name: VGG16Layers

Method Name: __init__

Project Name: NTMC-Community/MatchZoo

Commit Name: 2692f696e534b05bdbbb40e725018e6e3287f969

Time: 2018-12-24

Author: i@uduse.com

File Name: matchzoo/layers/matching_layer.py

Class Name: MatchingLayer

Method Name: build