668c3ef362995c55633fde592354160fec1d1efd,onmt/decoders/transformer.py,TransformerDecoder,forward,#TransformerDecoder#Any#Any#Any#,189

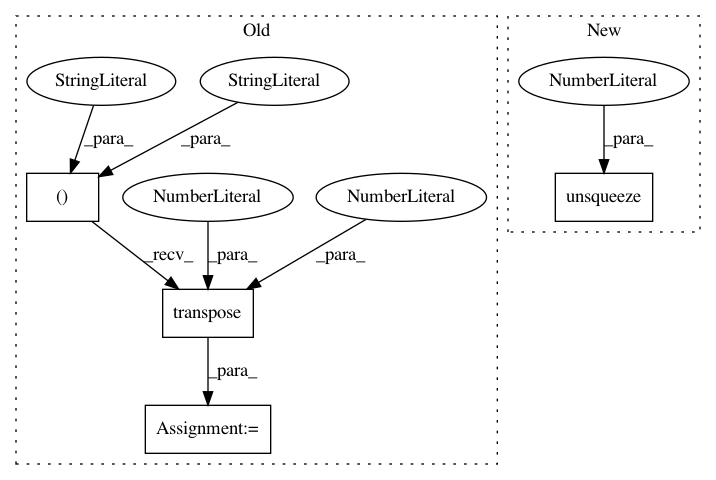

Before Change

self._init_cache(memory_bank)

src = self.state["src"]

src_words = src[:, :, 0].transpose(0, 1)

tgt_words = tgt[:, :, 0].transpose(0, 1)

src_batch, src_len = src_words.size()

tgt_batch, tgt_len = tgt_words.size()

emb = self.embeddings(tgt, step=step)

assert emb.dim() == 3  # len x batch x embedding_dim
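The Before Change lines index `src[:, :, 0]` and later read the length from `shape[0]`, which implies an index tensor laid out as `[len, batch, n_feats]` with the word id in feature slot 0. The following is a minimal sketch of that shape bookkeeping. The `make_batch` helper, the token ids, and `pad_idx = 1` are hypothetical values chosen only for illustration.

```python
import torch

def make_batch(seqs, pad_idx):
    """Hypothetical helper: pack word-id lists into a [len, batch, 1] tensor,
    right-padding shorter sequences with pad_idx."""
    max_len = max(len(s) for s in seqs)
    padded = [s + [pad_idx] * (max_len - len(s)) for s in seqs]
    # [batch, len] -> [len, batch] -> [len, batch, 1] (single feature: the word id)
    return torch.tensor(padded).transpose(0, 1).unsqueeze(2)

pad_idx = 1  # assumed padding index for this example
src = make_batch([[5, 6, 7], [8, 9]], pad_idx)
tgt = make_batch([[2, 4], [2, 3]], pad_idx)

# Same indexing as the Before Change code: keep feature 0, then put batch first.
src_words = src[:, :, 0].transpose(0, 1)  # [batch, src_len]
tgt_words = tgt[:, :, 0].transpose(0, 1)  # [batch, tgt_len]

src_batch, src_len = src_words.size()
tgt_batch, tgt_len = tgt_words.size()
print(src_batch, src_len, tgt_batch, tgt_len)  # 2 3 2 2
print(src_words.tolist())  # [[5, 6, 7], [8, 9, 1]]
```

The transpose puts the batch dimension first, which is the layout the later masks and attention layers use.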

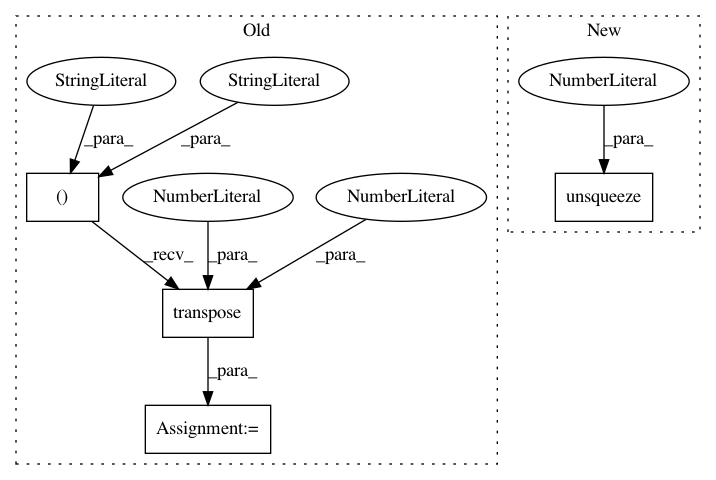

After Change

pad_idx = self.embeddings.word_padding_idx

src_lens = kwargs["memory_lengths"]

src_max_len = self.state["src"].shape[0]

src_pad_mask = ~sequence_mask(src_lens, src_max_len).unsqueeze(1)

tgt_pad_mask = tgt_words.data.eq(pad_idx).unsqueeze(1)  # [B, 1, T_tgt]

for i, layer in enumerate(self.transformer_layers):
    layer_cache = self.state["cache"]["layer_{}".format(i)] \
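In the After Change code the source mask is built from lengths (`memory_lengths`) rather than from word ids, while the target mask still compares `tgt_words` with the padding index. The `~` implies that `sequence_mask` marks valid positions as `True`, so both masks end up as boolean `[B, 1, T]` tensors that are `True` at padded positions. The sketch below re-implements a length-based `sequence_mask` under that assumption (it is not a copy of the library function), checks that it agrees with the id-based mask for right-padded input, and shows how a `[B, 1, T]` mask broadcasts over hypothetical `[B, T_q, T_k]` attention scores via `masked_fill`. The ids, lengths, scores, and `pad_idx` are made up for illustration.

```python
import torch

def sequence_mask(lengths, max_len=None):
    """Sketch of a length-based mask: [B, max_len], True where position < length.
    Assumed to match the semantics of the sequence_mask used in the source."""
    max_len = max_len or int(lengths.max())
    positions = torch.arange(max_len, device=lengths.device)  # [max_len]
    return positions.unsqueeze(0) < lengths.unsqueeze(1)      # [B, max_len]

pad_idx = 1  # assumed padding index
# Right-padded word ids, batch first: [B, S]
src_words = torch.tensor([[5, 6, 7], [8, 9, pad_idx]])
src_lens = torch.tensor([3, 2])
src_max_len = src_words.size(1)

# After Change style: mask from lengths; ~ turns "valid" into "padded".
src_pad_mask = ~sequence_mask(src_lens, src_max_len).unsqueeze(1)  # [B, 1, S]

# Id-based style, as still used for tgt_pad_mask.
src_pad_mask_from_ids = src_words.eq(pad_idx).unsqueeze(1)  # [B, 1, S]
assert torch.equal(src_pad_mask, src_pad_mask_from_ids)

# Hypothetical attention scores [B, T_q, S]; the [B, 1, S] mask broadcasts over T_q.
scores = torch.zeros(2, 4, src_max_len)
masked = scores.masked_fill(src_pad_mask, float("-inf"))
attn = torch.softmax(masked, dim=-1)
print(attn[1, 0].tolist())  # [0.5, 0.5, 0.0]
```

The two constructions agree only when padding is right-aligned and no real token uses the id `pad_idx`; the length-based version does not depend on the token ids at all.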

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: OpenNMT/OpenNMT-py

Commit Name: 668c3ef362995c55633fde592354160fec1d1efd

Time: 2019-06-27

Author: dylan.flaute@gmail.com

File Name: onmt/decoders/transformer.py

Class Name: TransformerDecoder

Method Name: forward

Project Name: OpenNMT/OpenNMT-py

Commit Name: 5972cb1690cafd70c2d1ef36c42707ec36e05276

Time: 2017-07-04

Author: sasha.rush@gmail.com

File Name: onmt/Models.py

Class Name: Decoder

Method Name: forward

Project Name: OpenNMT/OpenNMT-py

Commit Name: 668c3ef362995c55633fde592354160fec1d1efd

Time: 2019-06-27

Author: dylan.flaute@gmail.com

File Name: onmt/encoders/transformer.py

Class Name: TransformerEncoder

Method Name: forward