8b68349d43621619fe799ab4afc21da7f1fb2515,examples/reinforcement_learning/tutorial_PPO.py,,,#,233

Before Change

all_ep_r.append(ep_r)

else:

all_ep_r.append(all_ep_r[-1] * 0.9 + ep_r * 0.1)

print(

"Ep: %i" % ep,

"|Ep_r: %i" % ep_r,

("|Lam: %.4f" % METHOD["lam"]) if METHOD["name"] == "kl_pen" else "",

)

plt.ion()

plt.cla()

plt.title("PPO")
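The `all_ep_r` lines in this snippet keep an exponentially smoothed reward curve for plotting: the first episode is stored as-is, and each later entry mixes 90% of the previous smoothed value with 10% of the new episode reward. A minimal standalone sketch of that smoothing follows; the function name `smooth_episode_rewards` and the `decay` parameter are hypothetical and do not appear in the tutorial.

```python
def smooth_episode_rewards(episode_rewards, decay=0.9):
    """Exponentially smooth a sequence of episode rewards.

    Hypothetical helper: mirrors the all_ep_r update in the snippet,
    where decay=0.9 corresponds to the 0.9 / 0.1 weights.
    """
    smoothed = []
    for ep_r in episode_rewards:
        if not smoothed:
            # First episode: nothing to smooth against yet.
            smoothed.append(ep_r)
        else:
            # Blend the previous smoothed value with the new reward.
            smoothed.append(smoothed[-1] * decay + ep_r * (1 - decay))
    return smoothed
```

With `decay=0.9`, a single unusually good or bad episode moves the plotted curve by only a tenth of its deviation, which is presumably why the tutorial plots the smoothed series rather than raw rewards.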

After Change

s = env.reset()

buffer_s, buffer_a, buffer_r = [], [], []

ep_r = 0

t0 = time.time()

for t in range(EP_LEN):  # in one episode

# env.render()

a = ppo.choose_action(s)

s_, r, done, _ = env.step(a)

buffer_s.append(s)

buffer_a.append(a)

buffer_r.append((r + 8) / 8)  # normalize reward, found to be useful

s = s_

ep_r += r

# update PPO

if (t + 1) % BATCH == 0 or t == EP_LEN - 1:

v_s_ = ppo.get_v(s_)

discounted_r = []

for r in buffer_r[::-1]:

v_s_ = r + GAMMA * v_s_

discounted_r.append(v_s_)

discounted_r.reverse()

bs, ba, br = np.vstack(buffer_s), np.vstack(buffer_a), np.array(discounted_r)[:, np.newaxis]

buffer_s, buffer_a, buffer_r = [], [], []

ppo.update(bs, ba, br)

if ep == 0:

all_ep_r.append(ep_r)

else:

all_ep_r.append(all_ep_r[-1] * 0.9 + ep_r * 0.1)

print("Episode: {}/{} | Episode Reward: {:.4f} | Running Time: {:.4f}"

.format(ep, EP_MAX, ep_r, time.time() - t0))

plt.ion()

plt.cla()

plt.title("PPO")
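The update step in the snippet above walks the reward buffer backwards, starting from the critic's value estimate for the last state, to build bootstrapped discounted returns. A minimal sketch of that computation in isolation is shown below; the function name `discounted_returns` and its parameter names are hypothetical, and the actual `GAMMA` value used by the tutorial is not shown in this excerpt.

```python
import numpy as np


def discounted_returns(rewards, bootstrap_value, gamma):
    """Compute bootstrapped discounted returns for one batch.

    Hypothetical helper: `rewards` plays the role of buffer_r,
    `bootstrap_value` the role of ppo.get_v(s_), `gamma` the role of GAMMA.
    """
    running = bootstrap_value
    returns = []
    # Iterate from the last reward to the first, accumulating r + gamma * G.
    for r in reversed(rewards):
        running = r + gamma * running
        returns.append(running)
    # Restore chronological order.
    returns.reverse()
    # Column vector of shape (len(rewards), 1), matching the [:, np.newaxis] step.
    return np.array(returns, dtype=np.float64)[:, np.newaxis]
```

For example, `discounted_returns([1.0, 1.0, 1.0], 0.0, 0.5)` yields a column vector with entries 1.75, 1.5 and 1.0. Seeding the recursion with the value estimate instead of zero lets a batch that ends mid-episode still account for reward expected after the cutoff.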

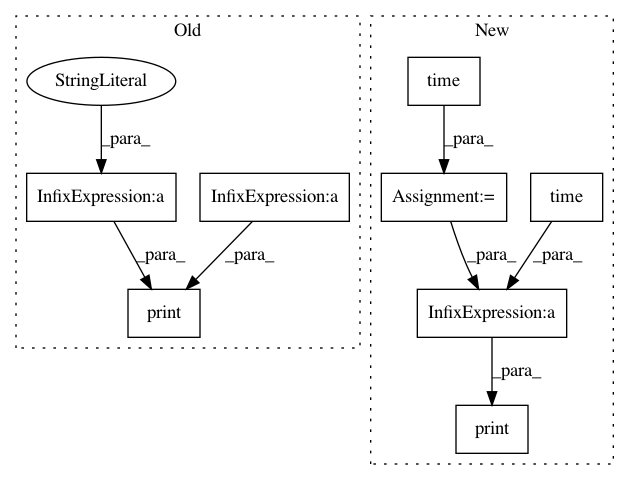

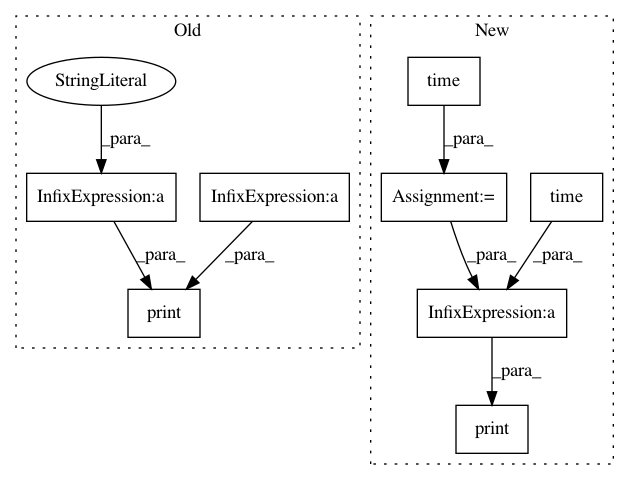

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 8

Instances

Project Name: tensorlayer/tensorlayer

Commit Name: 8b68349d43621619fe799ab4afc21da7f1fb2515

Time: 2019-06-10

Author: 34995488+Tokarev-TT-33@users.noreply.github.com

File Name: examples/reinforcement_learning/tutorial_PPO.py

Class Name:

Method Name:

Project Name: dmlc/gluon-nlp

Commit Name: 2bf8adc6913f17dfe8324b1b1a63df4d2b92b9a1

Time: 2018-08-13

Author: linhaibin.eric@gmail.com

File Name: scripts/language_model/large_word_language_model.py

Class Name:

Method Name: test

Project Name: tensorlayer/tensorlayer

Commit Name: 8b68349d43621619fe799ab4afc21da7f1fb2515

Time: 2019-06-10

Author: 34995488+Tokarev-TT-33@users.noreply.github.com

File Name: examples/reinforcement_learning/tutorial_DPPO.py

Class Name: Worker

Method Name: work