762078742de4aad07627f1add50b5c638a40f18b,skcuda/cublas.py,,,#,5404

Before Change

        int(C), ldc)
    cublasCheckStatus(status)

cublasDgeam.__doc__ = _GEAM_doc.substitute(precision="double precision",
                                           real="real",
                                           num_type="numpy.float64",
                                           alpha_data="np.float64(np.random.rand())",
                                           beta_data="np.float64(np.random.rand())",
                                           a_data_1="np.random.rand(2, 3).astype(np.float64)",
                                           b_data_1="np.random.rand(2, 3).astype(np.float64)",
                                           a_data_2="np.random.rand(2, 3).astype(np.float64)",
                                           b_data_2="np.random.rand(3, 2).astype(np.float64)",
                                           c_data_1="alpha*a+beta*b",
                                           c_data_2="alpha*a.T+beta*b",
                                           func="cublasDgeam")

if _cublas_version >= 5000:
    _libcublas.cublasCgeam.restype = int
    _libcublas.cublasCgeam.argtypes = [_types.handle,
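The `cublasDgeam.__doc__ = _GEAM_doc.substitute(...)` call above generates one docstring per precision from a single shared template by filling its `${...}` placeholders. The sketch below reproduces that mechanism with Python's `string.Template`, assuming `_GEAM_doc` is a `string.Template` (as its `Template(` constructor and `${...}` syntax suggest). `GEAM_DOC_SKETCH` is a hypothetical, heavily shortened stand-in for `_GEAM_doc`, and the single-precision values are illustrative assumptions, not copied from scikit-cuda.

```python
from string import Template

# Hypothetical, heavily shortened stand-in for skcuda's _GEAM_doc template.
GEAM_DOC_SKETCH = Template("""
Matrix-matrix addition/transposition (${precision} ${real}).

alpha : ${num_type}
    Constant by which to scale `A`.

>>> ${func}(h, ...)
""")

# One template, several precisions: only the placeholder values change.
# The cublasSgeam values are assumed for illustration.
variants = {
    "cublasSgeam": dict(precision="single precision", real="real",
                        num_type="numpy.float32", func="cublasSgeam"),
    "cublasDgeam": dict(precision="double precision", real="real",
                        num_type="numpy.float64", func="cublasDgeam"),
}

# substitute() raises KeyError if any placeholder is left unfilled.
docs = {name: GEAM_DOC_SKETCH.substitute(**values)
        for name, values in variants.items()}
print(docs["cublasDgeam"])
```

Using `substitute` rather than `safe_substitute` means a forgotten keyword (for example omitting `func`) fails loudly at import time instead of leaking a literal `${func}` into the rendered docstring.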

After Change

### BLAS-like extension routines ###

# SGEAM, DGEAM, CGEAM, ZGEAM

_GEAM_doc = Template(

Matrix-matrix addition/transposition (${precision} ${real}).

Computes the sum of two ${precision} ${real} scaled and possibly (conjugate)

transposed matrices.

Parameters

----------

handle : int

CUBLAS context

transa, transb : char

"t" if they are transposed, "c" if they are conjugate transposed,

"n" if otherwise.

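m : int

Number of rows in `C` (equal to the number of rows of `A` after applying `transa`).

n : int

Number of columns in `C` (equal to the number of columns of `B` after applying `transb`).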

alpha : ${num_type}

Constant by which to scale `A`.

A : ctypes.c_void_p

Pointer to first matrix operand (`A`).

lda : int

Leading dimension of `A`.

beta : ${num_type}

Constant by which to scale `B`.

B : ctypes.c_void_p

Pointer to second matrix operand (`B`).

ldb : int

Leading dimension of `B`.

C : ctypes.c_void_p

Pointer to result matrix (`C`).

ldc : int

Leading dimension of `C`.

Examples

--------

>>> import pycuda.autoinit

>>> import pycuda.gpuarray as gpuarray

>>> import numpy as np

>>> alpha = ${alpha_data}

>>> beta = ${beta_data}

>>> a = ${a_data_1}

>>> b = ${b_data_1}

>>> c = ${c_data_1}

>>> a_gpu = gpuarray.to_gpu(a)

>>> b_gpu = gpuarray.to_gpu(b)

>>> c_gpu = gpuarray.empty(c.shape, c.dtype)

>>> h = cublasCreate()

>>> ${func}(h, "n", "n", c.shape[0], c.shape[1], alpha, a_gpu.gpudata, a.shape[0], beta, b_gpu.gpudata, b.shape[0], c_gpu.gpudata, c.shape[0])

>>> np.allclose(c_gpu.get(), c)

True

>>> a = ${a_data_2}

>>> b = ${b_data_2}

>>> c = ${c_data_2}

>>> a_gpu = gpuarray.to_gpu(a.T.copy())

>>> b_gpu = gpuarray.to_gpu(b.T.copy())

>>> c_gpu = gpuarray.empty(c.T.shape, c.dtype)

>>> transa = "c" if np.iscomplexobj(a) else "t"

>>> ${func}(h, transa, "n", c.shape[0], c.shape[1], alpha, a_gpu.gpudata, a.shape[0], beta, b_gpu.gpudata, b.shape[0], c_gpu.gpudata, c.shape[0])

>>> np.allclose(c_gpu.get().T, c)

True

>>> cublasDestroy(h)

References

----------

`cublas<t>geam <http://docs.nvidia.com/cuda/cublas/#cublas-lt-t-gt-geam>`_

)

if _cublas_version >= 5000:
    _libcublas.cublasSgeam.restype = int
    _libcublas.cublasSgeam.argtypes = [_types.handle,
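The docstring does not spell out how `lda`, `ldb` and `ldc` are interpreted, which is why the second doctest uploads `a.T.copy()` rather than `a`. The following is a minimal CPU sketch of what `${func}` computes, C = alpha*op(A) + beta*op(B), assuming cuBLAS's column-major convention in which element (i, j) of a matrix with leading dimension `ld` sits at offset `i + j*ld`. The helpers `geam_reference`, `_view` and `_op` are hypothetical and written only for this illustration; they are not part of scikit-cuda or cuBLAS, and the sketch needs only NumPy, no GPU.

```python
import numpy as np


def _view(buf, rows, cols, ld):
    """Gather a rows x cols matrix stored column-major in the flat array buf.

    Element (i, j) lives at buf[i + j*ld], mirroring how cuBLAS addresses
    a matrix with leading dimension ld.
    """
    if ld < rows:
        raise ValueError("leading dimension must be >= number of stored rows")
    i = np.arange(rows)[:, None]
    j = np.arange(cols)[None, :]
    return buf[i + j * ld]


def _op(flag, buf, m, n, ld):
    """Return op(X) as an m x n array for flag "n", "t" or "c"."""
    flag = flag.lower()
    if flag == "n":
        return _view(buf, m, n, ld)           # X is stored as m x n
    if flag in ("t", "c"):
        x = _view(buf, n, m, ld).T            # X is stored as n x m
        return x.conj() if flag == "c" else x
    raise ValueError("trans flag must be 'n', 't' or 'c'")


def geam_reference(transa, transb, m, n, alpha, A, lda, beta, B, ldb, C, ldc):
    """CPU sketch of C = alpha*op(A) + beta*op(B) on column-major flat buffers."""
    result = alpha * _op(transa, A, m, n, lda) + beta * _op(transb, B, m, n, ldb)
    if ldc < m:
        raise ValueError("ldc must be >= m")
    i = np.arange(m)[:, None]
    j = np.arange(n)[None, :]
    C[i + j * ldc] = result                   # scatter back column-major
    return C


rng = np.random.default_rng(0)
alpha, beta = rng.random(), rng.random()

# Second doctest case: c = alpha*a.T + beta*b. a.ravel(order="F") holds the
# same element order as the doctest's a.T.copy(): a stored column-major, lda = 2.
a, b = rng.random((2, 3)), rng.random((3, 2))
C = geam_reference("t", "n", 3, 2, alpha, a.ravel(order="F"), 2,
                   beta, b.ravel(order="F"), 3, np.empty(6), 3)
print(np.allclose(C.reshape(3, 2, order="F"), alpha * a.T + beta * b))  # True

# First doctest case: "n"/"n" with row-major buffers. All three operands are
# addressed identically, so the element-wise sum still comes out right.
a1, b1 = rng.random((2, 3)), rng.random((2, 3))
C1 = geam_reference("n", "n", 2, 3, alpha, a1.ravel(), 2,
                    beta, b1.ravel(), 2, np.empty(6), 2)
print(np.allclose(C1.reshape(2, 3), alpha * a1 + beta * b1))  # True
```

The second check explains why the first doctest can pass row-major arrays with `a.shape[0]` as the leading dimension: with no transposition, a consistent but "wrong" addressing of all three buffers cancels out. Once `transa` is `"t"` or `"c"`, the operand must really be laid out column-major, hence the `.T.copy()` uploads.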

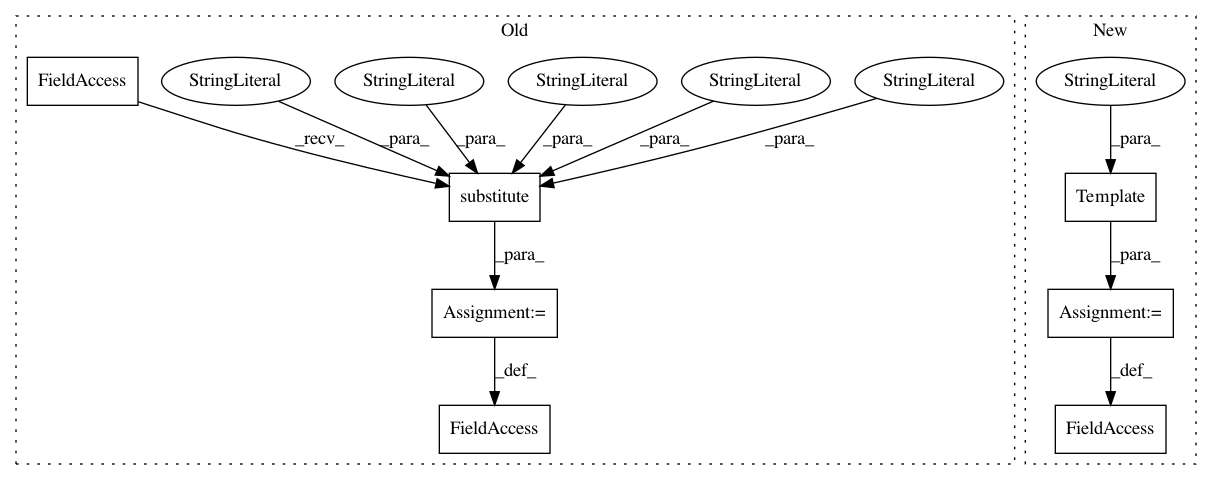

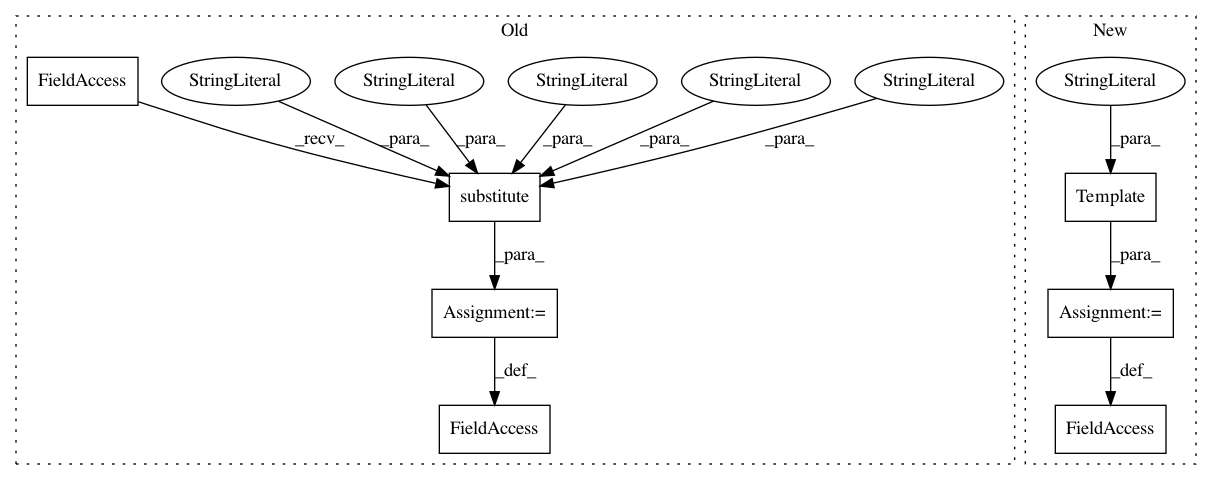

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 7

Instances

Project Name: lebedov/scikit-cuda

Commit Name: 762078742de4aad07627f1add50b5c638a40f18b

Time: 2019-07-08

Author: lev@columbia.edu

File Name: skcuda/cublas.py

Class Name:

Method Name:
