22425200b7220b2b410c9aa5ebc4c783f6cfe5df,lxmls/deep_learning/rnn.py,LSTM,__init__,#LSTM#,271

Before Change

    def __init__(self, W_e, n_hidd, n_tags):

        # Dimension of the embeddings
        n_emb = W_e.shape[0]

        # MODEL PARAMETERS
        W_x = np.random.uniform(size=(4*n_hidd, n_emb))   # RNN Input layer
        W_h = np.random.uniform(size=(4*n_hidd, n_hidd))  # RNN recurrent var
        W_c = np.random.uniform(size=(3*n_hidd, n_hidd))  # Second recurrent var
        W_y = np.random.uniform(size=(n_tags, n_hidd))    # Output layer

        # Cast to theano GPU-compatible type
        W_e = W_e.astype(theano.config.floatX)
        W_x = W_x.astype(theano.config.floatX)
        W_h = W_h.astype(theano.config.floatX)
        W_c = W_c.astype(theano.config.floatX)
        W_y = W_y.astype(theano.config.floatX)

        # Store as shared parameters
        _W_e = theano.shared(W_e, borrow=True)
        _W_x = theano.shared(W_x, borrow=True)
        _W_h = theano.shared(W_h, borrow=True)
        _W_c = theano.shared(W_c, borrow=True)
        _W_y = theano.shared(W_y, borrow=True)

        # Class variables
        self.n_hidd = n_hidd
        self.param = [_W_e, _W_x, _W_h, _W_c, _W_y]

    def _forward(self, _x, _h0=None, _c0=None):

After Change

        np.random.seed(seed)

        # MODEL PARAMETERS
        W_e = 0.01*np.random.uniform(size=(n_emb, n_words))  # Embedding layer
        W_x = np.random.uniform(size=(4*n_hidd, n_emb))      # RNN Input layer
        W_h = np.random.uniform(size=(4*n_hidd, n_hidd))     # RNN recurrent var
        W_c = np.random.uniform(size=(3*n_hidd, n_hidd))     # Second recurrent var
        W_y = np.random.uniform(size=(n_tags, n_hidd))       # Output layer

        # Cast to theano GPU-compatible type
        W_e = W_e.astype(theano.config.floatX)
        W_x = W_x.astype(theano.config.floatX)
        W_h = W_h.astype(theano.config.floatX)
        W_c = W_c.astype(theano.config.floatX)
        W_y = W_y.astype(theano.config.floatX)

        # Store as shared parameters
        _W_e = theano.shared(W_e, borrow=True)
        _W_x = theano.shared(W_x, borrow=True)
        _W_h = theano.shared(W_h, borrow=True)
        _W_c = theano.shared(W_c, borrow=True)
        _W_y = theano.shared(W_y, borrow=True)

        # Class variables
        self.n_hidd = n_hidd
        self.param = [_W_e, _W_x, _W_h, _W_c, _W_y]

    def _forward(self, _x, _h0=None, _c0=None):
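The change above has two visible effects: the embedding matrix `W_e` is now drawn inside the constructor instead of being passed in, and the random generator is seeded before any weights are sampled. A minimal NumPy-only sketch of that idea follows. The helper name `init_lstm_params`, its `dtype` argument, and the use of a local `np.random.RandomState` in place of the global `np.random.seed(seed)` call are assumptions made for illustration and are not part of the commit. The Theano shared-variable step is left out so the sketch runs without Theano.

```python
import numpy as np


def init_lstm_params(n_words, n_emb, n_hidd, n_tags, seed=0, dtype=np.float32):
    """Hypothetical helper mirroring the 'After Change' initialization."""
    # Assumption: a local generator is used here so the global NumPy random
    # state is not modified; the commit calls np.random.seed(seed) instead.
    rng = np.random.RandomState(seed)
    params = {
        "W_e": 0.01 * rng.uniform(size=(n_emb, n_words)),  # embedding layer
        "W_x": rng.uniform(size=(4 * n_hidd, n_emb)),      # input layer
        "W_h": rng.uniform(size=(4 * n_hidd, n_hidd)),     # recurrent weights
        "W_c": rng.uniform(size=(3 * n_hidd, n_hidd)),     # second recurrent weights
        "W_y": rng.uniform(size=(n_tags, n_hidd)),         # output layer
    }
    # Cast every matrix to one float type, standing in for theano.config.floatX.
    return {name: w.astype(dtype) for name, w in params.items()}


# Two calls with the same seed should yield identical parameters.
a = init_lstm_params(n_words=100, n_emb=8, n_hidd=4, n_tags=3, seed=42)
b = init_lstm_params(n_words=100, n_emb=8, n_hidd=4, n_tags=3, seed=42)
same = all(np.array_equal(a[k], b[k]) for k in a)
```

Here `same` is `True` because both calls start from the same seed and draw the matrices in the same order. Changing the draw order or the seed would change the sampled weights.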

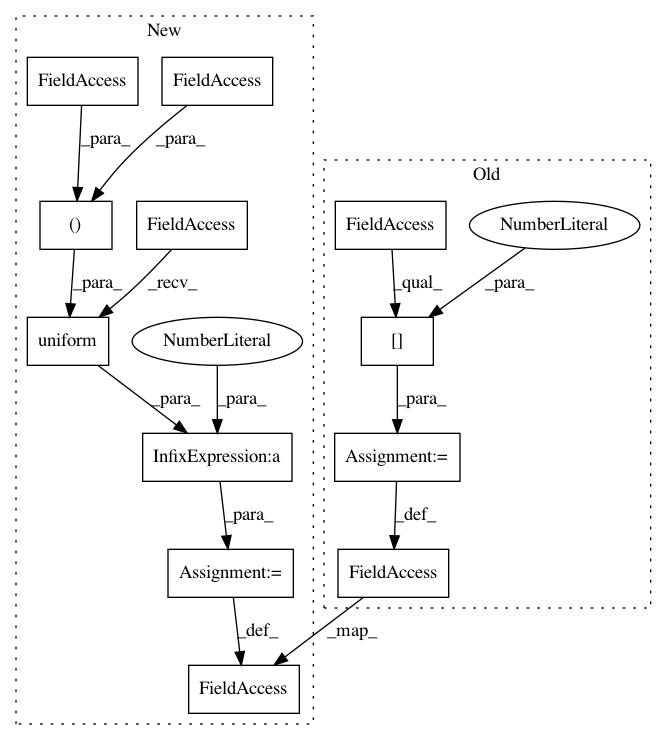

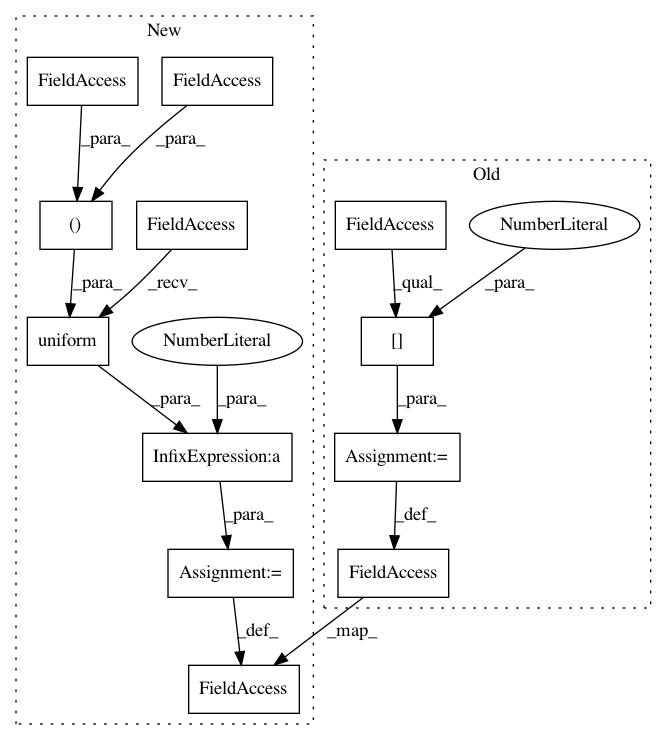

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 12

Instances

Project Name: LxMLS/lxmls-toolkit

Commit Name: 22425200b7220b2b410c9aa5ebc4c783f6cfe5df

Time: 2016-06-21

Author: ramon@astudillo.com

File Name: lxmls/deep_learning/rnn.py

Class Name: LSTM

Method Name: __init__

Project Name: LxMLS/lxmls-toolkit

Commit Name: 22425200b7220b2b410c9aa5ebc4c783f6cfe5df

Time: 2016-06-21

Author: ramon@astudillo.com

File Name: lxmls/deep_learning/rnn.py

Class Name: NumpyRNN

Method Name: __init__

Project Name: LxMLS/lxmls-toolkit

Commit Name: 22425200b7220b2b410c9aa5ebc4c783f6cfe5df

Time: 2016-06-21

Author: ramon@astudillo.com

File Name: lxmls/deep_learning/rnn.py

Class Name: RNN

Method Name: __init__