f98bd2ec9d4289939ff6661d5a9c43ee7e8996f7,models/shared_rnn.py,RNN,forward,#RNN#,195

Before Change

```python
if hidden_norms.data.max() > max_norm:
    logger.info(f"clipping {hidden_norms.max()} to {max_norm}")
    norm = hidden[hidden_norms > max_norm].norm(dim=-1)
    norm = norm.unsqueeze(-1)
    detached_norm = torch.autograd.Variable(norm.data,
                                            requires_grad=False)
    hidden[hidden_norms > max_norm] *= max_norm/detached_norm
```
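The before-change snippet rescales only the rows whose L2 norm exceeds `max_norm` and writes the result back into `hidden` through a boolean index. A minimal sketch of that in-place row clipping, assuming NumPy arrays stand in for the PyTorch `Variable`s (so there is no autograd detaching here) and using a hypothetical function name `clip_rows_inplace`:

```python
import numpy as np

def clip_rows_inplace(hidden, max_norm):
    """Rescale, in place, every row of `hidden` whose L2 norm exceeds max_norm.

    Hypothetical NumPy stand-in for the before-change PyTorch code.
    """
    hidden_norms = np.linalg.norm(hidden, axis=-1)  # one norm per row
    select = hidden_norms > max_norm                # rows that need clipping
    if select.any():
        # Shape (k, 1) so it broadcasts across each selected row.
        norm = hidden_norms[select][:, np.newaxis]
        # Boolean-index write-back: modifies `hidden` itself.
        hidden[select] *= max_norm / norm
    return hidden
```

Calling it on a float array such as `np.array([[3.0, 4.0], [0.3, 0.4]])` with `max_norm=1.0` shrinks the first row to unit norm and leaves the second row alone.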

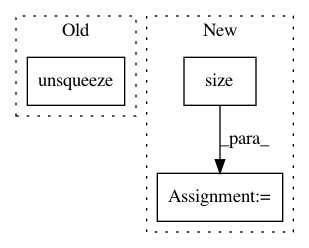

After Change

```python
clip_select = hidden_norms > max_norm
clip_norms = hidden_norms[clip_select]
mask = np.ones(hidden.size())
normalizer = max_norm/clip_norms
normalizer = normalizer[:, np.newaxis]
mask[clip_select] = normalizer
```
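The after-change snippet no longer writes into `hidden` directly. It builds a multiplicative mask instead: ones everywhere, and `max_norm / norm` on the rows picked out by `clip_select`. A sketch under stated assumptions: NumPy replaces PyTorch, the function name `clip_hidden_norms_mask` is hypothetical, and because the snippet stops once the mask is filled, applying the mask with an out-of-place multiply is an assumption here rather than something the record shows.

```python
import numpy as np

def clip_hidden_norms_mask(hidden, max_norm):
    """Return a copy of `hidden` whose rows have L2 norm at most max_norm.

    Hypothetical NumPy version of the mask construction in the after-change
    snippet; the final multiply is an assumption, not taken from the record.
    """
    hidden_norms = np.linalg.norm(hidden, axis=-1)
    clip_select = hidden_norms > max_norm
    clip_norms = hidden_norms[clip_select]

    mask = np.ones(hidden.shape)
    normalizer = max_norm / clip_norms
    normalizer = normalizer[:, np.newaxis]  # (k,) -> (k, 1)
    mask[clip_select] = normalizer          # broadcasts (k, 1) into (k, d)

    # Out-of-place multiply: the input array is not modified.
    return hidden * mask
```

Since the result is a new array, the caller's `hidden` is left untouched. That is the main behavioural difference from the in-place indexed multiply in the before-change code.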

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: carpedm20/ENAS-pytorch

Commit Name: f98bd2ec9d4289939ff6661d5a9c43ee7e8996f7

Time: 2018-03-11

Author: dukebw@mcmaster.ca

File Name: models/shared_rnn.py

Class Name: RNN

Method Name: forward

Project Name: SenticNet/conv-emotion

Commit Name: 87d57a3d34a1eef2c6ad5519741710e3321f136c

Time: 2019-03-19

Author: 40890991+soujanyaporia@users.noreply.github.com

File Name: DialogueRNN/model.py

Class Name: BiE2EModel

Method Name: forward

Project Name: jadore801120/attention-is-all-you-need-pytorch

Commit Name: 0b0eabbfd972c9e3f6323bff9d39ac5fc3ba9cc7

Time: 2018-08-23

Author: yhhuang@nlg.csie.ntu.edu.tw

File Name: transformer/Translator.py

Class Name: Translator

Method Name: translate_batch