99abcc6e9b57f441999ce10dbc31ca1bed79c356,ch15/04_train_ppo.py,,,#,55

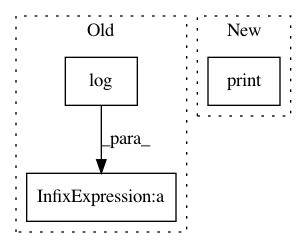

Before Change

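```python
# Probability ratio pi_new / pi_old, scaled by the advantage
surr_obj_v = adv_v * torch.exp(logprob_pi_v - logprob_old_pi_v)
# Note: this clamps adv * ratio; the PPO paper clips the ratio itself
clipped_surr_v = torch.clamp(surr_obj_v, 1.0 - PPO_EPS, 1.0 + PPO_EPS)
# Pessimistic (minimum) surrogate, negated so the optimizer can minimize it
loss_policy_v = -torch.min(surr_obj_v, clipped_surr_v).mean()
# Gaussian entropy is (log(2*pi*var) + 1) / 2; adding its negative rewards exploration
entropy_loss_v = ENTROPY_BETA * (-(torch.log(2*math.pi*var_v) + 1)/2).mean()
loss_v = loss_policy_v + entropy_loss_v + loss_value_v
loss_v.backward()
optimizer.step()
```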
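The policy and entropy terms above can be checked on plain numbers. The sketch below uses pure Python rather than PyTorch so it runs without a framework or GPU. It follows the clipped surrogate as written in the PPO paper, where the clip is applied to the probability ratio r = exp(log pi_new - log pi_old) and the advantage then multiplies both the raw and the clipped ratio. The helpers `clipped_surrogate_loss`, `gaussian_entropy` and `entropy_loss` are hypothetical names for illustration, not functions from the repository.

```python
import math
from typing import Sequence


def clipped_surrogate_loss(adv: Sequence[float],
                           logp_new: Sequence[float],
                           logp_old: Sequence[float],
                           eps: float = 0.2) -> float:
    """Negated mean of the PPO clipped surrogate objective over a batch."""
    terms = []
    for a, lp_new, lp_old in zip(adv, logp_new, logp_old):
        ratio = math.exp(lp_new - lp_old)  # pi_new(a|s) / pi_old(a|s)
        clipped_ratio = min(max(ratio, 1.0 - eps), 1.0 + eps)
        # Clip the ratio, then scale both versions by the advantage and
        # keep the smaller (pessimistic) one.
        terms.append(min(a * ratio, a * clipped_ratio))
    return -sum(terms) / len(terms)


def gaussian_entropy(var: float) -> float:
    """Differential entropy of a 1-D Gaussian: (log(2*pi*var) + 1) / 2."""
    return 0.5 * (math.log(2 * math.pi * var) + 1)


def entropy_loss(variances: Sequence[float], beta: float) -> float:
    """beta times the negated mean entropy, mirroring the entropy_loss_v line."""
    mean_entropy = sum(gaussian_entropy(v) for v in variances) / len(variances)
    return beta * -mean_entropy
```

With an advantage of 2 and a ratio of exactly 1, `clipped_surrogate_loss([2.0], [0.0], [0.0])` gives -2.0, because the ratio lies inside the clip range. Clamping the product adv * ratio instead, as the snippet above does, caps that term at 1 + eps = 1.2 regardless of the ratio.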

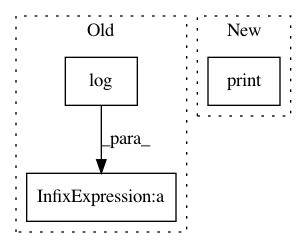

After Change

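```python
# Move the actor and critic networks to the GPU
net_act.cuda()
net_crt.cuda()
print(net_act)
print(net_crt)
# TensorBoard writer; `comment` is appended to the run's log directory name
writer = SummaryWriter(comment="-ppo_" + args.name)
agent = model.AgentA2C(net_act, cuda=args.cuda)
# Yields n-step transitions: first state, action, discounted reward, last state
exp_source = ptan.experience.ExperienceSourceFirstLast(envs, agent, GAMMA, steps_count=REWARD_STEPS)
```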
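`ExperienceSourceFirstLast` is created with `GAMMA` and `steps_count=REWARD_STEPS`. As its name suggests, each item it yields keeps the first state and action of an n-step window, the discounted sum of the rewards inside that window, and the last state (none if the episode ended inside the window). The following is a hypothetical pure-Python re-implementation of that idea for one finished episode, written only to illustrate the arithmetic. It is not ptan's code, and `FirstLast` and `first_last_transitions` are illustrative names.

```python
from typing import List, NamedTuple, Optional, Sequence


class FirstLast(NamedTuple):
    """One n-step transition: first state/action, discounted reward, last state."""
    state: int
    action: int
    reward: float
    last_state: Optional[int]  # None when the episode ends within n steps


def first_last_transitions(states: Sequence[int], actions: Sequence[int],
                           rewards: Sequence[float], gamma: float,
                           n: int) -> List[FirstLast]:
    """Build n-step transitions from one complete, terminated episode.

    states[t], actions[t] and rewards[t] describe step t; the episode is
    assumed to reach a terminal state right after the last step.
    """
    T = len(rewards)
    out = []
    for t in range(T):
        k = min(n, T - t)  # fewer than n steps remain near the end
        ret = sum(gamma ** i * rewards[t + i] for i in range(k))
        last = states[t + n] if t + n < T else None
        out.append(FirstLast(states[t], actions[t], ret, last))
    return out
```

A critic target for step t is then commonly formed as reward + GAMMA**n * V(last_state), dropping the bootstrap term when `last_state` is None.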

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 3

Instances

| Project Name | Commit Name | Time | Author | File Name |
|---|---|---|---|---|
| PacktPublishing/Deep-Reinforcement-Learning-Hands-On | 99abcc6e9b57f441999ce10dbc31ca1bed79c356 | 2018-02-10 | max.lapan@gmail.com | ch15/04_train_ppo.py |
| hunkim/PyTorchZeroToAll | e6acec63de2d3e6dff20422e8b07837d33a7e670 | 2019-06-21 | hameedabdulrashid@gmail.com | 09_01_softmax_loss.py |
| PacktPublishing/Deep-Reinforcement-Learning-Hands-On | 4296a765125fff6491892a1bb70fb32ac516dae6 | 2018-02-10 | max.lapan@gmail.com | ch15/01_train_a2c.py |
| jostmey/rwa | 66de7491bd7e16a072e2d1302df1f88c277dae2e | 2017-04-04 | jostmey@gmail.com | adding_problem_100/rwa_model/train.py |

Class Name and Method Name are empty for all four instances.