835ebfd174f6930ecce3a687bc6de852a4910f98,contents/12_Proximal_Policy_Optimization/simply_PPO.py,PPO,__init__,#PPO#,37

Before Change

    surr = ratio * self.tfadv
    if METHOD["name"] == "kl_pen":
        self.tflam = tf.placeholder(tf.float32, None, "lambda")
        with tf.variable_scope("loss"):
            kl = tf.stop_gradient(kl_divergence(oldpi, pi))
            self.kl_mean = tf.reduce_mean(kl)
            self.aloss = -(tf.reduce_mean(surr - self.tflam * kl))
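The `aloss` line above is the negated mean of the surrogate term minus a KL penalty weighted by `lambda`. As a rough illustration only, the same arithmetic can be sketched in plain Python without TensorFlow. The helper names `gaussian_kl` and `kl_penalty_loss` are hypothetical and do not appear in the commit. The sketch assumes 1-D Gaussian policies and takes the probability ratios as given, since the snippet does not show how `ratio` is built. It also does not model `tf.stop_gradient`, because nothing here is differentiated.

```python
import math


def gaussian_kl(mu_old, sigma_old, mu_new, sigma_new):
    """KL(old || new) for two 1-D Gaussians (assumed policy family)."""
    return (
        math.log(sigma_new / sigma_old)
        + (sigma_old ** 2 + (mu_old - mu_new) ** 2) / (2.0 * sigma_new ** 2)
        - 0.5
    )


def kl_penalty_loss(ratios, advantages, kls, lam):
    """Return -(mean(ratio * advantage - lam * kl)), mirroring the aloss line."""
    terms = [r * a - lam * k for r, a, k in zip(ratios, advantages, kls)]
    return -sum(terms) / len(terms)


# Made-up numbers, purely for illustration.
kls = [gaussian_kl(0.0, 1.0, 0.0, 1.0), gaussian_kl(0.0, 1.0, 1.0, 1.0)]
loss = kl_penalty_loss([1.0, 1.0], [2.0, 4.0], kls, lam=0.5)
```

A larger `lam` makes the same KL divergence cost more, which pulls the minimizer toward policies that stay close to the old one.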

After Change

    # actor
    pi, pi_params = self._build_anet("pi", trainable=True)
    oldpi, oldpi_params = self._build_anet("oldpi", trainable=False)
    with tf.variable_scope("sample_action"):
        self.sample_op = tf.squeeze(pi.sample(1), axis=0)  # choosing action
    with tf.variable_scope("update_oldpi"):
        self.update_oldpi_op = [oldp.assign(p) for p, oldp in zip(pi_params, oldpi_params)]
    self.tfa = tf.placeholder(tf.float32, [None, A_DIM], "action")
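The `update_oldpi_op` line pairs each current-policy parameter with its old-policy counterpart and copies the value across. A minimal plain-Python sketch of that pairing follows. The `Var` class and `sync_old_policy` function are hypothetical stand-ins, not TensorFlow APIs. Unlike the graph-mode original, where the assign ops only take effect when a session runs them, this sketch copies values immediately.

```python
class Var:
    """Hypothetical stand-in for a framework variable; not a TensorFlow class."""

    def __init__(self, value):
        self.value = value

    def assign(self, other):
        # Copy the other variable's current value into this one.
        self.value = other.value
        return self


def sync_old_policy(pi_params, oldpi_params):
    """Overwrite each old-policy parameter with its current-policy counterpart."""
    return [oldp.assign(p) for p, oldp in zip(pi_params, oldpi_params)]


# Made-up parameter values, purely for illustration.
pi_params = [Var(0.3), Var(-1.2)]
oldpi_params = [Var(0.0), Var(0.0)]
sync_old_policy(pi_params, oldpi_params)
```

Because `zip` pairs parameters by position, both lists need to hold the variables in the same order. The snippet relies on both networks being built by the same `_build_anet` method for that.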

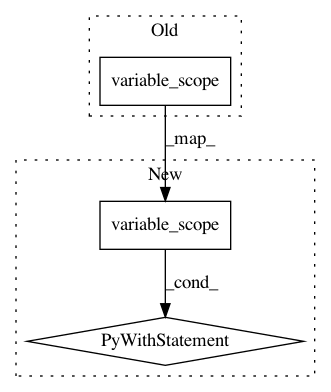

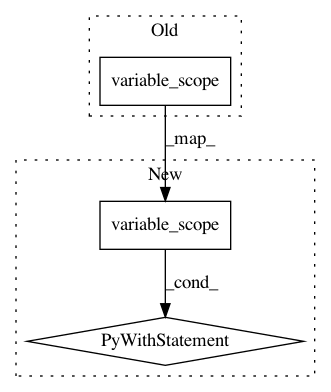

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: MorvanZhou/Reinforcement-learning-with-tensorflow

Commit Name: 835ebfd174f6930ecce3a687bc6de852a4910f98

Time: 2017-08-13

Author: morvanzhou@gmail.com

File Name: contents/12_Proximal_Policy_Optimization/simply_PPO.py

Class Name: PPO

Method Name: __init__

Project Name: asyml/texar

Commit Name: ebec86aebcbe1a0044cb0991c964f95eae3f752c

Time: 2019-11-16

Author: pengzhi.gao@petuum.com

File Name: texar/tf/modules/decoders/dynamic_decode.py

Class Name:

Method Name: dynamic_decode

Project Name: MorvanZhou/Reinforcement-learning-with-tensorflow

Commit Name: 1a292afa66250814e3fa3fab26e4f7e5140baf31

Time: 2017-08-10

Author: morvanzhou@gmail.com

File Name: contents/12_Proximal_Policy_Optimization/simply_PPO.py

Class Name: PPO

Method Name: __init__