e7f739f3ad1a377bfbcd0b92c55c2b9d947764fc,algo/a2c_acktr.py,A2C_ACKTR,update,#A2C_ACKTR#,34

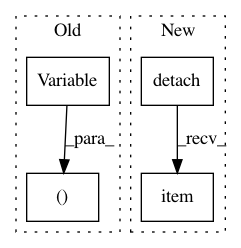

Before Change

    advantages = Variable(rollouts.returns[:-1]) - values
    value_loss = advantages.pow(2).mean()

    action_loss = -(Variable(advantages.data) * action_log_probs).mean()

    if self.acktr and self.optimizer.steps % self.optimizer.Ts == 0:
        # Sampled fisher, see Martens 2014
        self.actor_critic.zero_grad()
        pg_fisher_loss = -action_log_probs.mean()

        value_noise = Variable(torch.randn(values.size()))
        if values.is_cuda:
            value_noise = value_noise.cuda()

        sample_values = values + value_noise
        vf_fisher_loss = -(
            values - Variable(sample_values.data)).pow(2).mean()

        fisher_loss = pg_fisher_loss + vf_fisher_loss
        self.optimizer.acc_stats = True
        fisher_loss.backward(retain_graph=True)
        self.optimizer.acc_stats = False

    self.optimizer.zero_grad()
    (value_loss * self.value_loss_coef + action_loss -
     dist_entropy * self.entropy_coef).backward()

    if self.acktr == False:
        nn.utils.clip_grad_norm(self.actor_critic.parameters(),
                                self.max_grad_norm)

    self.optimizer.step()

    return value_loss, action_loss, dist_entropy

After Change

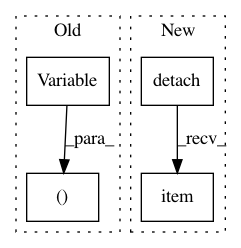

    advantages = rollouts.returns[:-1] - values
    value_loss = advantages.pow(2).mean()

    action_loss = -(advantages.detach() * action_log_probs).mean()

    if self.acktr and self.optimizer.steps % self.optimizer.Ts == 0:
        # Sampled fisher, see Martens 2014
        self.actor_critic.zero_grad()
        pg_fisher_loss = -action_log_probs.mean()

        value_noise = torch.randn(values.size())
        if values.is_cuda:
            value_noise = value_noise.cuda()

        sample_values = values + value_noise
        vf_fisher_loss = -(values - sample_values.detach()).pow(2).mean()

        fisher_loss = pg_fisher_loss + vf_fisher_loss
        self.optimizer.acc_stats = True
        fisher_loss.backward(retain_graph=True)
        self.optimizer.acc_stats = False

    self.optimizer.zero_grad()
    (value_loss * self.value_loss_coef + action_loss -
     dist_entropy * self.entropy_coef).backward()

    if self.acktr == False:
        nn.utils.clip_grad_norm_(self.actor_critic.parameters(),
                                 self.max_grad_norm)

    self.optimizer.step()

    return value_loss.item(), action_loss.item(), dist_entropy.item()
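
The arithmetic behind the update step can be illustrated without PyTorch. The block below is a minimal pure-Python sketch, not the repository's code: the function names `a2c_losses` and `clip_by_global_norm` and the `eps` default are hypothetical, chosen for illustration. It computes the value and action losses from plain lists, then shows the kind of global-norm rescaling that gradient clipping with `max_grad_norm` performs. It carries no autograd, so it cannot show what `.detach()` does; it only treats the advantage as a constant weight, which is the effect `.detach()` has in the action loss.

```python
import math


def a2c_losses(returns, values, log_probs):
    """Return (value_loss, action_loss) for equally sized lists of floats."""
    advantages = [r - v for r, v in zip(returns, values)]
    n = len(advantages)
    # Mean squared advantage, as in advantages.pow(2).mean().
    value_loss = sum(a * a for a in advantages) / n
    # The advantage is a plain number here, i.e. a constant weight on the
    # log-probability (assumption: this mirrors the detached advantage).
    action_loss = -sum(a * lp for a, lp in zip(advantages, log_probs)) / n
    return value_loss, action_loss


def clip_by_global_norm(grads, max_norm, eps=1e-6):
    """Scale a flat list of gradient values so its L2 norm is at most max_norm.

    Returns the (possibly rescaled) gradients and the norm before clipping.
    """
    total_norm = math.sqrt(sum(g * g for g in grads))
    # Never scale up: gradients already within the bound are left unchanged.
    scale = min(1.0, max_norm / (total_norm + eps))
    return [g * scale for g in grads], total_norm


if __name__ == "__main__":
    v_loss, a_loss = a2c_losses([1.0, 2.0], [0.5, 2.5], [-1.0, -2.0])
    print(v_loss, a_loss)
    clipped, norm_before = clip_by_global_norm([3.0, 4.0], max_norm=0.5)
    print(clipped, norm_before)
```

In the recorded change, clipping is applied only when `self.acktr` is false, and the returned losses are converted to Python numbers with `.item()`, which is why plain floats are a reasonable stand-in here.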

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: ikostrikov/pytorch-a2c-ppo-acktr

Commit Name: e7f739f3ad1a377bfbcd0b92c55c2b9d947764fc

Time: 2018-04-24

Author: ikostrikov@gmail.com

File Name: algo/a2c_acktr.py

Class Name: A2C_ACKTR

Method Name: update

Project Name: activatedgeek/LeNet-5

Commit Name: b9d7f39a752d23a7de60ea6d679812c401fab57e

Time: 2019-01-01

Author: 1sanyamkapoor@gmail.com

File Name: run.py

Class Name:

Method Name: train

Project Name: activatedgeek/LeNet-5

Commit Name: b9d7f39a752d23a7de60ea6d679812c401fab57e

Time: 2019-01-01

Author: 1sanyamkapoor@gmail.com

File Name: run.py

Class Name:

Method Name: test