fd723d719ddb49b66a5084215343de763a40083d,trainer.py,Trainer,validate,#Trainer#,247

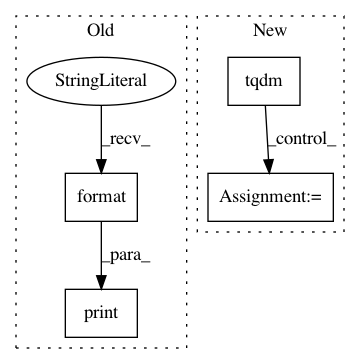

Before Change

        # print to screen
        if i % self.print_freq == 0:
            print(
                "Valid: [{0}/{1}]\t"
                "Time: {batch_time.val:.3f} ({batch_time.avg:.3f})\t"
                "Valid Loss: {loss.val:.4f} ({loss.avg:.4f})\t"
                "Valid Acc: {acc.val:.3f} ({acc.avg:.3f})".format(
                    i, len(self.valid_loader),
                    batch_time=batch_time, loss=losses, acc=accs)
            )

    print("[*] Valid Acc: {acc.avg:.3f}".format(acc=accs))

    # log to tensorboard
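
The format strings above read `.val` and `.avg` from `batch_time`, `losses`, and `accs`, which the After Change code creates with `AverageMeter()`. The record does not show that class, so the following is only a minimal sketch of what such a meter might look like, assuming it keeps the latest value and a count-weighted running mean. The `sum` and `count` attributes and the `reset` method are assumptions, not taken from the commit.

```python
class AverageMeter:
    """Hypothetical sketch: tracks the latest value and a weighted running average."""

    def __init__(self):
        self.reset()

    def reset(self):
        # Clear all statistics.
        self.val = 0.0    # most recent value passed to update()
        self.sum = 0.0    # weighted sum of all values seen so far
        self.count = 0    # total weight (e.g. number of samples)
        self.avg = 0.0    # sum / count

    def update(self, val, n=1):
        # Record a value observed over n samples and refresh the mean.
        self.val = val
        self.sum += val * n
        self.count += n
        self.avg = self.sum / self.count


# Usage mirroring the snippet: two batches of different sizes.
losses = AverageMeter()
losses.update(0.5, n=32)
losses.update(0.3, n=16)
print("Valid Loss: {loss.val:.4f} ({loss.avg:.4f})".format(loss=losses))
```

Weighting by batch size keeps the average correct when the last batch is smaller than the others, which fits the `x.size()[0]` argument passed to `update` in the After Change code.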

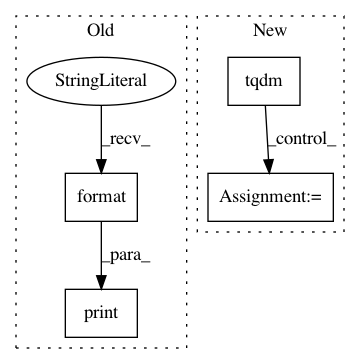

After Change

    accs = AverageMeter()

    tic = time.time()
    with tqdm(total=self.num_valid) as pbar:
        for i, (x, y) in enumerate(self.valid_loader):
            x = Variable(x, volatile=True)
            y = Variable(y, volatile=True)

            self.batch_size = x.shape[0]
            self.reset()

            # initialize location vector
            l_t = torch.Tensor(self.batch_size, 2).uniform_(-1, 1)
            l_t = Variable(l_t)

            # extract the glimpses
            log_pi = 0.
            for t in range(self.num_glimpses - 1):
                # forward pass through model
                self.h_t, mu, l_t, p = self.model(x, l_t, self.h_t)

                # accumulate log of policy
                log_pi += p

            # last iteration
            self.h_t, mu, l_t, p, b_t, log_probas = self.model(
                x, l_t, self.h_t, last=True
            )
            log_pi += p

            # calculate reward
            predicted = torch.max(log_probas, 1)[1]
            R = (predicted == y).float()

            # compute losses for differentiable modules
            loss_action = F.nll_loss(log_probas, y)
            loss_baseline = F.mse_loss(b_t, R)

            # compute reinforce loss
            adjusted_reward = R - b_t
            log_pi = log_pi / self.num_glimpses
            loss_reinforce = torch.mean(-log_pi*adjusted_reward)

            # sum up into a hybrid loss
            loss = loss_action + loss_baseline + loss_reinforce

            # compute accuracy
            acc = 100 * (R.sum() / len(y))

            # store
            losses.update(loss.data[0], x.size()[0])
            accs.update(acc.data[0], x.size()[0])

            # measure elapsed time
            toc = time.time()
            batch_time.update(toc-tic)

            pbar.set_description(
                (
                    "{:.1f}s - valid loss: {:.3f} - valid acc: {:.3f}".format(
                        (toc-tic), loss.data[0], acc.data[0])
                )
            )
            pbar.update(self.batch_size)

    # log to tensorboard
    if self.use_tensorboard:
        log_value("val_loss", losses.avg, epoch)
        log_value("val_acc", accs.avg, epoch)
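
The hybrid loss in the After Change code adds three terms: a classification loss, a baseline regression loss, and a REINFORCE term weighted by the baseline-adjusted reward. The following is a rough pure-Python sketch of that arithmetic on plain lists, so the terms can be checked by hand. It is not the commit's code. The function name `hybrid_loss` and its list-based arguments are hypothetical, `log_pi` is assumed to be already averaged over glimpses, and mean reduction is assumed for every term (the default for `F.nll_loss` and `F.mse_loss`).

```python
import math


def hybrid_loss(log_probas, targets, baselines, log_pi):
    """Hypothetical helper: per-batch hybrid loss on plain Python lists.

    log_probas: per-sample lists of class log-probabilities
    targets:    per-sample integer class labels
    baselines:  per-sample baseline predictions (b_t in the snippet)
    log_pi:     per-sample policy log-likelihood, averaged over glimpses
    """
    n = len(targets)

    # Reward is 1 when the arg-max class equals the label, else 0.
    predicted = [max(range(len(row)), key=row.__getitem__) for row in log_probas]
    rewards = [1.0 if p == y else 0.0 for p, y in zip(predicted, targets)]

    # Negative log-likelihood of the true class, averaged over the batch.
    loss_action = -sum(row[y] for row, y in zip(log_probas, targets)) / n

    # Mean squared error between baseline and reward.
    loss_baseline = sum((b - r) ** 2 for b, r in zip(baselines, rewards)) / n

    # REINFORCE term: -log_pi * (R - b), averaged over the batch.
    loss_reinforce = sum(
        -lp * (r - b) for lp, r, b in zip(log_pi, rewards, baselines)
    ) / n

    loss = loss_action + loss_baseline + loss_reinforce
    acc = 100.0 * sum(rewards) / n
    return loss, acc, rewards


# Two samples, two classes; the first is classified correctly, the second is not.
log_probas = [[math.log(0.9), math.log(0.1)], [math.log(0.4), math.log(0.6)]]
loss, acc, rewards = hybrid_loss(
    log_probas, targets=[0, 0], baselines=[0.5, 0.5], log_pi=[-1.0, -2.0]
)
print("loss: {:.4f} - acc: {:.1f}".format(loss, acc))
```

In this toy batch the baseline and REINFORCE terms are 0.25 and -0.25, so they cancel and the total equals the classification term alone.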

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: kevinzakka/recurrent-visual-attention

Commit Name: fd723d719ddb49b66a5084215343de763a40083d

Time: 2018-01-23

Author: kevinarmandzakka@gmail.com

File Name: trainer.py

Class Name: Trainer

Method Name: validate

Project Name: kevinzakka/recurrent-visual-attention

Commit Name: fd723d719ddb49b66a5084215343de763a40083d

Time: 2018-01-23

Author: kevinarmandzakka@gmail.com

File Name: trainer.py

Class Name: Trainer

Method Name: train_one_epoch

Project Name: zo7/deconvfaces

Commit Name: 41ef0bf4ece3daf13a02b14c79eb7ddebb42bc0c

Time: 2016-09-04

Author: zo7.flynn@gmail.com

File Name: facegen/generate.py

Class Name:

Method Name: generate_from_yaml