ecd656578d31171683e7928477132e75348317d1,pyprob/model.py,Model,posterior,#Model#,87

Before Change

len_str_num_traces = len(str(num_traces))
print("Time spent | Time remain.| Progress | {} | Accepted|Smp reuse| Traces/sec".format("Trace".ljust(len_str_num_traces * 2 + 1)))
prev_duration = 0
for i in range(num_traces):
    if util._verbosity > 1:
        duration = time.time() - time_start
        if (duration - prev_duration > util._print_refresh_rate) or (i == num_traces - 1):
            prev_duration = duration
            traces_per_second = (i + 1) / duration
            print("{} | {} | {} | {}/{} | {} | {} | {:,.2f} ".format(util.days_hours_mins_secs_str(duration), util.days_hours_mins_secs_str((num_traces - i) / traces_per_second), util.progress_bar(i+1, num_traces), str(i+1).rjust(len_str_num_traces), num_traces, "{:,.2f}%".format(100 * (traces_accepted / (i + 1))).rjust(7), "{:,.2f}%".format(100 * samples_reused / max(1, samples_all)).rjust(7), traces_per_second), end="\r")
            sys.stdout.flush()
    candidate_trace = next(self._trace_generator(trace_mode=TraceMode.POSTERIOR, inference_engine=inference_engine, metropolis_hastings_trace=current_trace, observe=observe, *args, **kwargs))
    log_acceptance_ratio = math.log(current_trace.length_controlled) - math.log(candidate_trace.length_controlled) + candidate_trace.log_prob_observed - current_trace.log_prob_observed
    for variable in candidate_trace.variables_controlled:
        if variable.reused:
            log_acceptance_ratio += torch.sum(variable.log_prob)
            log_acceptance_ratio -= torch.sum(current_trace.variables_dict_address[variable.address].log_prob)
            samples_reused += 1
    samples_all += candidate_trace.length_controlled
    if state._metropolis_hastings_site_transition_log_prob is None:
        warnings.warn("Trace did not hit the Metropolis Hastings site, ensure that the model is deterministic except pyprob.sample calls")
    else:
        log_acceptance_ratio += torch.sum(state._metropolis_hastings_site_transition_log_prob)
    # print(log_acceptance_ratio)
    if math.log(random.random()) < float(log_acceptance_ratio):
        traces_accepted += 1
        current_trace = candidate_trace
    # do thinning
    if i % thinning_steps == 0:
        posterior.add(map_func(current_trace))
if util._verbosity > 1:
    print()
posterior.finalize()

After Change

            sys.stdout.flush()
    candidate_trace = next(self._trace_generator(trace_mode=TraceMode.POSTERIOR, inference_engine=inference_engine, metropolis_hastings_trace=current_trace, observe=observe, *args, **kwargs))
    if filter is not None:
        if not filter(candidate_trace):
            continue
    log_acceptance_ratio = math.log(current_trace.length_controlled) - math.log(candidate_trace.length_controlled) + candidate_trace.log_prob_observed - current_trace.log_prob_observed
    for variable in candidate_trace.variables_controlled:
        if variable.reused:
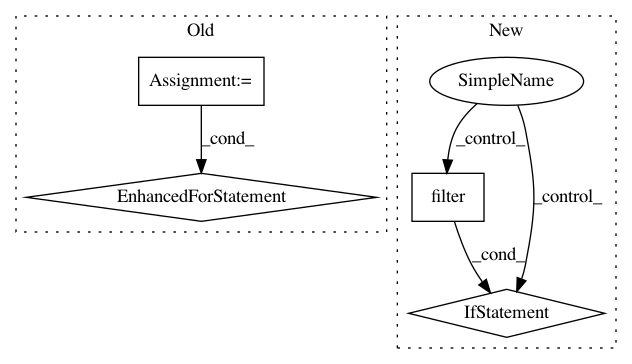

            log_acceptance_ratio += torch.sum(variable.log_prob)

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: pyprob/pyprob

Commit Name: ecd656578d31171683e7928477132e75348317d1

Time: 2020-08-06

Author: atilimgunes.baydin@gmail.com

File Name: pyprob/model.py

Class Name: Model

Method Name: posterior

Project Name: pyprob/pyprob

Commit Name: ecd656578d31171683e7928477132e75348317d1

Time: 2020-08-06

Author: atilimgunes.baydin@gmail.com

File Name: pyprob/model.py

Class Name: Model

Method Name: _traces

Project Name: snorkel-team/snorkel

Commit Name: 90fa42738c0c76a13cfe7d9dfb439662b4e961d6

Time: 2016-08-22

Author: stephenhbach@gmail.com

File Name: snorkel/viewer.py

Class Name: Viewer

Method Name: save_labels
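Both pyprob fragments above revolve around a Metropolis-Hastings accept/reject step evaluated in log space, followed by thinning. The After Change version adds an optional `filter` predicate that discards a candidate trace before its acceptance ratio is computed. A minimal, self-contained sketch of that control flow on a one-dimensional toy target follows. It is not pyprob code: `mh_chain`, `log_target`, `filter_fn`, `step_size`, and `log_std_normal` are hypothetical names. The sketch also assumes a symmetric Gaussian random-walk proposal, so no proposal correction is needed. pyprob's ratio additionally carries the trace-length term, the reused-variable log-probability terms, and the Metropolis-Hastings site transition term shown in the fragments, and the sketch omits all of them.

```python
import math
import random
from typing import Callable, List, Optional, Tuple


def mh_chain(
    log_target: Callable[[float], float],
    x0: float,
    num_steps: int,
    step_size: float = 0.5,
    thinning_steps: int = 1,
    filter_fn: Optional[Callable[[float], bool]] = None,
    seed: int = 0,
) -> Tuple[List[float], int]:
    """Hypothetical random-walk Metropolis-Hastings sampler (not a pyprob API).

    Returns the thinned samples and the number of accepted proposals.
    When filter_fn is given, x0 should satisfy it.
    """
    rng = random.Random(seed)
    current = x0
    current_log_p = log_target(current)
    samples: List[float] = []
    accepted = 0
    for i in range(num_steps):
        # Symmetric Gaussian proposal: q(x'|x) == q(x|x'), so the proposal terms cancel.
        candidate = current + rng.gauss(0.0, step_size)
        # Like the After Change fragment: a filtered candidate skips the rest of
        # the iteration, including the thinning step, so no sample is recorded.
        if filter_fn is not None and not filter_fn(candidate):
            continue
        candidate_log_p = log_target(candidate)
        log_acceptance_ratio = candidate_log_p - current_log_p
        # Accept with probability min(1, exp(log_acceptance_ratio)).
        # 1 - random() lies in (0, 1], so math.log never receives 0.
        if math.log(1.0 - rng.random()) < log_acceptance_ratio:
            accepted += 1
            current, current_log_p = candidate, candidate_log_p
        # Thinning: record the current state on every thinning_steps-th iteration.
        if i % thinning_steps == 0:
            samples.append(current)
    return samples, accepted


def log_std_normal(x: float) -> float:
    # Unnormalized log density of N(0, 1).
    return -0.5 * x * x


# Usage: an unfiltered, thinned chain, then a chain whose filter discards x <= 0.
samples, accepted = mh_chain(log_std_normal, x0=0.0, num_steps=1000, thinning_steps=10)
positive, _ = mh_chain(log_std_normal, x0=1.0, num_steps=1000, filter_fn=lambda x: x > 0)
```

Because the filter check sits before the thinning step, a filtered iteration records no sample at all, which differs from treating the candidate as rejected (that would record the current state again). Assuming the rest of the After Change loop matches the Before Change version, pyprob's `continue` likewise skips `posterior.add` for that iteration.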