19fbae4ea6092bfc69e4f828febbd15f72365311,cesium/features/cadence_features.py,,delta_t_hist,#,22

Before Change

def delta_t_hist(t, nbins=50):
    """Build histogram of all possible delta_t's without storing every value"""
    hist = np.zeros(nbins, dtype="int")
    bins = np.linspace(0, max(t) - min(t), nbins + 1)
    for i in range(len(t)):
        hist += np.histogram(t[i] - t[:i], bins=bins)[0]
        hist += np.histogram(t[i + 1:] - t[i], bins=bins)[0]
    return hist / 2  # Double-counts since we loop over every pair twice

def normalize_hist(hist, total_time):

After Change

and then aggregate the result to have `nbins` total values.

    f, x = np.histogram(t, bins=conv_oversample * nbins)
    g = np.convolve(f, f[::-1])[len(f) - 1:]  # Discard negative domain
    g[0] -= len(t)  # First bin is double-counted because of i=j terms
    hist = g.reshape((-1, conv_oversample)).sum(axis=1)  # Combine bins

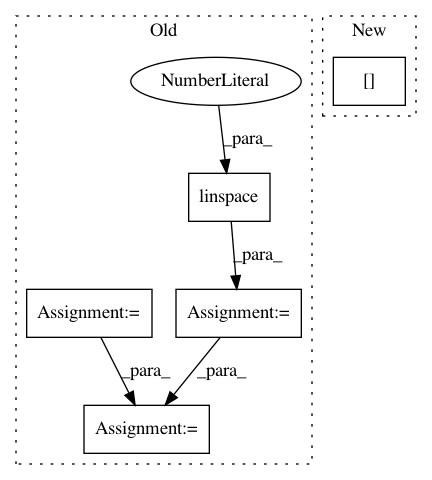

    return hist

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 5

Instances

Project Name: cesium-ml/cesium

Commit Name: 19fbae4ea6092bfc69e4f828febbd15f72365311

Time: 2016-11-10

Author: brettnaul@gmail.com

File Name: cesium/features/cadence_features.py

Class Name:

Method Name: delta_t_hist

Project Name: scikit-learn-contrib/imbalanced-learn

Commit Name: aa6af82f458acf3f853e5174d34b11d319eea1c0

Time: 2016-06-17

Author: victor.dvro@gmail.com

File Name: unbalanced_dataset/under_sampling/instance_hardness_threshold.py

Class Name: InstanceHardnessThreshold

Method Name: transform

Project Name: suavecode/SUAVE

Commit Name: b92a9d6bc5437898b194c9246b96420207cf4f9f

Time: 2020-12-23

Author: jtrentsmart@gmail.com

File Name: trunk/SUAVE/Methods/Performance/V_h_diagram.py

Class Name:

Method Name: V_h_diagram

Project Name: librosa/librosa

Commit Name: b7c2f6e9ccd65a53d8ae9aa0d3ee287ce9c93019

Time: 2014-02-07

Author: brm2132@columbia.edu

File Name: librosa/feature.py

Class Name:

Method Name: estimate_tuning
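
The cesium-ml/cesium instance replaces an explicit loop over pairs with a convolution of the binned time stamps. The following is a minimal sketch of that idea, not the project's actual code. The function names `delta_t_hist_bruteforce` and `delta_t_hist_convolution` are hypothetical, and the default `conv_oversample=50` is an assumption, since the record does not show the changed signature. The convolution only sees bin indices, so its result approximates the exact pairwise histogram and generally gets closer as `conv_oversample` grows.

```python
import numpy as np


def delta_t_hist_bruteforce(t, nbins=50):
    """Exact histogram of |t_i - t_j| over all unordered pairs i < j."""
    t = np.asarray(t, dtype=float)
    bins = np.linspace(0, t.max() - t.min(), nbins + 1)
    i, j = np.triu_indices(len(t), k=1)  # every pair once, no i == j
    return np.histogram(np.abs(t[i] - t[j]), bins=bins)[0]


def delta_t_hist_convolution(t, nbins=50, conv_oversample=50):
    """Approximate pairwise-difference histogram via autocorrelation.

    `conv_oversample` (assumed default) is the number of fine bins that
    are summed into each of the `nbins` output bins.
    """
    t = np.asarray(t, dtype=float)
    # Fine-grained counts of the time stamps themselves.
    f, _ = np.histogram(t, bins=conv_oversample * nbins)
    # Autocorrelation of the counts; keep only non-negative lags.
    g = np.convolve(f, f[::-1])[len(f) - 1:]
    # Lag 0 includes each point paired with itself; remove those terms.
    g[0] -= len(t)
    # Sum groups of `conv_oversample` fine lags into coarse bins.
    return g.reshape((-1, conv_oversample)).sum(axis=1)


if __name__ == "__main__":
    t = [0, 1, 2, 3]
    print(delta_t_hist_bruteforce(t, nbins=3))
    print(delta_t_hist_convolution(t, nbins=3, conv_oversample=1))
```

The brute-force version needs O(n^2) memory for the index pairs, while the convolution works on a fixed number of bins regardless of how many time stamps there are. Pairs that fall into the same fine bin are still counted twice at lag 0 after the self-pair correction, so the convolution total can exceed n(n-1)/2.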