14c2381207c5972359b2af450a233730ff877ee1,TTS/bin/train_wavegrad.py,,train,#,84

Before Change

```python
# backward pass with loss scaling
optimizer.zero_grad()
if amp is not None:
    with amp.scale_loss(loss, optimizer) as scaled_loss:
        scaled_loss.backward()
else:
    loss.backward()
if c.clip_grad > 0:
    grad_norm = torch.nn.utils.clip_grad_norm_(model.parameters(),
                                               c.clip_grad)
optimizer.step()
```
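The `clip_grad_norm_` call rescales all gradients together whenever their combined L2 norm exceeds `c.clip_grad`. Below is a plain-Python sketch of that global-norm rule. It assumes each gradient is a flat list of floats, and the function name `clip_grad_norm` is hypothetical. It mirrors the default `norm_type=2` behaviour of `torch.nn.utils.clip_grad_norm_` but is not PyTorch's implementation.

```python
import math
from typing import List


def clip_grad_norm(grads: List[List[float]], max_norm: float, eps: float = 1e-6) -> float:
    """Hypothetical sketch: clip gradients in place by their global L2 norm.

    Returns the global norm measured before clipping, like clip_grad_norm_.
    """
    # Global norm: L2 norm over every element of every gradient.
    total_norm = math.sqrt(sum(g * g for grad in grads for g in grad))
    clip_coef = max_norm / (total_norm + eps)
    if clip_coef < 1.0:
        # Scale every gradient by the same factor so the direction is preserved.
        for grad in grads:
            for i in range(len(grad)):
                grad[i] *= clip_coef
    return total_norm
```

Because every gradient is multiplied by the same factor, only the length of the update is capped, not its direction. When loss scaling is active, the PyTorch AMP documentation recommends unscaling gradients with `scaler.unscale_(optimizer)` before clipping. Otherwise the threshold is compared against scaled gradients.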

After Change

```python
    scaler.update()
else:
    loss.backward()
    grad_norm = torch.nn.utils.clip_grad_norm_(model.parameters(),
                                               c.clip_grad)
    optimizer.step()
```
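The after-change branch ends with `scaler.update()`, which suggests the commit replaces apex's `amp.scale_loss` context with a gradient scaler in the style of `torch.cuda.amp.GradScaler`. The excerpt does not show how `scaler` is constructed, so that is an assumption. The sketch below illustrates the dynamic loss-scaling rule such a scaler applies, in plain Python over lists of floats. `step()` unscales the gradients and skips the update if any of them is inf/NaN. `update()` then halves the scale after an overflow, or doubles it after `growth_interval` consecutive clean steps. The class `DynamicLossScaler` is hypothetical, and its default constants are taken from `GradScaler`'s documented defaults.

```python
import math
from typing import Callable, List


class DynamicLossScaler:
    """Hypothetical plain-Python sketch of dynamic loss scaling.

    Defaults follow the documented defaults of torch.cuda.amp.GradScaler,
    but this is not PyTorch's implementation.
    """

    def __init__(self, init_scale: float = 2.0 ** 16, growth_factor: float = 2.0,
                 backoff_factor: float = 0.5, growth_interval: int = 2000):
        self.scale = init_scale
        self.growth_factor = growth_factor
        self.backoff_factor = backoff_factor
        self.growth_interval = growth_interval
        self._good_steps = 0     # consecutive steps with finite gradients
        self._found_inf = False  # outcome of the most recent step()

    def step(self, scaled_grads: List[float],
             apply_update: Callable[[List[float]], None]) -> bool:
        """Unscale the gradients and apply them only if all are finite."""
        grads = [g / self.scale for g in scaled_grads]
        self._found_inf = not all(math.isfinite(g) for g in grads)
        if not self._found_inf:
            apply_update(grads)
        return not self._found_inf

    def update(self) -> None:
        """Adjust the scale based on the outcome of the last step()."""
        if self._found_inf:
            # Overflow: shrink the scale and restart the growth counter.
            self.scale *= self.backoff_factor
            self._good_steps = 0
        else:
            self._good_steps += 1
            if self._good_steps == self.growth_interval:
                # A full interval without overflow: try a larger scale.
                self.scale *= self.growth_factor
                self._good_steps = 0
```

Skipping the step on overflow and backing off the scale lets training start from a large scale (2^16) without hand-tuning a static loss scale. Calling `update()` once per iteration, after `step()`, matches the `scaler.step(...)` / `scaler.update()` order used with `GradScaler`.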

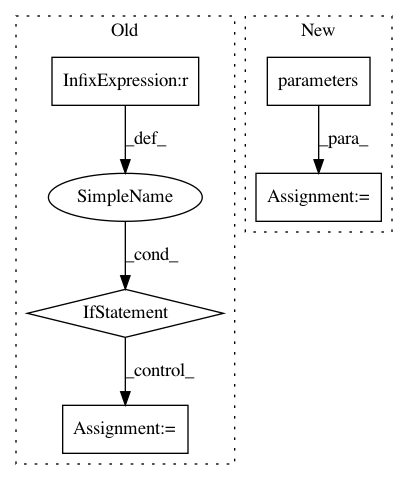

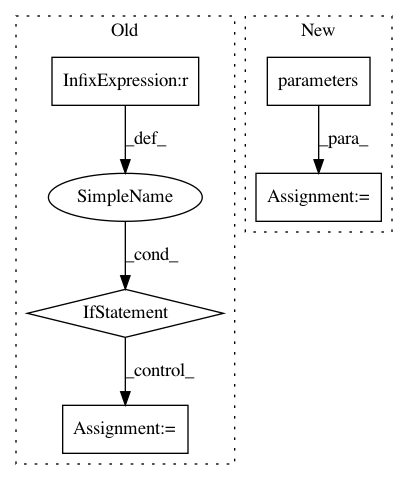

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

| Project Name | Commit Name | Time | Author | File Name | Class Name | Method Name |
|---|---|---|---|---|---|---|
| mozilla/TTS | 14c2381207c5972359b2af450a233730ff877ee1 | 2020-10-29 | erogol@hotmail.com | `TTS/bin/train_wavegrad.py` | | `train` |
| jindongwang/transferlearning | 376b01c2e338ec63e638f62a76d67f6a9323e47c | 2019-08-14 | jindongwang@outlook.com | `code/deep/DeepCoral/DeepCoral.py` | | |
| havakv/pycox | e2a50c54a397f5912e596b2c56a35ff9442031bc | 2018-05-07 | havard@DN0a22c460.SUNet | `pycox/models/high_level.py` | `CoxPHLinear` | `__init__` |