d0c329325c37ce342f78f43fc8486b5aa0e87d72,core/tokenizer.py,,,#,19

Before Change

"Jan": 1, "Feb": 1, "Mar": 1, "Apr": 1, "Jun": 1, "Jul": 1, "Aug": 1, "Sep": 1, "Oct": 1, "Nov": 1, "Dec": 1

}"""

# Hey, it's reddit, better use lowercased list (and lowercase matched word later in code)

nonbreaking_prefixes = {

"a": 1, "b": 1, "c": 1, "d": 1, "e": 1, "f": 1, "g": 1, "h": 1, "i": 1, "j": 1, "k": 1, "l": 1, "m": 1, "n": 1, "o": 1, "p": 1, "q": 1, "r": 1, "s": 1, "t": 1, "u": 1, "v": 1, "w": 1, "x": 1, "y": 1, "z": 1,

"adj": 1, "adm": 1, "adv": 1, "asst": 1, "bart": 1, "bldg": 1, "brig": 1, "bros": 1, "capt": 1, "cmdr": 1, "col": 1, "comdr": 1, "con": 1, "corp": 1, "cpl": 1, "dR": 1, "dr": 1, "drs": 1, "ens": 1,

"gen": 1, "gov": 1, "hon": 1, "hr": 1, "hosp": 1, "insp": 1, "lt": 1, "mm": 1, "mr": 1, "mrs": 1, "ms": 1, "maj": 1, "messrs": 1, "mlle": 1, "mme": 1, "msgr": 1,

"op": 1, "ord": 1, "pfc": 1, "ph": 1, "prof": 1, "pvt": 1, "rep": 1, "reps": 1, "res": 1, "rt": 1, "sen": 1, "sens": 1, "sfc": 1, "sgt": 1, "sr": 1, "st": 1, "supt": 1, "surg": 1,

"vs": 1, "i.e": 1, "rev": 1, "e.g": 1,

"no": 2, "nos": 1, "nrt": 2, "nr": 1, "pp": 2,

"jan": 1, "feb": 1, "mar": 1, "apr": 1, "jun": 1, "jul": 1, "aug": 1, "sep": 1, "oct": 1, "nov": 1, "dec": 1

}

# Load list of protected words/phrases (those will not be broken)

protected_phrases = []

with open(preprocessing["protected_phrases_file"], "r", encoding="utf-8") as protected_file:
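The comment in the snippet above says the prefix table is lowercased and that the matched word is lowercased before lookup. A minimal sketch of such a lookup follows. The helper name `is_nonbreaking` and the reduced table are hypothetical, and the meaning of the values is an assumption borrowed from the Moses tokenizer convention (1: the trailing period never ends a token, 2: it stays attached only when a number follows); the commit itself does not confirm this.

```python
# Hypothetical subset of the lowercased table shown in the snippet above.
nonbreaking_prefixes = {"dr": 1, "mr": 1, "vs": 1, "no": 2, "pp": 2}


def is_nonbreaking(word, next_word=""):
    """Return True if the period ending `word` should stay attached.

    Assumption (Moses convention, not confirmed by the commit):
    1 = always keep the period attached,
    2 = keep it attached only when the next token starts with a digit.
    """
    if not word.endswith("."):
        return False
    # Lowercase the candidate so "Dr.", "DR." and "dr." all hit the same key.
    kind = nonbreaking_prefixes.get(word[:-1].lower())
    if kind == 1:
        return True
    if kind == 2:
        return next_word[:1].isdigit()
    return False
```

Lowercasing at lookup time keeps the table small: one key per prefix covers every casing that appears in informal text.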

After Change

with open(preprocessing["protected_phrases_file"], "r", encoding="utf-8") as protected_file:

    protected_phrases = list(filter(None, protected_file.read().split("\n")))

protected_phrases_regex = []

with open(preprocessing["protected_phrases_regex_file"], "r", encoding="utf-8") as protected_file:

    protected_phrases_regex = list(filter(None, protected_file.read().split("\n")))

# Tokenize sentence

def tokenize(sentence):

    # Remove special tokens

    sentence = re.sub("<unk>|<s>|</s>", "", sentence, flags=re.IGNORECASE)
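The two steps in the changed code, reading a one-entry-per-line phrase file and stripping special tokens, can be tried in isolation. The sketch below is illustrative only: `load_phrases` and `strip_special_tokens` are hypothetical helper names, and the file path is passed in directly rather than read from the project's `preprocessing` settings.

```python
import re


def load_phrases(path):
    """Read one phrase per line and drop empty lines.

    filter(None, ...) removes empty strings only, so a line that
    contains just spaces would be kept.
    """
    with open(path, "r", encoding="utf-8") as phrase_file:
        return list(filter(None, phrase_file.read().split("\n")))


def strip_special_tokens(sentence):
    """Remove <unk>, <s> and </s> markers, ignoring case."""
    return re.sub("<unk>|<s>|</s>", "", sentence, flags=re.IGNORECASE)
```

Note that removing a token leaves the surrounding whitespace in place, so `"hello <UNK> world"` becomes `"hello  world"` with two spaces; any whitespace normalization would have to happen in a later step.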

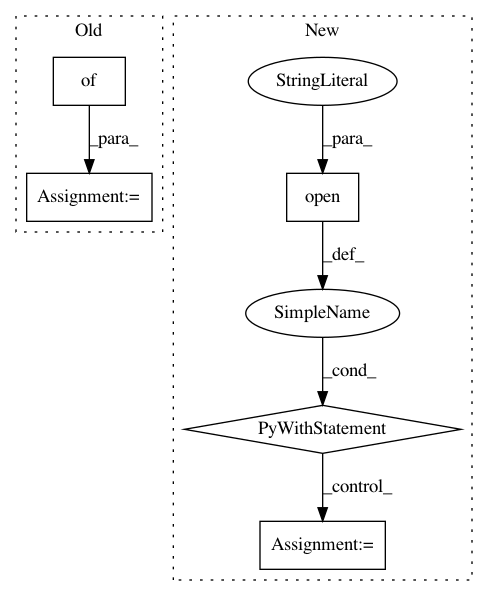

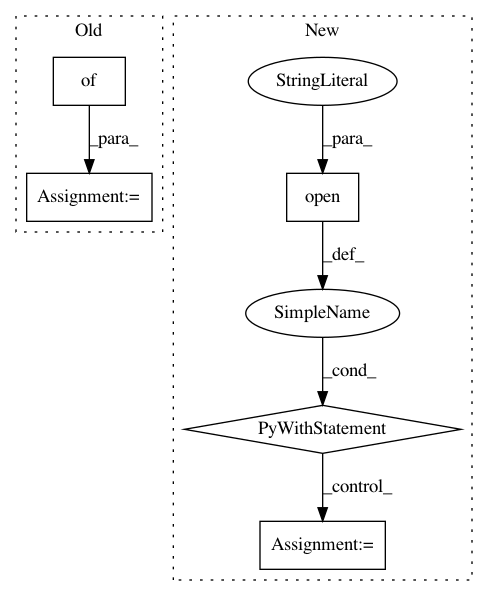

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: daniel-kukiela/nmt-chatbot

Commit Name: d0c329325c37ce342f78f43fc8486b5aa0e87d72

Time: 2017-11-22

Author: daniel@kukiela.pl

File Name: core/tokenizer.py

Class Name:

Method Name:

Project Name: estnltk/estnltk

Commit Name: 70241c951da537919f8203e4bb5c8ae1123aeb2f

Time: 2016-11-15

Author: u.raudvere@gmail.com

File Name: docs/conf.py

Class Name:

Method Name:

Project Name: UFAL-DSG/tgen

Commit Name: e4eac21828a6b9037d314266908f4ba083b1940e

Time: 2015-12-15

Author: odusek@ufal.mff.cuni.cz

File Name: tgen/parallel_percrank_train.py

Class Name:

Method Name: dump_ranker